The training dataset I'm using is a grayscale image that was flattened so that each pixel represents an individual sample. After the multilayer perceptron (MLP) classifier has been trained on this image, a second image will be classified pixel by pixel.
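A minimal sketch of the flattening step, assuming each pixel is described by a single feature (its intensity). The random image is a stand-in for the real data, and `fake_predictions` is a hypothetical placeholder for classifier output. It shows the (n_samples, n_features) layout scikit-learn expects and how per-pixel predictions can be reshaped back into an image:

```python
import numpy as np

# Hypothetical 512x512 grayscale image (random values stand in for the real data)
rng = np.random.default_rng(0)
image = rng.random((512, 512))

# Flatten so each pixel becomes one sample with a single feature (its intensity);
# scikit-learn expects a 2D array of shape (n_samples, n_features)
samples = image.reshape(-1, 1)
print(samples.shape)  # (262144, 1)

# Per-pixel predictions (here a hypothetical threshold rule) can be reshaped
# back into an image with the original dimensions
fake_predictions = (samples[:, 0] > 0.5).astype(int)
prediction_image = fake_predictions.reshape(image.shape)
print(prediction_image.shape)  # (512, 512)
```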

The problem is that the MLP performs better when it receives the whole training dataset at once (fit()) than when it is trained in chunks (partial_fit()). I'm keeping the default parameters provided by scikit-learn in both cases.
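The comparison can be reproduced on synthetic data with a sketch like the one below. The data and the labelling rule are assumptions for illustration only. Note that with default parameters fit() runs up to max_iter=200 epochs (the fitted n_iter_ attribute reports how many it actually ran), whereas each partial_fit() call makes a single pass over the chunk it receives:

```python
import numpy as np
from sklearn.neural_network import MLPClassifier

# Synthetic stand-in for the per-pixel data: one intensity feature per sample,
# label 1 when the intensity exceeds 0.5 (an assumption for illustration only)
rng = np.random.default_rng(0)
X = rng.random((2000, 1))
y = (X[:, 0] > 0.5).astype(int)

# Variant 1: the whole training set at once, default parameters
full = MLPClassifier(random_state=0)
full.fit(X, y)

# Variant 2: one pass over chunks of 1000 samples, default parameters
chunked = MLPClassifier(random_state=0)
for start in range(0, len(X), 1000):
    chunked.partial_fit(X[start:start + 1000], y[start:start + 1000],
                        classes=np.array([0, 1]))

print("fit() accuracy:        ", full.score(X, y))
print("partial_fit() accuracy:", chunked.score(X, y))
print("epochs run by fit():", full.n_iter_)
```

Fixing random_state on both models only makes the runs repeatable; it does not make the two training procedures equivalent.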

I'm asking because once the training dataset reaches millions of samples, I will have to use partial_fit() to train the MLP in chunks.

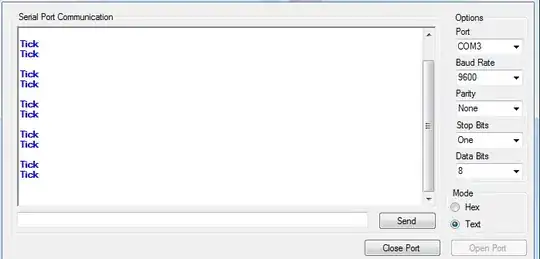

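```python
import numpy as np
from sklearn.neural_network import MLPClassifier


def batcherator(data, target, chunksize):
    # Yield successive (data, target) slices of at most `chunksize` samples
    for i in range(0, len(data), chunksize):
        yield data[i:i + chunksize], target[i:i + chunksize]


def classify(training_data, training_target, test_data):
    classifier = MLPClassifier(verbose=True)

    # classifier.fit(training_data, training_target)

    # Train incrementally on chunks of 1000 samples; `classes` must list every
    # label because a single chunk may not contain all of them
    gen = batcherator(training_data, training_target, 1000)
    for chunk_data, chunk_target in gen:
        classifier.partial_fit(chunk_data, chunk_target,
                               classes=np.array([0, 1]))

    predictions = classifier.predict(test_data)
    return predictions
```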
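To see what batcherator actually hands to partial_fit(), here is a small usage sketch on hypothetical data (2500 samples whose label switches halfway through). It shows the chunk sizes, including the shorter final chunk, and that chunks follow the order of the flattened array, so an individual chunk can contain a single class:

```python
import numpy as np


def batcherator(data, target, chunksize):
    # Same generator as above: consecutive slices of at most `chunksize` rows
    for i in range(0, len(data), chunksize):
        yield data[i:i + chunksize], target[i:i + chunksize]


# Hypothetical data: 2500 single-feature samples, label 1 for the second half
data = np.arange(2500, dtype=float).reshape(-1, 1)
target = (data[:, 0] >= 1250).astype(int)

chunk_sizes = [len(chunk_data) for chunk_data, _ in batcherator(data, target, 1000)]
print(chunk_sizes)  # [1000, 1000, 500]

# Classes present in each chunk, in the order the chunks are produced
chunk_labels = [set(chunk_target.tolist()) for _, chunk_target in batcherator(data, target, 1000)]
print(chunk_labels)  # [{0}, {0, 1}, {1}]
```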

My question is: which parameters of the MLP classifier should I adjust so that, when it is trained on chunks of data, its results come closer to those obtained with fit()?

I tried increasing the number of neurons in the hidden layer with hidden_layer_sizes, but saw no improvement. Changing the activation function of the hidden layer from the default relu to logistic with the activation parameter did not help either.
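The two changes described above can be expressed as the following sketch. The hidden layer size of 200 is a hypothetical value, since the size actually tried is not stated, and the synthetic data is an assumption for illustration. scikit-learn's defaults are hidden_layer_sizes=(100,) and activation='relu':

```python
import numpy as np
from sklearn.neural_network import MLPClassifier

# Default model plus the two variations tried; (200,) is an illustrative size only
configs = {
    "default (relu, 100 units)": MLPClassifier(random_state=0),
    "wider hidden layer": MLPClassifier(hidden_layer_sizes=(200,), random_state=0),
    "logistic activation": MLPClassifier(activation="logistic", random_state=0),
}

# Synthetic single-feature data standing in for the flattened image
rng = np.random.default_rng(0)
X = rng.random((2000, 1))
y = (X[:, 0] > 0.5).astype(int)

scores = {}
for name, clf in configs.items():
    # Same chunked training loop as in the question: one pass, 1000 samples per chunk
    for start in range(0, len(X), 1000):
        clf.partial_fit(X[start:start + 1000], y[start:start + 1000],
                        classes=np.array([0, 1]))
    scores[name] = clf.score(X, y)
    print(f"{name}: {scores[name]:.3f}")
```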

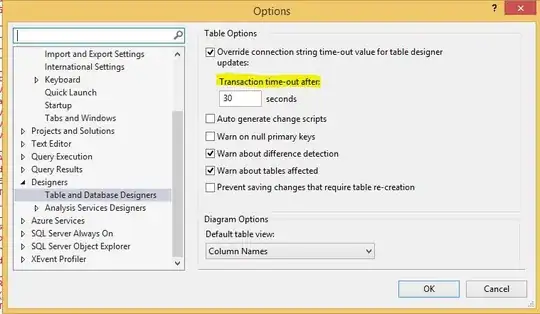

Below are the images I'm working on (all of them 512x512), each with a link to the Google Fusion Table it was exported to as CSV from the NumPy array (so that the values stay floats instead of ints):

Training_data:

The white areas are masked out: Google Fusion Table (training_data)

Class0:

Class1:

Training_target:

Google Fusion Table (training_target)

Test_data:

Google Fusion Table (test_data)
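The export method isn't specified beyond "CSV from the numpy arrays", so the following is only a sketch assuming np.savetxt and np.loadtxt, with an in-memory buffer and a random image standing in for the real files. It shows a 512x512 float array surviving a CSV round trip without being converted to ints:

```python
import io

import numpy as np

# Hypothetical 512x512 float image standing in for training_data / test_data
rng = np.random.default_rng(0)
image = rng.random((512, 512))

# Write as CSV; np.savetxt's default fmt ('%.18e') keeps full float precision.
# An in-memory buffer is used here instead of a file on disk.
buffer = io.StringIO()
np.savetxt(buffer, image, delimiter=",")

# Read it back; np.loadtxt returns float64 by default
buffer.seek(0)
restored = np.loadtxt(buffer, delimiter=",")
print(restored.shape, restored.dtype)  # (512, 512) float64
```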

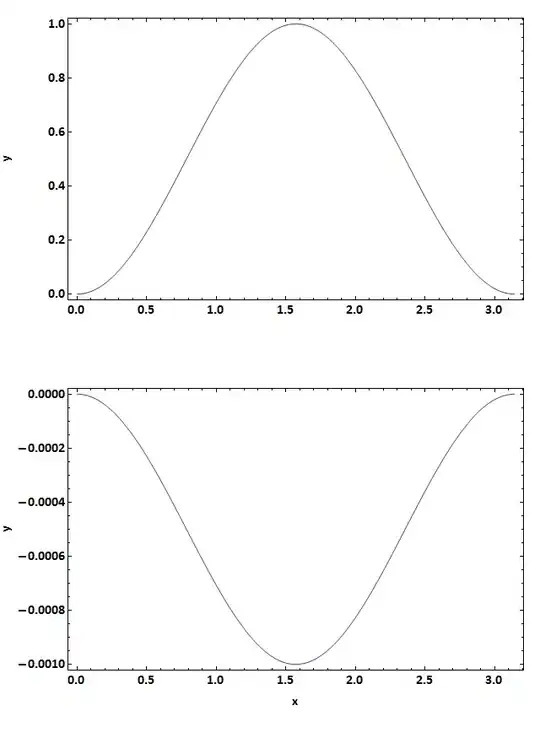

Prediction (with partial_fit):