I'm confused about how to get the best from dask.

The problem

I have a dataframe that contains several time series (each one has its own key), and I need to run a function `my_fun` on each of them. One way to solve it with pandas involves

df = list(df.groupby("key"))

and then applying `my_fun` to each group with multiprocessing. The performance, despite the heavy RAM usage, is pretty good on my machine and terrible on Google Cloud compute.
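To make the pandas approach concrete, here is a minimal sketch of it. The toy data, the `value` column, the body of `my_fun`, and the `run_per_key` helper are all assumptions made up for illustration; the real function and columns are different.

```python
import multiprocessing as mp

import pandas as pd


def my_fun(group):
    # Hypothetical stand-in for the real per-series function.
    return group["value"].sum()


def run_per_key(df, pool=None):
    # Split the frame into one sub-frame per key (this copies the data,
    # which is where the heavy RAM usage comes from).
    groups = list(df.groupby("key"))
    keys = [key for key, _ in groups]
    frames = [frame for _, frame in groups]
    # Run serially when no pool is given, otherwise fan out to the workers.
    mapper = pool.map if pool is not None else map
    results = list(mapper(my_fun, frames))
    return pd.Series(results, index=keys)


# Toy data: three short time series identified by "key".
df = pd.DataFrame(
    {"key": [1, 2, 1, 3, 2, 3], "value": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]}
)

if __name__ == "__main__":
    with mp.Pool(processes=2) as pool:
        print(run_per_key(df, pool=pool))
```

Each sub-frame is pickled and sent to a worker process, so the transfer cost grows with the size of the groups.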

On Dask my current workflow is:

import dask.dataframe as dd

from dask.multiprocessing import get

- Read data from S3. 14 files -> 14 partitions

- `df.groupby("key").apply(my_fun).to_frame().compute(get=get)`

As I didn't set the index, `df.known_divisions` is `False`.

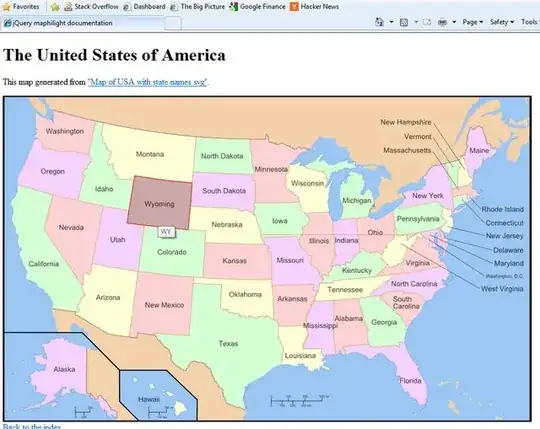

The resulting graph is

and I don't understand if what I see it is a bottleneck or not.


Questions:

- Is it better to have `df.npartitions` as a multiple of `ncpu`, or does it not matter?

- From this it seems that it is better to set the index as the key. My guess is that I can do something like

df["key2"] = df["key"]

df = df.set_index("key2")

but, again, I don't know if this is the best way to do it.