Is it possible to pass parameters to Airflow's jobs through the UI?

AFAIK, the 'params' argument of a DAG is defined in Python code, so it can't be changed at runtime.

Depending on what you're trying to do, you might be able to leverage Airflow Variables. These can be defined or edited in the UI under the Admin tab. Then your DAG code can read the value of the variable and pass the value to the DAG(s) it creates.

Note, however, that although Variables let you decouple values from code, all runs of a DAG will read the same value for the variable. If you want different runs to receive different values, your best bet is probably to use Airflow's templating macros and tell runs apart with the run_id macro or similar.
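As a rough sketch of the Variable approach described above: the DAG file reads a value at parse time and hands it to whatever it builds. The variable name my_dag_exec_date, the default value, and the helper build_dag_settings are all made up for illustration. The getter is passed in as an argument so the snippet runs without an Airflow installation; in a real DAG file you would pass airflow.models.Variable.get, which (in Airflow 1.10 and 2.x, as far as I know) accepts a default_var keyword.

```python
def build_dag_settings(get_variable):
    """Collect settings for a DAG from a Variable-style getter.

    get_variable is assumed to behave like airflow.models.Variable.get:
    get_variable(key, default_var=...) returns the stored value, or the
    default when the variable has not been defined in Admin > Variables.
    """
    # "my_dag_exec_date" is a hypothetical variable name.
    exec_date = get_variable("my_dag_exec_date", default_var="2021-09-14")
    return {"exec_date": exec_date}


# In a DAG file this would be used roughly as follows (assumption, not run here):
#   from airflow.models import Variable
#   settings = build_dag_settings(Variable.get)
```

Every run of the DAG sees the same value until someone edits the variable in the UI, which is the limitation mentioned above.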

Two ways to change your DAG behavior:

UI - manual trigger from tree view

UI - create new DAG run from browse > DAG runs > create new record

or from the CLI (this is the Airflow 1.x syntax; in Airflow 2.x the subcommand is 'airflow dags trigger'):

    airflow trigger_dag 'MY_DAG' -r 'test-run-1' --conf '{"exec_date":"2021-09-14"}'

Within the DAG, this JSON can be accessed using Jinja templates or through the context parameter of the operator's callable function.

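    # Airflow 1.10-style imports; in Airflow 2.x the modules are
    # airflow.operators.python and airflow.operators.bash.
    from airflow.operators.bash_operator import BashOperator
    from airflow.operators.python_operator import PythonOperator

    # `dag` is assumed to be a DAG object defined earlier in this file.

    def do_some_task(**context):
        # Read the value passed with --conf from the current DAG run
        print(context['dag_run'].conf['exec_date'])

    task1 = PythonOperator(
        task_id='task1_id',
        provide_context=True,  # needed in Airflow 1.x only
        python_callable=do_some_task,
        dag=dag,
    )

    # Access in templates
    task2 = BashOperator(
        task_id="task2_id",
        # echo the value; the bare template would be run as a command
        bash_command="echo {{ dag_run.conf['exec_date'] }}",
        dag=dag,
    )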

Note that the JSON conf will not be present during scheduled runs. The best use case for the JSON conf is to override the default DAG behavior, so set meaningful defaults in the DAG code and make sure scheduled runs do not depend on the JSON conf.
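One way to set such defaults, as a minimal sketch: look the key up defensively and fall back to a default when the run carries no conf. The helper name get_conf_value and the choice of today's date as the default are assumptions for illustration, not part of Airflow. The callable is written so it can be passed as python_callable to a PythonOperator.

```python
from datetime import date


def get_conf_value(conf, key, default):
    """Return conf[key] if the run was triggered with that key, else default."""
    # On scheduled runs dag_run.conf can be None or an empty dict.
    if not conf:
        return default
    return conf.get(key, default)


def do_some_task(**context):
    # dag_run is supplied by Airflow in the task context.
    dag_run = context.get("dag_run")
    conf = getattr(dag_run, "conf", None)
    # Hypothetical default: today's date in ISO format.
    exec_date = get_conf_value(conf, "exec_date", date.today().isoformat())
    print(exec_date)
    return exec_date
```

In a template, a similar fallback could probably be written as {{ (dag_run.conf or {}).get('exec_date', ds) }}, where ds is Airflow's built-in date macro.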

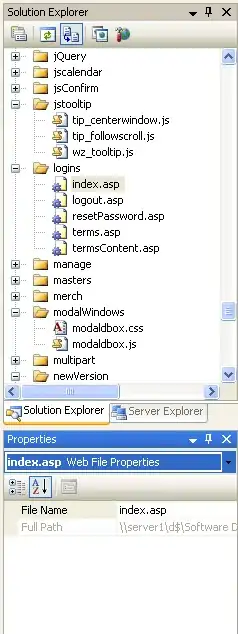

The answer by ns15 can be made more user-friendly by building a user interface inside the Airflow web UI. Airflow's interface can be extended with plugins, for instance web views. Plugins are saved in the Airflow plugins folder, normally $AIRFLOW_HOME/plugins.

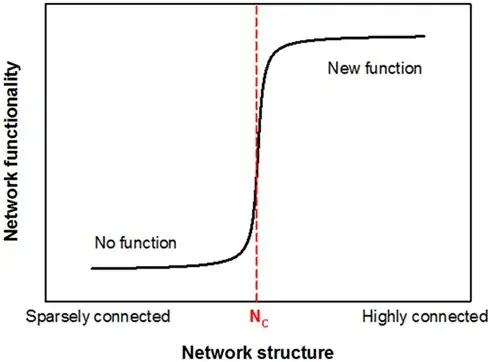

A full example is given here, where this UI image has been found:

A form builder will be built into Airflow 2.6.0, without the need for a plugin, thanks to AIP-50 (Airflow Improvement Proposal 50).

Sample views: