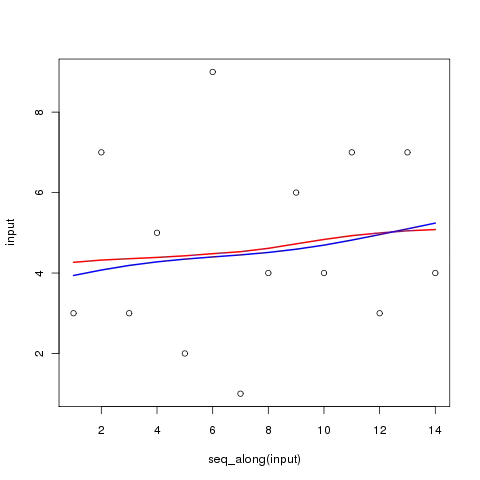

Here is my dataframe:

The underlying RDD has 2 partitions

When I run `df.count`, the DAG produced is:

When I run `df.rdd.count`, the DAG produced is:

Question: `count` is an action in Spark; the official definition is ‘Returns the number of rows in the DataFrame.’ When I call `count` on the DataFrame, why does a shuffle occur? When I do the same on the underlying RDD, no shuffle occurs.

It makes no sense to me why a shuffle would occur at all. I tried to go through the source code of `count` on the Spark GitHub, but I don’t fully understand it. Is the `groupBy` being supplied to the action the culprit?

PS. `df.coalesce(1).count` does not cause any shuffle.
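For anyone who wants to reproduce this, here is a minimal sketch. It assumes a local `SparkSession` and uses a hypothetical two-partition DataFrame built with `spark.range` as a stand-in for mine; the object name and column name are made up for illustration. It prints the physical plan of `groupBy().count()`, which is the query I believe `count` builds internally, so the plan can be checked for an `Exchange` node with and without `coalesce(1)`.

```scala
import org.apache.spark.sql.SparkSession

// Hypothetical reproduction; names and data are illustrative, not from my real job.
object CountShuffleRepro {
  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[2]")
      .appName("count-shuffle-repro")
      .getOrCreate()

    // Stand-in DataFrame with 2 partitions (assumption: my real data behaves the same way).
    val df = spark.range(0L, 10L, 1L, 2).toDF("id")
    println(s"partitions: ${df.rdd.getNumPartitions}")

    // Physical plan of the global aggregation; look for an Exchange between aggregate nodes.
    df.groupBy().count().explain()

    // Same aggregation after collapsing to a single partition, for comparison.
    df.coalesce(1).groupBy().count().explain()

    // The two actions from the question; their DAGs can be compared in the Spark UI.
    val dfCount = df.count()
    val rddCount = df.rdd.count()
    println(s"df.count = $dfCount, df.rdd.count = $rddCount")

    spark.stop()
  }
}
```

Comparing the two printed plans should show whether the extra stage comes from the aggregation that `count` is planned as rather than from the data itself.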