I'm trying to make TensorFlow's MFCC computation give me the same results as Python librosa's MFCC. I have tried to match all the default parameters that librosa uses in my TensorFlow code, but I still get a different result.

This is the TensorFlow code that I have used:

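```python
import functools

import tensorflow as tf  # TensorFlow 1.x (uses tf.contrib)
from tensorflow.contrib.framework.python.ops import audio_ops as contrib_audio

sample_rate = 16000  # must be defined before it is used below

# audio_binary holds the raw bytes of a WAV file (e.g. from tf.read_file)
waveform = contrib_audio.decode_wav(
    audio_binary,
    desired_channels=1,
    desired_samples=sample_rate,  # i.e. one second of audio
    name='decoded_sample_data')

# decode_wav returns (audio, sample_rate); audio has shape [samples, 1]
transwav = tf.transpose(waveform[0])  # -> [1, samples]

stfts = tf.contrib.signal.stft(
    transwav,
    frame_length=2048,
    frame_step=512,
    fft_length=2048,
    window_fn=functools.partial(tf.contrib.signal.hann_window,
                                periodic=False),
    pad_end=True)

spectrograms = tf.abs(stfts)  # magnitude spectrogram
num_spectrogram_bins = stfts.shape[-1].value  # fft_length // 2 + 1 = 1025

lower_edge_hertz, upper_edge_hertz, num_mel_bins = 0.0, 8000.0, 128
linear_to_mel_weight_matrix = tf.contrib.signal.linear_to_mel_weight_matrix(
    num_mel_bins, num_spectrogram_bins, sample_rate, lower_edge_hertz,
    upper_edge_hertz)

mel_spectrograms = tf.tensordot(
    spectrograms, linear_to_mel_weight_matrix, 1)
mel_spectrograms.set_shape(spectrograms.shape[:-1].concatenate(
    linear_to_mel_weight_matrix.shape[-1:]))

log_mel_spectrograms = tf.log(mel_spectrograms + 1e-6)

# Keep the first 20 coefficients (librosa's default n_mfcc)
mfccs = tf.contrib.signal.mfccs_from_log_mel_spectrograms(
    log_mel_spectrograms)[..., :20]
```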
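Two front-end settings in this snippet appear to differ from librosa's defaults, as far as I can tell. `hann_window(periodic=False)` builds a symmetric window, whereas librosa takes its window from `scipy.signal.get_window(..., fftbins=True)`, which is periodic. And TensorFlow's `linear_to_mel_weight_matrix` is documented as using the HTK mel formula, while librosa's mel filter bank defaults to the Slaney scale (`htk=False`) with Slaney area normalization. The NumPy sketch below only compares the two window shapes and the two mel formulas; `hann`, `hz_to_mel_htk` and `hz_to_mel_slaney` are hypothetical helper names of my own, and the Slaney constants are the ones I believe librosa's `hz_to_mel` uses.

```python
import numpy as np


def hann(n: int, periodic: bool) -> np.ndarray:
    """Hann window of length n, either periodic (DFT-even) or symmetric."""
    # np.hanning(m) is the symmetric window 0.5 - 0.5*cos(2*pi*k/(m-1)).
    # Dropping the last sample of a length n+1 symmetric window gives the
    # periodic window 0.5 - 0.5*cos(2*pi*k/n).
    return np.hanning(n + 1)[:-1] if periodic else np.hanning(n)


def hz_to_mel_htk(f):
    """HTK mel scale: 2595 * log10(1 + f / 700)."""
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)


def hz_to_mel_slaney(f):
    """Slaney mel scale (assumed librosa default): linear below 1 kHz, log above."""
    f = np.asarray(f, dtype=float)
    f_sp = 200.0 / 3.0               # Hz per mel in the linear region
    min_log_hz = 1000.0              # where the log region starts
    min_log_mel = min_log_hz / f_sp  # = 15 mel
    logstep = np.log(6.4) / 27.0     # mel step size in the log region
    linear = f / f_sp
    # np.maximum keeps log() away from 0 Hz; np.where picks the right branch
    logarithmic = min_log_mel + np.log(np.maximum(f, min_log_hz) / min_log_hz) / logstep
    return np.where(f >= min_log_hz, logarithmic, linear)


if __name__ == "__main__":
    # Symmetric vs periodic Hann for the question's frame_length of 2048
    diff = np.max(np.abs(hann(2048, periodic=False) - hann(2048, periodic=True)))
    print(f"max window difference: {diff:.2e}")
    for hz in (500.0, 1000.0, 4000.0, 8000.0):
        print(f"{hz:6.0f} Hz  HTK {float(hz_to_mel_htk(hz)):8.2f}  "
              f"Slaney {float(hz_to_mel_slaney(hz)):6.2f}")
```

Because the filter banks are laid out on different mel scales (and normalized differently), the 128 bands do not cover the same frequency ranges in the two libraries, which by itself changes every MFCC.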

The equivalent in librosa: `libr_mfcc = librosa.feature.mfcc(y=wav, sr=16000)`
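For comparison, `librosa.feature.mfcc` with its defaults computes a power (|X|^2) mel spectrogram, converts it with `librosa.power_to_db` (10 * log10, `ref=1.0`, `amin=1e-10`, `top_db=80`) and then applies an orthonormal DCT-II, keeping `n_mfcc=20` coefficients. It returns an array of shape `(n_mfcc, frames)`, i.e. transposed relative to the TensorFlow output. The TensorFlow code above instead applies the mel filters to the magnitude and uses a natural log, so no single constant maps one log-mel spectrogram onto the other. The NumPy sketch below (helper names are hypothetical) reimplements the dB conversion and compares the orthonormal DCT with the 1/sqrt(2N) scaling that, as I understand it, `mfccs_from_log_mel_spectrograms` applies (its docs describe this as "almost orthogonal normalization").

```python
import numpy as np


def power_to_db(s, ref=1.0, amin=1e-10, top_db=80.0):
    """Sketch of librosa.power_to_db with its default arguments."""
    s = np.asarray(s, dtype=float)
    log_spec = 10.0 * np.log10(np.maximum(amin, s))
    log_spec -= 10.0 * np.log10(max(amin, ref))
    # Values more than top_db below the peak are clipped to (peak - top_db)
    return np.maximum(log_spec, log_spec.max() - top_db)


def _dct2_basis(n):
    """Unnormalized DCT-II basis: B[k, m] = 2 * cos(pi * k * (2m + 1) / (2n))."""
    k = np.arange(n)[:, None]
    m = np.arange(n)[None, :]
    return 2.0 * np.cos(np.pi * k * (2 * m + 1) / (2 * n))


def dct2_ortho(x):
    """Orthonormal DCT-II over the last axis (SciPy's norm='ortho')."""
    n = x.shape[-1]
    scale = np.full(n, np.sqrt(1.0 / (2 * n)))
    scale[0] = np.sqrt(1.0 / (4 * n))  # the DC term gets a smaller factor
    return (x @ _dct2_basis(n).T) * scale


def dct2_tf_style(x):
    """Unnormalized DCT-II times 1/sqrt(2n) (assumed TF MFCC scaling)."""
    n = x.shape[-1]
    return (x @ _dct2_basis(n).T) / np.sqrt(2.0 * n)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    log_mel = rng.normal(size=(3, 128))   # stand-in for 3 frames x 128 mel bands
    a = dct2_ortho(log_mel)[..., :20]     # librosa-style coefficients
    b = dct2_tf_style(log_mel)[..., :20]  # TF-style coefficients
    print(np.allclose(a[..., 1:], b[..., 1:]))               # True
    print(np.allclose(b[..., 0], np.sqrt(2.0) * a[..., 0]))  # True
```

So even with identical log-mel inputs, only c0 would differ (by a factor of sqrt(2)); the larger gaps would come from the window, the mel filter bank, power vs magnitude, and the log/dB conversion.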

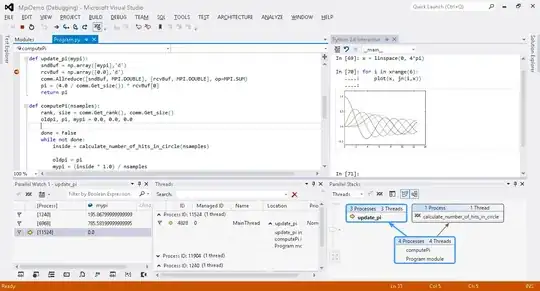

the following are the graphs of the results: