Consider the following program:

#include <iostream>

#include <array>

#include <unistd.h>

using clock_value_t = long long;

__device__ void gpu_sleep(clock_value_t sleep_cycles)

{

clock_value_t start = clock64();

clock_value_t cycles_elapsed;

do { cycles_elapsed = clock64() - start; }

while (cycles_elapsed < sleep_cycles);

}

__global__ void dummy(clock_value_t duration_in_cycles)

{

gpu_sleep(duration_in_cycles);

}

int main()

{

const clock_value_t duration_in_clocks = 1e7;

const size_t buffer_size = 2e7;

constexpr const auto num_streams = 8;

std::array<char*, num_streams> host_ptrs;

std::array<char*, num_streams> device_ptrs;

std::array<cudaStream_t, num_streams> streams;

for (auto i=0; i<num_streams; i++) {

cudaMallocHost(&host_ptrs[i], buffer_size);

cudaMalloc(&device_ptrs[i], buffer_size);

cudaStreamCreateWithFlags(&streams[i], cudaStreamNonBlocking);

}

cudaDeviceSynchronize();

for (auto i=0; i<num_streams; i++) {

cudaMemcpyAsync(device_ptrs[i], host_ptrs[i], buffer_size, cudaMemcpyDefault, streams[i]);

dummy<<<128, 128, 0, streams[i]>>>(duration_in_clocks);

cudaMemcpyAsync(host_ptrs[i], device_ptrs[i], buffer_size, cudaMemcpyDefault, streams[i]);

}

usleep(50000);

for (auto i=0; i<num_streams; i++) { cudaStreamSynchronize(streams[i]); }

for (auto i=0; i<num_streams; i++) {

cudaFreeHost(host_ptrs[i]);

cudaFree(device_ptrs[i]);

}

}

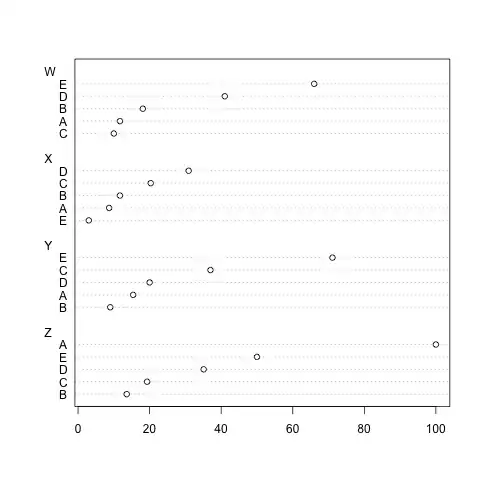

I'm running it on an GTX Titan X, with CUDA 8.0.61, on Fedora 25, with driver 375.66. The timeline I'm seeing is this:

There a few things wrong with this picture:

- As far as I can recall there can only be one HtoD transfer at a time.

- All of the memory transfers should take basically the same amount of time - they're of the same amount of data; and there's nothing else interesting going on with the PCIe bus to affect transfer rates so much.

- Some DtoH bars like like they're stretched out until something happens on another stream.

- There's this huge gap in which there seems to be no Computer and no real I/O. And even if the DtoH for all previously-completed kernels was to occupy that gap, that would still leave a very significant amount of time. That actually looks like a scheduling issue rather than a profiling error.

So, how should I interpret this timeline? And where does the problem lie? (Hopefully not with the programmer...)

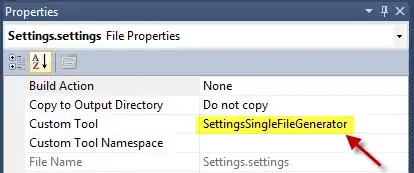

I should mention that with less streams (e.g. 2) the timeline looks very nice on the same SW+HW: