I am trying to improve my Spark Scala skills and I have this case which I cannot find a way to manipulate so please advise!

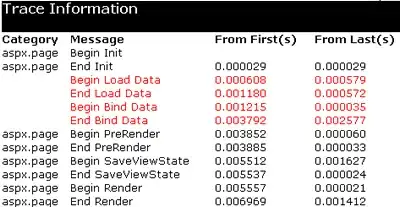

I have original data as it shown in the figure bellow:

I want to calculate the percentage of every result of the count column . E.g. the last error value is 64 how much is 64 as a percentage out of the all column values. Please note that I am reading the original data as Dataframes using sqlContext: Here is my code:

val df1 = df.groupBy(" Code")

.agg(sum("count").alias("sum"), mean("count")

.multiply(100)

.cast("integer").alias("percentage"))

I want results similar to this:

Thanks in advance!