I am trying to calculate the training error of a polynomial fitted to my training data.

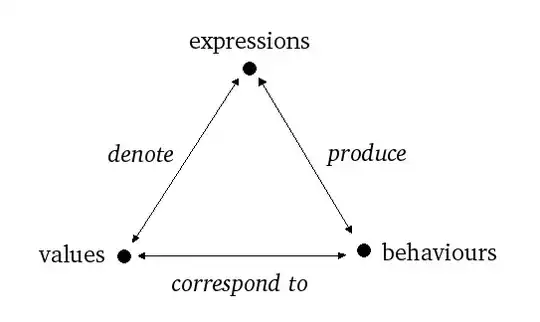

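I believe I'm calculating the error incorrectly. The formula is the mean squared error over the N training points:

$$E = \frac{1}{N}\sum_{n=1}^{N}\left(t_n - y(x_n)\right)^2$$

where y is the degree-M polynomial:

$$y(x) = \sum_{m=0}^{M} w_m x^m = w_0 + w_1 x + \dots + w_M x^M$$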
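Translated directly into NumPy, the error and the polynomial y(x) could be computed as in this minimal sketch.

It assumes `w` is ordered lowest degree first, so `w[0]` is the constant term. That is the order `np.linalg.lstsq` returns when the data matrix has columns x^0, x^1, ..., x^M. The names `poly_predict` and `mean_squared_error` are hypothetical helpers, not part of the original code.

```python
import numpy as np

def poly_predict(w, x):
    """Evaluate y(x) = w[0] + w[1]*x + ... + w[M]*x**M at every x.

    Hypothetical helper; assumes w is ordered lowest degree first.
    """
    x = np.asarray(x, dtype=float)
    y = np.zeros_like(x)
    for m, w_m in enumerate(w):
        y += w_m * x ** m  # add the w_m * x**m term for every input at once
    return y

def mean_squared_error(t, y):
    """(1/N) * sum over n of (t_n - y(x_n))**2 (hypothetical helper)."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    return np.mean((t - y) ** 2)

# Tiny example: y(x) = 1 + 2x evaluated at x = 0, 1, 2
w = [1.0, 2.0]
x = [0.0, 1.0, 2.0]
t = [1.0, 3.0, 6.0]
y = poly_predict(w, x)          # array([1., 3., 5.])
err = mean_squared_error(t, y)  # (0 + 0 + 1) / 3
print(y, err)
```

The key point is that y(x_n) is a vector with one entry per training point. Only after that is the squared difference averaged.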

I am calculating this in the function `fitPoly(M)`, on the `errTrain = ...` line. I believe I am calculating y(x_n) incorrectly (in `getY`), but I don't know what else to do.
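The error needs one prediction y(x_n) for each of the N training points, not a single number.

One way to get all N predictions at once is to multiply the data matrix used for the fit by `w`. Row n of that matrix is [1, x_n, x_n^2, ..., x_n^M], so row n of the product is y(x_n). This sketch rests on a few assumptions:

- `w` is used exactly as `np.linalg.lstsq` returns it, before any `w[::-1]` reversal.
- `np.vander(x, M + 1, increasing=True)` stands in for `dataMatrix`.
- `training_error` is a hypothetical name.

```python
import numpy as np

def training_error(x, t, M):
    """Fit a degree-M polynomial by least squares; return (w, mean squared error).

    Hypothetical helper. w is ordered lowest degree first:
    y(x) = w[0] + w[1]*x + ... + w[M]*x**M.
    """
    x = np.asarray(x, dtype=float)
    t = np.asarray(t, dtype=float)
    # Row n is [1, x_n, x_n**2, ..., x_n**M], the same layout as dataMatrix
    Z = np.vander(x, M + 1, increasing=True)
    w, _, _, _ = np.linalg.lstsq(Z, t, rcond=None)
    y = Z @ w  # one prediction y(x_n) per training point, shape (N,)
    err = np.mean((t - y) ** 2)
    return w, err

# Points that lie exactly on y = 1 + 2x, so a degree-1 fit has (near) zero error
x = [0.0, 0.5, 1.0, 1.5]
t = [1.0, 2.0, 3.0, 4.0]
w, err = training_error(x, t, 1)
print(w, err)
```

Suppose the weights have been reversed to highest degree first for plotting. Then `np.polyval(w[::-1], x)` gives the same predictions as `Z @ w`.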

Below is a minimal, complete, and verifiable example:

```python
import numpy as np
import matplotlib.pyplot as plt

dataTrain = [[2.362761180904257019e-01, -4.108125266714775847e+00],
             [4.324296163702689988e-01, -9.869308732049049127e+00],
             [6.023323504115264404e-01, -6.684279243433971729e+00],
             [3.305079685397107614e-01, -7.897042003779912278e+00],
             [9.952423271981121200e-01, 3.710086310489402628e+00],
             [8.308127402955634011e-02, 1.828266768673480147e+00],
             [1.855495407116576345e-01, 1.039713135916495501e+00],
             [7.088332047815845138e-01, -9.783208407540947560e-01],
             [9.475723071629885697e-01, 1.137746192425550085e+01],
             [2.343475721257285427e-01, 3.098019704040922750e+00],
             [9.338350584099475160e-02, 2.316408265530458976e+00],
             [2.107903139601833287e-01, -1.550451474833406396e+00],
             [9.509966727520677843e-01, 9.295029459100994984e+00],
             [7.164931165416982273e-01, 1.041025972594300075e+00],
             [2.965557300301902011e-03, -1.060607693351102121e+01]]

def strip(L, xt):
    ret = []
    for i in L:
        ret.append(i[xt])
    return ret

x1 = strip(dataTrain, 0)
y1 = strip(dataTrain, 1)

# HELP HERE
def getY(m, w, D):
    y = w[0]
    y += np.sum(w[1:] * D[:m])
    return y
# HELP ABOVE

def dataMatrix(X, M):
    Z = []
    for x in range(len(X)):
        row = []
        for m in range(M + 1):
            row.append(X[x][0] ** m)
        Z.append(row)
    return Z

def fitPoly(M):
    t = []
    for i in dataTrain:
        t.append(i[1])
    w, _, _, _ = np.linalg.lstsq(dataMatrix(dataTrain, M), t)
    w = w[::-1]
    errTrain = np.sum(np.subtract(t, getY(M, w, x1)) ** 2) / len(x1)
    print('errTrain: %s' % (errTrain))
    return [w, errTrain]

#fitPoly(8)

def plotPoly(w):
    plt.ylim(-15, 15)
    x, y = zip(*dataTrain)
    plt.plot(x, y, 'bo')
    xw = np.arange(0, 1, .001)
    yw = np.polyval(w, xw)
    plt.plot(xw, yw, 'r')

#plotPoly(fitPoly(3)[0])

def bestPoly():
    m = 0
    plt.figure(1)
    plt.xlim(0, 16)
    plt.ylim(0, 250)
    plt.xlabel('M')
    plt.ylabel('Error')
    plt.suptitle('Question 3: training and Test error')
    while m < 16:
        plt.figure(0)
        plt.subplot(4, 4, m + 1)
        plotPoly(fitPoly(m)[0])
        plt.figure(1)
        plt.plot(fitPoly(m)[1])
        #plt.plot(fitPoly(m)[2])
        m += 1
    plt.figure(3)
    plt.xlabel('t')
    plt.ylabel('x')
    plt.suptitle('Question 3: best-fitting polynomial (degree = 8)')
    plotPoly(fitPoly(8)[0])
    print('Best M: %d\nBest w: %s\nTraining error: %s' % (8, fitPoly(8)[0], fitPoly(8)[1]))

bestPoly()
```
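As posted, `getY` returns a single number. For M ≥ 1, after `w = w[::-1]`, `w[0]` is the highest-degree coefficient rather than the constant term. Also, `w[1:] * D[:m]` multiplies the remaining coefficients by the first `M` training inputs rather than by powers of one input. Every t_n is therefore compared with the same constant.

Here is a minimal sketch of a `fitPoly` that skips `getY` and evaluates the fitted polynomial at every training input with `np.polyval`. That function expects the highest-degree-first order the script already produces for `plotPoly`. A few assumptions:

- It swaps `np.vander(..., increasing=True)` in for `dataMatrix`.
- It passes `rcond=None` to `np.linalg.lstsq`. This silences the FutureWarning that NumPy 1.x releases from 1.14 on emit when `rcond` is omitted.
- The rest of the script is assumed unchanged.

```python
import numpy as np

# Training data from the question: column 0 is x, column 1 is the target t
dataTrain = [[2.362761180904257019e-01, -4.108125266714775847e+00],
             [4.324296163702689988e-01, -9.869308732049049127e+00],
             [6.023323504115264404e-01, -6.684279243433971729e+00],
             [3.305079685397107614e-01, -7.897042003779912278e+00],
             [9.952423271981121200e-01, 3.710086310489402628e+00],
             [8.308127402955634011e-02, 1.828266768673480147e+00],
             [1.855495407116576345e-01, 1.039713135916495501e+00],
             [7.088332047815845138e-01, -9.783208407540947560e-01],
             [9.475723071629885697e-01, 1.137746192425550085e+01],
             [2.343475721257285427e-01, 3.098019704040922750e+00],
             [9.338350584099475160e-02, 2.316408265530458976e+00],
             [2.107903139601833287e-01, -1.550451474833406396e+00],
             [9.509966727520677843e-01, 9.295029459100994984e+00],
             [7.164931165416982273e-01, 1.041025972594300075e+00],
             [2.965557300301902011e-03, -1.060607693351102121e+01]]

x1 = np.array([row[0] for row in dataTrain])
t = np.array([row[1] for row in dataTrain])

def fitPoly(M):
    # Columns x**0, x**1, ..., x**M (same layout as the question's dataMatrix)
    Z = np.vander(x1, M + 1, increasing=True)
    w, _, _, _ = np.linalg.lstsq(Z, t, rcond=None)
    w = w[::-1]            # highest degree first, the order np.polyval expects
    y = np.polyval(w, x1)  # y(x_n) for every training input, shape (N,)
    errTrain = np.sum((t - y) ** 2) / len(x1)
    print('errTrain: %s' % errTrain)
    return [w, errTrain]

# Print the training error for a few polynomial degrees
for M in range(4):
    fitPoly(M)
```

Because the fits are nested least-squares problems, the training error computed this way should not increase as M grows. A degree-0 fit reproduces the variance of the targets.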