Are there any hidden gotchas here? Even popular ReLU is max(0,x) where we will allow the maximum value to pass and clip the negative as zero. What is the problem if we allow both positive and negative values? Or why are we clipping the negative values to zero in ReLU.

3 Answers

Consecutive layers mean superposition in the functional sense:

x -> L1(x) -> L2(L1(x)) -> ...

For an input x it produces L2(L1(x)) or a composition of L1 and L2.

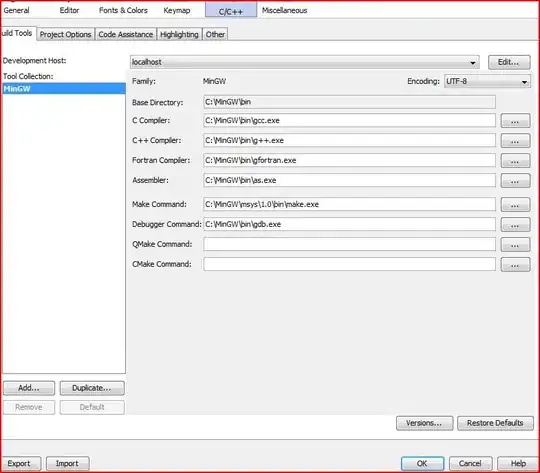

The composition of two linear layers is effectively one big linear layer, which doesn't make the model any better. Any non-linearity applied to the layers, even as simple one as ReLu, splits the space and, thus, allows to learn complex functions. You can see this effect of 4 ReLu's on the picture:

Of course, you can have just one non-linearity in the output layer. But this basically means the simplest neural network with one hidden layer and one activation function. It's true that it can approximate any function, but using exponentially many neurons in the hidden layer. Adding depth to the network allows you to get very complex representations and have relatively small number of neurons. This is where deep learning comes into play.

- 52,561

- 27

- 155

- 209

Negative values are allowed. There are special cases of RELu when you can use negative values.

"Clipping" in classic RELu is because of nonlinearity requirement. Without "clipping" classic RELu will be linear unit, such unit will be unable "catch" nonlinear dependency between input and output.

- 835

- 12

- 18

Activation functions are the ones that make your network "non-linear".

To illustrate what I mean, consider this example where there is one input layer, 2 hidden layers, and 1 output layer (with a full set of weights and biases). The output at the end of the network, if there are no activation functions will be:

y = w1x+b1 + w2x+b2 = (w1+w2)x+ (b1+b2) = Wx + B

As you can see, without activation functions, the network simply becomes linear aka output depends linearly on the input features.

Whereas, say you have an activation function in the middle, and consider for simplicity sake that its a sigmoid function and not a ReLU. Think how the above equation would be. It would definitely be non-linear and definitely depend on the various combinations of the inputs.

Now for the case of why we use ReLUs, simply put, it is one hyperparameter that helps the output converge much quickly. The reasoning is very interesting and I'm afraid is out of the scope of this question. Do read up on it though.

- 722

- 6

- 18

-

I Understand why activations are needed and Why ReLU is helpful in converging. What I am asking is to introduce nonlinearity only at output layer ? As you said "without activation functions, the network simply becomes linear aka output depends linearly on the input features". I feel its the same case when you use ReLU – Sumith Oct 10 '17 at 08:23

-

Adding non linearity only to the output layer makes it behave as if all the hidden layers are compressed to one layer. You can do it, there's nothing "wrong" in doing that, but just expect the accuracy to be way less. I guess why you are confused about ReLUs is because you are thinking of it in terms of a forward pass only. Think how it behaves in the backward pass and how it moves around the gradients and then maybe you will get a better intuition of why we need non-linearity in every layer for better learning. – Vj- Oct 10 '17 at 09:23

-

Correct me if I am wrong, While backprop gradient vector will be multiplied with derivative of activation function the derviative of ReLU is 1, Even if I do not use ReLU then also derivative will be 1 only.So it wont be an issue while backprop I guess. – Sumith Oct 10 '17 at 09:45

-

Ah, I apparently misunderstood your question. I believe [this CrossValidated question](https://stats.stackexchange.com/questions/141960/deep-neural-nets-relus-removing-non-linearity) answers your question exactly. – Vj- Oct 10 '17 at 11:26