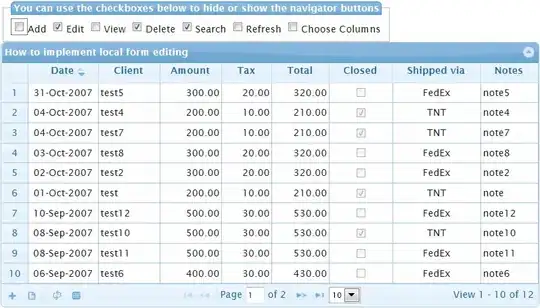

Given the install date of users, I would like to get the Firebase (1) Event Occurrences and (2) Event Distinct Users' Count for all our 200+ Firebase events on Day0 to Day30. I simulated the output table below (for D0-D30) in a screenshot, but the code is only for Day0-Day7.

(1) Event Occurrences

SELECT

event.name as event_name,

COUNT(CASE WHEN _TABLE_SUFFIX >= '20170801' AND _TABLE_SUFFIX < '20170802' THEN event_count END) AS D0_USERS,

COUNT(CASE WHEN _TABLE_SUFFIX >= '20170802' AND _TABLE_SUFFIX < '20170803' THEN event_count END) AS D1_USERS,

COUNT(CASE WHEN _TABLE_SUFFIX >= '20170803' AND _TABLE_SUFFIX < '20170804' THEN event_count END) AS D2_USERS,

COUNT(CASE WHEN _TABLE_SUFFIX >= '20170804' AND _TABLE_SUFFIX < '20170805' THEN event_count END) AS D3_USERS,

COUNT(CASE WHEN _TABLE_SUFFIX >= '20170805' AND _TABLE_SUFFIX < '20170806' THEN event_count END) AS D4_USERS,

COUNT(CASE WHEN _TABLE_SUFFIX >= '20170806' AND _TABLE_SUFFIX < '20170807' THEN event_count END) AS D5_USERS,

COUNT(CASE WHEN _TABLE_SUFFIX >= '20170807' AND _TABLE_SUFFIX < '20170808' THEN event_count END) AS D6_USERS,

COUNT(CASE WHEN _TABLE_SUFFIX >= '20170808' AND _TABLE_SUFFIX < '20170809' THEN event_count END) AS D7_USERS

FROM `<<project-id>>.app_events_*`, UNNEST(event_dim) AS event

WHERE

_TABLE_SUFFIX >= '20170801' AND _TABLE_SUFFIX < '20170809' AND

user_dim.first_open_timestamp_micros BETWEEN 1501545600000000 AND 1501632000000000;

and

(2) Event Distinct Users' Count

SELECT

event.name as event_name,

COUNT(DISTINCT CASE WHEN _TABLE_SUFFIX >= '20170801' AND _TABLE_SUFFIX < '20170802' THEN user_dim.app_info.app_instance_id END) AS D0_USERS,

COUNT(DISTINCT CASE WHEN _TABLE_SUFFIX >= '20170802' AND _TABLE_SUFFIX < '20170803' THEN user_dim.app_info.app_instance_id END) AS D1_USERS,

COUNT(DISTINCT CASE WHEN _TABLE_SUFFIX >= '20170803' AND _TABLE_SUFFIX < '20170804' THEN user_dim.app_info.app_instance_id END) AS D2_USERS,

COUNT(DISTINCT CASE WHEN _TABLE_SUFFIX >= '20170804' AND _TABLE_SUFFIX < '20170805' THEN user_dim.app_info.app_instance_id END) AS D3_USERS,

COUNT(DISTINCT CASE WHEN _TABLE_SUFFIX >= '20170805' AND _TABLE_SUFFIX < '20170806' THEN user_dim.app_info.app_instance_id END) AS D4_USERS,

COUNT(DISTINCT CASE WHEN _TABLE_SUFFIX >= '20170806' AND _TABLE_SUFFIX < '20170807' THEN user_dim.app_info.app_instance_id END) AS D5_USERS,

COUNT(DISTINCT CASE WHEN _TABLE_SUFFIX >= '20170807' AND _TABLE_SUFFIX < '20170808' THEN user_dim.app_info.app_instance_id END) AS D6_USERS,

COUNT(DISTINCT CASE WHEN _TABLE_SUFFIX >= '20170808' AND _TABLE_SUFFIX < '20170809' THEN user_dim.app_info.app_instance_id END) AS D7_USERS

FROM `<<project-id>>.app_events_*`, UNNEST(event_dim) AS event

WHERE

_TABLE_SUFFIX >= '20170801' AND _TABLE_SUFFIX < '20170809'

AND user_dim.first_open_timestamp_micros BETWEEN 1501545600000000 AND 1501632000000000

GROUP BY 1;

Questions:

- Is there a more optimised way to write this? For small amount of columns it makes sense (D0-D7), but for D0-D30 I thought there might be a better way. Any suggestions are much appreciated !

Final Answer after Mikhail's feedback:

I combined both queries in one query and created a pivot table thereafter. Remember to select "Standard SQL" in BigQuery editor before execution.

SELECT

event.name AS event_name,

_TABLE_SUFFIX as day,

COUNT(1) as event_occurances,

COUNT(DISTINCT user_dim.app_info.app_instance_id) as event_unique_users

FROM `<<project-id>>.app_events_*`, UNNEST(event_dim) AS event

WHERE

_TABLE_SUFFIX >= '20170801' AND _TABLE_SUFFIX < '20170901' AND

user_dim.first_open_timestamp_micros BETWEEN 1501545600000000 AND 1501632000000000

GROUP BY event_name, day

ORDER BY event_name;

Appendix Notes:

Timestamp Conversion of 1 Aug 2017

- Epoch timestamp: 1501545600

- Timestamp in milliseconds: 1501545600000

Timestamp Conversion of 2 Aug 2017

- Epoch timestamp: 1501632000

- Timestamp in milliseconds: 1501632000000