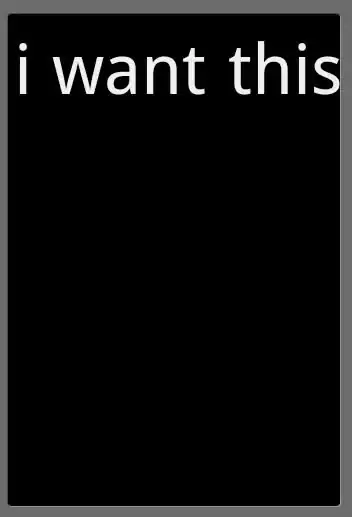

I have the following sample of handwriting taken with three different writing instruments:

Looking at the writing, I can tell that there is a distinct difference between the first two and the last one. My goal is to determine an approximation of the stroke thickness for each letter, allowing me to group them based on being thin or thick.
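
To make the goal concrete, here is a minimal sketch of the kind of per-letter measure I am after; it is not a working solution for my image. It assumes each letter has already been isolated as a binary mask (rows of 0/1, with 1 meaning ink) and uses the rough approximation width ≈ 2 × area / perimeter, which is only reasonable for strokes that are much longer than they are wide. The names `estimate_stroke_width` and `make_bar` are made up for illustration.

```python
def estimate_stroke_width(mask):
    """Rough stroke width of a binary mask (list of rows of 0/1, 1 = ink).

    Approximation: a long stroke of length L and width w has
    area ~ L * w and perimeter ~ 2 * L, so w ~ 2 * area / perimeter.
    """
    if not mask or not mask[0]:
        return 0.0
    rows, cols = len(mask), len(mask[0])
    area = 0
    perimeter = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            area += 1
            # Count every pixel edge that touches background or the image border.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                outside = not (0 <= nr < rows and 0 <= nc < cols)
                if outside or not mask[nr][nc]:
                    perimeter += 1
    if perimeter == 0:
        return 0.0
    return 2.0 * area / perimeter


def make_bar(length, width):
    """Synthetic straight stroke: a filled rectangle of ink pixels."""
    return [[1] * length for _ in range(width)]


# Two synthetic strokes of the same length but different thickness.
thin = estimate_stroke_width(make_bar(40, 2))
thick = estimate_stroke_width(make_bar(40, 6))
print(thin, thick)
```

On these synthetic bars the estimate comes out slightly below the true width, and the pixel-edge perimeter inflates for diagonal or curved strokes, so I would only expect it to separate thin from thick, not to give an exact width.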

So far, I have looked into the stroke width transform (SWT), but I have struggled to apply it to my example.

I am able to preprocess the image so that I am left with just the contours of the text in question. For example, here is "thick" from the last line: