I'm trying to detect 2D objects in an image. Unlike typical eye or face detection, however, the objects I'm trying to detect are not transformed in any way other than being scaled, rotated, or partially occluded (e.g. a certain icon in a computer screenshot).

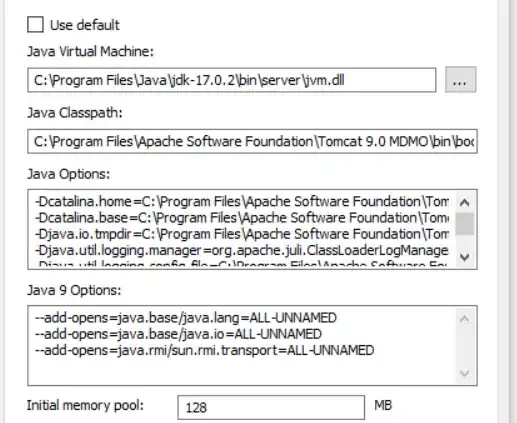

I tried training a Haar cascade with one positive image (the object) and 600 negative images (randomly selected from a background image), but the result was not satisfactory. In particular, opencv_createsamples creates oddly scaled samples (parts are cut off, and some are scaled too large). These are the parameters I used:

    # Create samples
    opencv_createsamples -img positive_images/icon.png -bg negatives.txt \
      -num 1500 -bgcolor 0 -bgthresh 0 -maxxangle 0 -maxyangle 0 -maxzangle 6.28 \
      -maxidev 40 -vec pos.vec -maxscale 2 -w 25 -h 25

    # Train cascade
    opencv_traincascade -data classifier -vec pos.vec -bg negatives.txt \
      -numStages 20 -minHitRate 0.999 -maxFalseAlarmRate 0.5 -numPos 1000 \
      -numNeg 600 -w 25 -h 25 -mode ALL -precalcValBufSize 4096 \
      -precalcIdxBufSize 4096

Since the task seems to be simpler than a general object detection task, I'm wondering if there are better ways of doing this. If not, what are some possible ways to get a better result?

Edit: here are some sample images that I'm using:

Sample backgrounds (2 of 600):

One interesting thing is that I tried both using opencv_createsamples directly (above) and the wrapper provided here (direct link). With opencv_createsamples the result was not satisfactory: it did not detect the sample image, and it classified unrelated objects as positives. With the wrapper the result was a bit better, but it only detects the object when there is a white background. I'm not sure why the wrapper would have that effect; it may be that my parameters, or the way I'm using the tools, are incorrect.

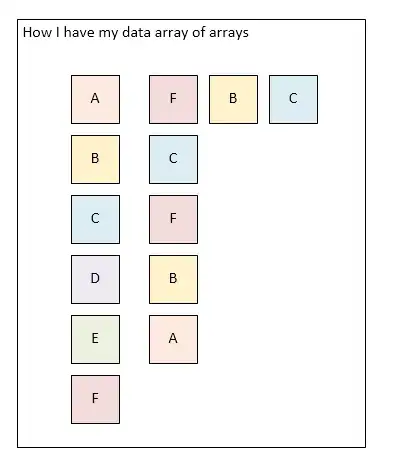

Directory structure:

- positive_images/
  - icon.png
- negative_images/
  - 600 images
- classifier/
- positives.txt
- negatives.txt
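For reference, this is roughly how a background list such as negatives.txt can be produced from negative_images/. It is only a minimal sketch: the function name `write_background_list` and the set of image extensions are my own assumptions, and it assumes the OpenCV tools are run from the directory that contains negatives.txt, so that the relative paths resolve.

```python
import os
from pathlib import Path

# Assumed set of image extensions; adjust to whatever the negatives actually use.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp"}


def write_background_list(image_dir, list_path):
    """Write one image path per line into list_path.

    Paths are written relative to the directory containing list_path,
    with forward slashes, sorted by name. Returns the written lines.
    """
    image_dir = Path(image_dir)
    list_path = Path(list_path)
    base = list_path.parent

    # Keep only regular files that look like images.
    images = sorted(
        p for p in image_dir.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )

    # Express each path relative to the list file's directory.
    lines = [Path(os.path.relpath(p, base)).as_posix() for p in images]

    # One path per line; an empty directory yields an empty file.
    list_path.write_text("".join(line + "\n" for line in lines))
    return lines
```

With the directory structure above, calling `write_background_list("negative_images", "negatives.txt")` from the project root would write entries of the form `negative_images/<file name>`, one per line.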