First of all, get music_tagger_cnn.py and put it on your project path. After that you can build your model:

from music_tagger_cnn import *

from keras.optimizers import SGD  # needed for the SGD optimizer used further down

input_tensor = Input(shape=(1, 18, 119))

model = MusicTaggerCNN(input_tensor=input_tensor, include_top=False, weights='msd')

You can change the shape of the input tensor to whatever dimensions you need.

I usually use Theano dim ordering with TensorFlow as the backend, which is why I set the following (it should be set before the model is built):

from keras import backend as K

K.set_image_dim_ordering('th')

With Theano dim ordering you have to take into account that the order of each sample's dimensions has to be changed:

X_train = X_train.transpose(0, 3, 2, 1)

X_val = X_val.transpose(0, 3, 2, 1)
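To make the effect of that transpose concrete, here is a small NumPy sketch. The array layout (samples, time, freq, channels) and the batch size of 4 are assumptions for illustration only; your own arrays may be laid out differently.

```python
import numpy as np

# Hypothetical batch: 4 samples stored as (samples, time, freq, channels).
X = np.zeros((4, 119, 18, 1), dtype="float32")

# transpose(0, 3, 2, 1) keeps the sample axis and reverses the other three,
# giving (samples, channels, freq, time), i.e. channels first.
X_th = X.transpose(0, 3, 2, 1)

print(X.shape)
print(X_th.shape)
```

Note that this permutation also swaps the two spatial axes. If your arrays were (samples, freq, time, channels) instead, `transpose(0, 3, 1, 2)` would be the permutation that only moves the channel axis to the front.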

After that, freeze the layers that you don't want to be updated:

for layer in model.layers:
    layer.trainable = False

Now you can set your own output, for example:

last_layer = model.get_layer('pool3').output

out = Flatten()(last_layer)

out = Dense(128, activation='relu', name='fc2')(out)

out = Dropout(0.5)(out)

out = Dense(n_classes, activation='softmax', name='fc3')(out)

model = Model(input=model.input, output=out)

After that you should be able to train it with:

sgd = SGD(lr=0.01, momentum=0, decay=0.002, nesterov=True)

model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

history = model.fit(X_train, labels_train,
                    validation_data=(X_val, labels_val), nb_epoch=100, batch_size=5)

Note that the labels should be one-hot encoded.
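In case the one-hot format is unclear, here is a minimal NumPy sketch. The helper name `one_hot` and the example labels are made up for illustration; Keras 1.x also ships `keras.utils.np_utils.to_categorical`, which should give the same kind of array.

```python
import numpy as np

def one_hot(labels, n_classes):
    """Return a (len(labels), n_classes) array with a single 1 per row."""
    labels = np.asarray(labels, dtype="int64")
    encoded = np.zeros((labels.shape[0], n_classes), dtype="float32")
    # Set the column given by each label to 1 in the matching row.
    encoded[np.arange(labels.shape[0]), labels] = 1.0
    return encoded

# Hypothetical integer class labels for four samples and three classes.
labels_train = one_hot([0, 2, 1, 2], n_classes=3)
print(labels_train)
```

The number of columns has to match `n_classes` in the final `Dense` layer, otherwise `categorical_crossentropy` will complain about mismatched shapes.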

I hope this helps!

Update: Posting code so I can get help debugging these lines and prevent a crash.

input_tensor = Input(shape=(3, 640, 480))

model = MusicTaggerCNN(input_tensor=input_tensor, include_top=False, weights='msd')

for layer in model.layers:
    layer.trainable = False

last_layer = model.get_layer('pool3').output

out = Flatten()(last_layer)

out = Dense(128, activation='relu', name='fc2')(out)

out = Dropout(0.5)(out)

out = Dense(n_classes, activation='softmax', name='fc3')(out)

model = Model(input=model.input, output=out)

sgd = SGD(lr=0.01, momentum=0, decay=0.002, nesterov=True)

model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

history = model.fit(X_train, labels_train,
                    validation_data=(X_test, Y_test), nb_epoch=100, batch_size=5)
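A side note on the `decay` argument passed to `SGD` above: as far as I recall from the Keras 1.x source, the learning rate is rescaled on every batch update as lr / (1 + decay * iterations). The sketch below just evaluates that formula in plain Python; `decayed_lr` is a made-up helper, not a Keras function.

```python
def decayed_lr(lr, decay, iterations):
    """Effective learning rate after `iterations` batch updates,
    assuming the time-based schedule lr / (1 + decay * iterations)."""
    return lr / (1.0 + decay * iterations)

# Same lr and decay as in the snippets above.
for iterations in (0, 500, 5000):
    print(iterations, decayed_lr(0.01, 0.002, iterations))
```

With batch_size=5, the number of updates per epoch is roughly the number of training samples divided by 5, so on a large dataset the learning rate shrinks quickly. If training stalls early, a smaller decay may be worth trying.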

EDIT #2

# -*- coding: utf-8 -*-
'''MusicTaggerCNN model for Keras.

# Reference:

- [Automatic tagging using deep convolutional neural networks](https://arxiv.org/abs/1606.00298)
- [Music-auto_tagging-keras](https://github.com/keunwoochoi/music-auto_tagging-keras)

'''
from __future__ import print_function
from __future__ import absolute_import

from keras import backend as K
from keras.layers import Input, Dense, Dropout, Flatten
from keras.models import Model
from keras.layers.convolutional import Convolution2D
from keras.layers.convolutional import MaxPooling2D, ZeroPadding2D
from keras.layers.normalization import BatchNormalization
from keras.layers.advanced_activations import ELU
from keras.utils.data_utils import get_file

TH_WEIGHTS_PATH = 'https://github.com/keunwoochoi/music-auto_tagging-keras/blob/master/data/music_tagger_cnn_weights_theano.h5'
TF_WEIGHTS_PATH = 'https://github.com/keunwoochoi/music-auto_tagging-keras/blob/master/data/music_tagger_cnn_weights_tensorflow.h5'

def MusicTaggerCNN(weights='msd', input_tensor=None,
                   include_top=True):
    '''Instantiate the MusicTaggerCNN architecture,
    optionally loading weights pre-trained
    on Million Song Dataset. Note that when using TensorFlow,
    for best performance you should set
    `image_dim_ordering="tf"` in your Keras config
    at ~/.keras/keras.json.

    The model and the weights are compatible with both
    TensorFlow and Theano. The dimension ordering
    convention used by the model is the one
    specified in your Keras config file.

    For preparing mel-spectrogram input, see
    `audio_conv_utils.py` in [applications](https://github.com/fchollet/keras/tree/master/keras/applications).
    You will need to install [Librosa](http://librosa.github.io/librosa/)
    to use it.

    # Arguments
        weights: one of `None` (random initialization)
            or "msd" (pre-training on Million Song Dataset).
        input_tensor: optional Keras tensor (i.e. output of `layers.Input()`)
            to use as image input for the model.
        include_top: whether to include the 1 fully-connected
            layer (output layer) at the top of the network.
            If False, the network outputs 256-dim features.

    # Returns
        A Keras model instance.
    '''

    if weights not in {'msd', None}:
        raise ValueError('The `weights` argument should be either '
                         '`None` (random initialization) or `msd` '
                         '(pre-training on Million Song Dataset).')

    # Determine proper input shape
    if K.image_dim_ordering() == 'th':
        input_shape = (3, 640, 480)
    else:
        # 'tf' ordering puts the channel axis last
        input_shape = (640, 480, 3)

    if input_tensor is None:
        melgram_input = Input(shape=input_shape)
    else:
        if not K.is_keras_tensor(input_tensor):
            melgram_input = Input(tensor=input_tensor, shape=input_shape)
        else:
            melgram_input = input_tensor

    # Determine input axis
    if K.image_dim_ordering() == 'th':
        channel_axis = 1
        freq_axis = 2
        time_axis = 3
    else:
        channel_axis = 3
        freq_axis = 1
        time_axis = 2

    # Input block
    x = BatchNormalization(axis=freq_axis, name='bn_0_freq')(melgram_input)

    # Conv block 1
    x = Convolution2D(64, 3, 3, border_mode='same', name='conv1')(x)
    x = BatchNormalization(axis=channel_axis, mode=0, name='bn1')(x)
    x = ELU()(x)
    x = MaxPooling2D(pool_size=(2, 4), name='pool1')(x)

    # Conv block 2
    x = Convolution2D(128, 3, 3, border_mode='same', name='conv2')(x)
    x = BatchNormalization(axis=channel_axis, mode=0, name='bn2')(x)
    x = ELU()(x)
    x = MaxPooling2D(pool_size=(2, 4), name='pool2')(x)

    # Conv block 3
    x = Convolution2D(128, 3, 3, border_mode='same', name='conv3')(x)
    x = BatchNormalization(axis=channel_axis, mode=0, name='bn3')(x)
    x = ELU()(x)
    x = MaxPooling2D(pool_size=(2, 4), name='pool3')(x)

    # Output
    x = Flatten()(x)
    if include_top:
        x = Dense(50, activation='sigmoid', name='output')(x)

    # Create model
    model = Model(melgram_input, x)
    if weights is None:
        return model
    else:
        # Load weights
        if K.image_dim_ordering() == 'tf':
            raise RuntimeError("Please set image_dim_ordering == 'th'. "
                               "You can set it at ~/.keras/keras.json")
        model.load_weights('data/music_tagger_cnn_weights_%s.h5' % K._BACKEND,
                           by_name=True)
        return model
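When changing the input shape it helps to check what comes out of 'pool3' before `Flatten`. The sketch below does that arithmetic in plain Python. It assumes the three conv blocks in the listing above: 'same' convolutions that keep the spatial size, and `MaxPooling2D(pool_size=(2, 4))` with its default 'valid' mode, which floors the division. The helper names are made up for illustration.

```python
def pooled_shape(freq, time, pool_size=(2, 4), n_blocks=3, n_filters=128):
    """Shape (channels, freq, time) after n_blocks of (2, 4) max pooling."""
    for _ in range(n_blocks):
        freq //= pool_size[0]   # 'valid' pooling drops the remainder
        time //= pool_size[1]
    return n_filters, freq, time

def flattened_size(shape):
    """Number of features produced by Flatten for the given shape."""
    size = 1
    for dim in shape:
        size *= dim
    return size

# The two input sizes used in this thread (freq, time), channels first.
for freq, time in ((18, 119), (640, 480)):
    shape = pooled_shape(freq, time)
    print((freq, time), shape, flattened_size(shape))
```

If either spatial dimension reaches 0 the model cannot be built, and a very large flattened size means the first `Dense` layer after `Flatten` gets a correspondingly large weight matrix.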

EDIT #3

I tried the Keras example that uses MusicTaggerCRNN as a feature extractor for the melgrams. Then I trained a simple NN with two Dense layers and a binary output. The samples in my example don't apply to your case, but it is also a binary classifier.

I used keras==1.2.2 and tensorflow-gpu==1.0.0, and it works for me.

Here's the code:

from keras.applications.music_tagger_crnn import MusicTaggerCRNN

from keras.applications.music_tagger_crnn import preprocess_input, decode_predictions

import numpy as np

from keras.layers import Input, Dense

from keras.models import Model

from keras.layers import Dense, Dropout, Flatten

from keras.optimizers import SGD

model = MusicTaggerCRNN(weights='msd', include_top=False)

#Samples simulation

audio_paths_train = ['data/genres/blues/blues.00000.au','data/genres/classical/classical.00000.au','data/genres/classical/classical.00002.au', 'data/genres/blues/blues.00003.au']

audio_paths_test = ['data/genres/blues/blues.00001.au', 'data/genres/classical/classical.00001.au', 'data/genres/blues/blues.00002.au', 'data/genres/classical/classical.00003.au']

labels_train = [0,1,1,0]

labels_test = [0, 1, 0, 1]

melgrams_train = [preprocess_input(audio_path) for audio_path in audio_paths_train]

melgrams_test = [preprocess_input(audio_path) for audio_path in audio_paths_test]

feats_train = [model.predict(np.expand_dims(melgram, axis=0)) for melgram in melgrams_train]

feats_test = [model.predict(np.expand_dims(melgram, axis=0)) for melgram in melgrams_test]

feats_train = np.array(feats_train)

feats_test = np.array(feats_test)

_input = Input(shape=(1,32))

x = Flatten(name='flatten')(_input)

x = Dense(128, activation='relu', name='fc6')(x)

x = Dense(64, activation='relu', name='fc7')(x)

x = Dense(1, activation='sigmoid', name='fc8')(x)  # sigmoid for a single-unit binary output

class_model = Model(_input, x)

sgd = SGD(lr=0.01, momentum=0, decay=0.02, nesterov=True)

class_model.compile(loss='binary_crossentropy', optimizer=sgd, metrics=['accuracy'])

history = class_model.fit(feats_train, labels_train, validation_data=(feats_test, labels_test), nb_epoch=100, batch_size=5, class_weight='auto')

print(history.history['acc'])

# Final evaluation of the model

scores = class_model.evaluate(feats_test, labels_test, verbose=0)

print("Accuracy: %.2f%%" % (scores[1] * 100))
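A note on the activation of the single-unit output layer ('fc8'): for a binary output with `binary_crossentropy`, a sigmoid is the usual choice, because softmax normalizes across units and with only one unit it always returns 1. The plain-Python sketch below illustrates this; the two functions are written out by hand here and are not Keras calls.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    shifted = [z - max(logits) for z in logits]
    exps = [math.exp(z) for z in shifted]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(z):
    """Logistic function mapping a single logit to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Whatever the logit, a one-unit softmax outputs 1.0; the sigmoid varies.
for z in (-3.0, 0.0, 3.0):
    print(z, softmax([z]), round(sigmoid(z), 4))
```

With a constant output of 1 the network cannot separate the two classes, so the accuracy would simply reflect the share of positive labels.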

Class # 2

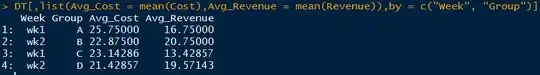

Here is the graph of accuracy vs epoch:
