I have the following sklearn clusters obtained using affinity propagation.

import sklearn.cluster

import numpy as np

sims = np.array([[0, 17, 10, 32, 32], [18, 0, 6, 20, 15], [10, 8, 0, 20, 21], [30, 16, 20, 0, 17], [30, 15, 21, 17, 0]])

affprop = sklearn.cluster.AffinityPropagation(affinity="precomputed", damping=0.5)

affprop.fit(sims)

cluster_centers_indices = affprop.cluster_centers_indices_

labels = affprop.labels_

# number of clusters

n_clusters_ = len(cluster_centers_indices)

Now I want to plot the resulting clusters. I am new to sklearn. Please suggest a suitable approach for plotting the clusters in Python. Is it possible to do this with pandas DataFrames?

EDIT:

I used the code from the sklearn example directly, as pointed out by @MohammedKashif:

import sklearn.cluster

import numpy as np

sims = np.array([[0, 17, 10, 32, 32], [18, 0, 6, 20, 15], [10, 8, 0, 20, 21], [30, 16, 20, 0, 17], [30, 15, 21, 17, 0]])

affprop = sklearn.cluster.AffinityPropagation(affinity="precomputed", damping=0.5)

affprop.fit(sims)

cluster_centers_indices = affprop.cluster_centers_indices_

print(cluster_centers_indices)

labels = affprop.labels_

n_clusters_ = len(cluster_centers_indices)

print(n_clusters_)

import matplotlib.pyplot as plt

from itertools import cycle

plt.close('all')

plt.figure(1)

plt.clf()

colors = cycle('bgrcmykbgrcmykbgrcmykbgrcmyk')

for k, col in zip(range(n_clusters_), colors):

    class_members = labels == k

    cluster_center = sims[cluster_centers_indices[k]]

    plt.plot(sims[class_members, 0], sims[class_members, 1], col + '.')

    plt.plot(cluster_center[0], cluster_center[1], 'o', markerfacecolor=col,
             markeredgecolor='k', markersize=14)

    for x in sims[class_members]:

        plt.plot([cluster_center[0], x[0]], [cluster_center[1], x[1]], col)

plt.title('Estimated number of clusters: %d' % n_clusters_)

plt.show()

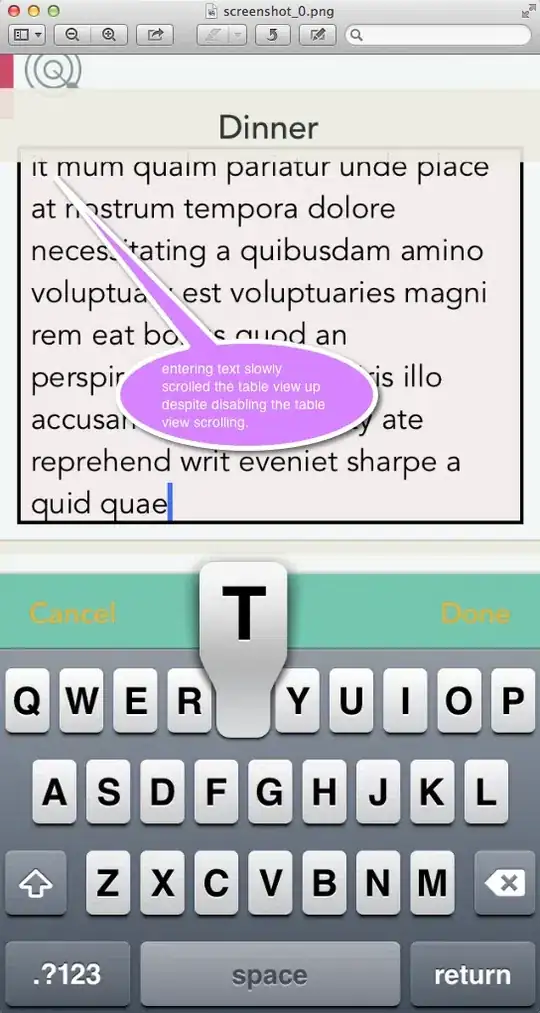

However, the output I get is a bit weird, as shown above: the second cluster's point (green) lies on the blue line, so I don't think it should form a separate cluster; it should belong to the blue cluster as well. Please let me know if I have made any mistakes in the code.

EDIT 2:

As pointed out by σηγ, I added:

from sklearn.manifold import SpectralEmbedding

se = SpectralEmbedding(n_components=2, affinity='precomputed')

X = se.fit_transform(sims)

print(X)

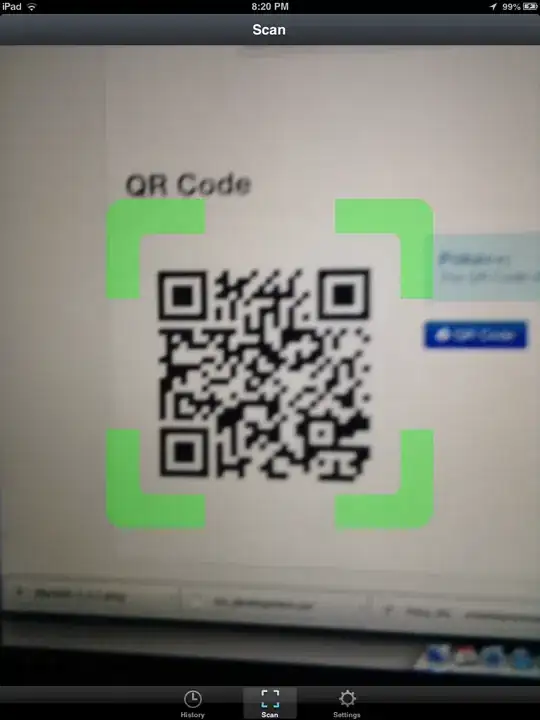
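For completeness, here is a minimal sketch of how the embedded coordinates could be plotted together with the affinity propagation labels. The symmetrization step, the `random_state=0` values, and the helper name `plot_embedding` are my own assumptions for illustration; they are not taken from the sklearn example.

```python
import numpy as np
from sklearn.cluster import AffinityPropagation
from sklearn.manifold import SpectralEmbedding

sims = np.array([[0, 17, 10, 32, 32],
                 [18, 0, 6, 20, 15],
                 [10, 8, 0, 20, 21],
                 [30, 16, 20, 0, 17],
                 [30, 15, 21, 17, 0]])

# Cluster on the precomputed similarities, as in the code above.
# random_state=0 is an assumption, added only for reproducibility.
affprop = AffinityPropagation(affinity="precomputed", damping=0.5, random_state=0)
labels = affprop.fit(sims).labels_

# Assumption: average the matrix with its transpose so the embedding
# receives a symmetric affinity matrix (sims is only nearly symmetric).
sym = (sims + sims.T) / 2.0

# Embed the 5 items into 2-D coordinates that can actually be plotted.
se = SpectralEmbedding(n_components=2, affinity="precomputed", random_state=0)
X = se.fit_transform(sym)


def plot_embedding(X, labels):
    """Hypothetical helper: scatter the 2-D embedding, coloured by label."""
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    ax.scatter(X[:, 0], X[:, 1], c=labels, cmap="viridis", s=80)
    # Annotate each point with its row index in sims.
    for i, (x, y) in enumerate(X):
        ax.annotate(str(i), (x, y), textcoords="offset points", xytext=(5, 5))
    ax.set_title("Spectral embedding coloured by cluster label")
    return fig, ax
```

Calling `fig, ax = plot_embedding(X, labels)` followed by `fig.savefig("clusters.png")` (or `matplotlib.pyplot.show()`) would then draw one point per row of `sims`, instead of treating the first two columns of the similarity matrix as coordinates.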

However, for the array np.array([[0, 17, 10, 32, 32], [0, 17, 10, 32, 32], [0, 17, 10, 32, 33], [0, 17, 10, 32, 32], [0, 17, 10, 32, 32]]) it gave me 3 points in the plot. That confuses me, because all 5 rows represent one point.
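As a sanity check on that array, here is a minimal sketch in plain NumPy (the variable names are just for illustration) that counts its distinct rows. As written, the third row ends in 33 rather than 32, so the check reports two distinct rows rather than one. I don't know whether that alone explains the 3 points.

```python
import numpy as np

arr = np.array([[0, 17, 10, 32, 32],
                [0, 17, 10, 32, 32],
                [0, 17, 10, 32, 33],
                [0, 17, 10, 32, 32],
                [0, 17, 10, 32, 32]])

# Collect the distinct rows and how many times each one occurs.
unique_rows, counts = np.unique(arr, axis=0, return_counts=True)

print(unique_rows)  # the distinct rows, sorted lexicographically
print(counts)       # number of occurrences of each distinct row
```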

Please help me.