I am trying to build a Spark application using the Deeplearning4j library. I have a cluster on which I run my JAR (built with IntelliJ) using the `spark-submit` command. Here's my code:

```scala
package Com.Spark.Examples

import scala.collection.mutable.ListBuffer
import org.apache.spark.SparkConf
import org.apache.spark.SparkContext
import org.canova.api.records.reader.RecordReader
import org.canova.api.records.reader.impl.CSVRecordReader
import org.deeplearning4j.nn.api.OptimizationAlgorithm
import org.deeplearning4j.nn.conf.MultiLayerConfiguration
import org.deeplearning4j.nn.conf.NeuralNetConfiguration
import org.deeplearning4j.nn.conf.layers.DenseLayer
import org.deeplearning4j.nn.conf.layers.OutputLayer
import org.deeplearning4j.nn.multilayer.MultiLayerNetwork
import org.deeplearning4j.nn.weights.WeightInit
import org.deeplearning4j.spark.impl.multilayer.SparkDl4jMultiLayer
import org.nd4j.linalg.lossfunctions.LossFunctions

object FeedForwardNetworkWithSpark {

  def main(args: Array[String]): Unit = {

    val recordReader: RecordReader = new CSVRecordReader(0, ",")

    val conf = new SparkConf()
      .setAppName("FeedForwardNetwork-Iris")
    val sc = new SparkContext(conf)

    val numInputs: Int = 4
    val outputNum = 3
    val iterations = 1

    val multiLayerConfig: MultiLayerConfiguration = new NeuralNetConfiguration.Builder()
      .seed(12345)
      .iterations(iterations)
      .optimizationAlgo(OptimizationAlgorithm.STOCHASTIC_GRADIENT_DESCENT)
      .learningRate(1e-1)
      .l1(0.01).regularization(true).l2(1e-3)
      .list(3)
      .layer(0, new DenseLayer.Builder().nIn(numInputs).nOut(3).activation("tanh").weightInit(WeightInit.XAVIER).build())
      .layer(1, new DenseLayer.Builder().nIn(3).nOut(2).activation("tanh").weightInit(WeightInit.XAVIER).build())
      .layer(2, new OutputLayer.Builder(LossFunctions.LossFunction.MCXENT).weightInit(WeightInit.XAVIER)
        .activation("softmax")
        .nIn(2).nOut(outputNum).build())
      .backprop(true).pretrain(false)
      .build

    val network: MultiLayerNetwork = new MultiLayerNetwork(multiLayerConfig)
    network.init
    network.setUpdater(null)

    val sparkNetwork: SparkDl4jMultiLayer = new SparkDl4jMultiLayer(sc, network)

    val nEpochs: Int = 6
    val listBuffer = new ListBuffer[Array[Float]]()

    (0 until nEpochs).foreach { i =>
      val net: MultiLayerNetwork = sparkNetwork.fit("/user/iris.txt", 4, recordReader)
      listBuffer += net.params.data.asFloat().clone()
    }

    println("Parameters vs. iteration Output: ")

    (0 until listBuffer.size).foreach { i =>
      println(i + "\t" + listBuffer(i).mkString)
    }
  }
}
```

Here is my `build.sbt` file:

```scala
name := "HWApp"

version := "0.1"

scalaVersion := "2.12.3"

libraryDependencies += "org.apache.spark" % "spark-core_2.10" % "1.6.0" % "provided"
libraryDependencies += "org.apache.spark" % "spark-mllib_2.10" % "1.6.0" % "provided"
libraryDependencies += "org.deeplearning4j" % "deeplearning4j-nlp" % "0.4-rc3.8"
libraryDependencies += "org.deeplearning4j" % "dl4j-spark" % "0.4-rc3.8"
libraryDependencies += "org.deeplearning4j" % "deeplearning4j-core" % "0.4-rc3.8"
libraryDependencies += "org.nd4j" % "nd4j-x86" % "0.4-rc3.8" % "test"
libraryDependencies += "org.nd4j" % "nd4j-api" % "0.4-rc3.8"
libraryDependencies += "org.nd4j" % "nd4j-jcublas-7.0" % "0.4-rc3.8"
libraryDependencies += "org.nd4j" % "canova-api" % "0.0.0.14"
```
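For comparison, here is a sketch of a `build.sbt` in which the project's `scalaVersion` agrees with the Scala binary version of the Spark artifacts. Above, `scalaVersion` is 2.12.3 while the Spark artifacts carry the `_2.10` suffix; Spark 1.6.x was published for Scala 2.10 and 2.11, not 2.12. The sketch assumes the cluster runs Spark 1.6.0 built for Scala 2.10, and the patch version `2.10.6` is an assumption. Only the lines that change are shown; the remaining DL4J/ND4J dependencies would stay as they are.

```scala
// build.sbt sketch: assumes the cluster runs Spark 1.6.0 built for Scala 2.10
name := "HWApp"

version := "0.1"

// Must match the Scala binary version of the Spark artifacts (2.10 here)
scalaVersion := "2.10.6"

// %% appends the Scala binary suffix (_2.10) derived from scalaVersion,
// so the artifact suffix cannot drift out of sync with the compiler version
libraryDependencies += "org.apache.spark" %% "spark-core" % "1.6.0" % "provided"
libraryDependencies += "org.apache.spark" %% "spark-mllib" % "1.6.0" % "provided"
```

Using `%%` instead of hard-coding `_2.10` means that changing `scalaVersion` also changes which Spark artifacts are resolved.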

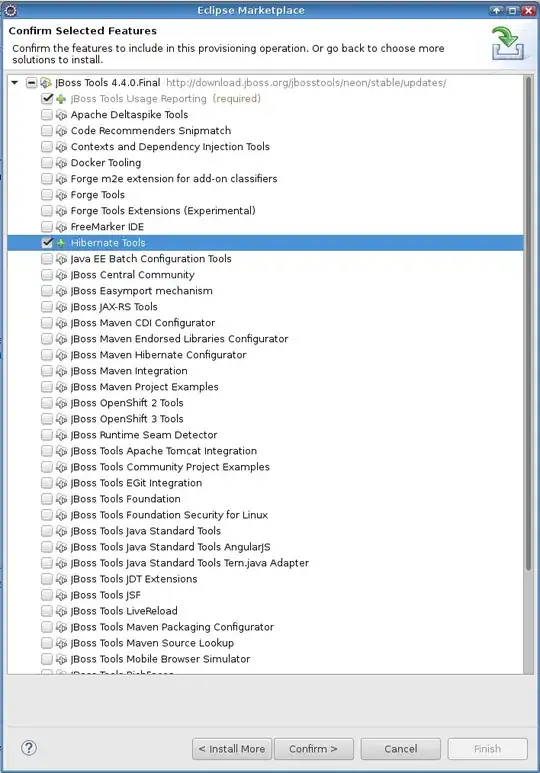

IntelliJ does not show any errors in my code, but when I execute the application on the cluster, I get something like this:

I don't know what it wants from me. Any help would be appreciated. Thanks.