I am running the basic NetworkWordCount program on a YARN cluster through spark-shell. Here is my code snippet:

import org.apache.spark.streaming.{Seconds, StreamingContext}

import org.apache.spark.storage.StorageLevel

val ssc = new StreamingContext(sc, Seconds(60))

val lines = ssc.socketTextStream("172.26.32.34", 9999, StorageLevel.MEMORY_ONLY)

val words = lines.flatMap(_.split(" "))

val wordCounts = words.map(x => (x, 1)).reduceByKey(_ + _)

wordCounts.print()

ssc.start()

ssc.awaitTermination()

The console output and the stats on the Streaming tab are as expected. But when I look at the Jobs tab, two jobs are triggered per 1-minute batch interval. Shouldn't it be one job per interval? Screenshot below:

Now when I look at the completed batches on the Streaming UI, I see exactly one batch per minute. Screenshot below:

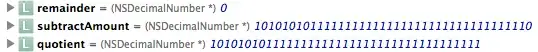

Am I missing something? Also, I noticed that the start job has two stages with the same name that spawn different numbers of tasks, as seen in the image below. What exactly is happening here?