I'm trying to find the shortest path between two points, (0,0) and (1000,-100). The path is to be defined by a 7th order polynomial function:

p(x) = a0 + a1*x + a2*x^2 + ... + a7*x^7

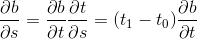

To do so I tried to minimize the function that calculates the total path length from the polynomial function:

length = int from 0 to 1000 of { sqrt(1 + (dp(x)/dx)^2 ) }

Obviously the correct solution will be a linear line, however later on I want to add constraints to the problem. This one was supposed to be a first approach.

The code I implemented was:

import numpy as np

import matplotlib.pyplot as plt

import math

import sys

import scipy

def path_tracer(a,x):

return a[0] + a[1]*x + a[2]*x**2 + a[3]*x**3 + a[4]*x**4 + a[5]*x**5 + a[6]*x**6 + a[7]*x**7

def lof(a):

upper_lim = a[8]

L = lambda x: np.sqrt(1 + (a[1] + 2*a[2]*x + 3*a[3]*x**2 + 4*a[4]*x**3 + 5*a[5]*x**4 + 6*a[6]*x**5 + 7*a[7]*x**6)**2)

length_of_path = scipy.integrate.quad(L,0,upper_lim)

return length_of_path[0]

a = np.array([-4E-11, -.4146,.0003,-7e-8,0,0,0,0,1000]) # [polynomial parameters, x end point]

xx = np.linspace(0,1200,1200)

y = [path_tracer(a,x) for x in xx]

cons = ({'type': 'eq', 'fun': lambda x:path_tracer(a,a[8])+50})

c = scipy.optimize.minimize(lof, a, constraints = cons)

print(c)

When I ran it however the minimization routine fails and returns the initial parameters unchanged. The output is:

fun: 1022.9651540965604

jac: array([ 0.00000000e+00, -1.78130722e+02, -1.17327499e+05,

-7.62458172e+07, 9.42803815e+11, 9.99924786e+14,

9.99999921e+17, 1.00000000e+21, 1.00029755e+00])

message: 'Singular matrix C in LSQ subproblem'

nfev: 11

nit: 1

njev: 1

status: 6

success: False

x: array([ -4.00000000e-11, -4.14600000e-01, 3.00000000e-04,

-7.00000000e-08, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 1.00000000e+03])

Am I doing something wrong or is the routine just not appropriate to solve this kind of problems? If so, is there an alternative in Python?