I've been working on a function to process a large corpus. It uses the doParallel package. Everything worked fine on 50k-100k documents. When I tested on 1M documents, I received the above error.

However, when I go back down to a corpus size that worked previously, I still get the same error. I even tried going as low as 1k documents. The error appears as soon as I hit Enter when calling the function in the console.

Although I have 15 cores, I also tested with as few as two cores and got the same error.

I also tried restarting my session and clearing the environment with `rm(list = ls())`.

Code:

    library(tm)          # tm_map(), removeNumbers, removePunctuation, content_transformer()
    library(doParallel)  # registerDoParallel(); also attaches foreach

    clean_corpus <- function(corpus, n = 1000) { # n is the length of each piece in parallel processing

      # split the corpus into pieces for looping to get around memory issues with transformation
      nr <- length(corpus)
      pieces <- split(corpus, rep(1:ceiling(nr/n), each = n, length.out = nr))
      lenp <- length(pieces)

      rm(corpus) # save memory

      # save pieces to rds files since not enough RAM
      tmpfile <- tempfile()
      for (i in seq_len(lenp)) {
        saveRDS(pieces[[i]],
                paste0(tmpfile, i, ".rds"))
      }

      rm(pieces) # save memory

      # doparallel
      registerDoParallel(cores = 14)
      pieces <- foreach(i = seq_len(lenp)) %dopar% {
        # read this worker's piece back from disk
        piece <- readRDS(paste0(tmpfile, i, ".rds"))
        # spelling update based on lut
        # (stringi_spelling_update and spellingdoc are my own and not shown here)
        piece <- tm_map(piece, function(i) stringi_spelling_update(i, spellingdoc))
        # regular transformations
        piece <- tm_map(piece, removeNumbers)
        piece <- tm_map(piece, content_transformer(removePunctuation), preserve_intra_word_dashes = TRUE)
        piece <- tm_map(piece, content_transformer(function(x, ...)
          qdap::rm_stopwords(x, stopwords = tm::stopwords("english"), separate = FALSE)))
        saveRDS(piece, paste0(tmpfile, i, ".rds"))
        return(1) # hack to get dopar to forget the piece to save memory since now saved to rds
      }

      # combine the pieces back into one corpus
      corpus <- list()
      corpus <- foreach(i = seq_len(lenp)) %do% {
        corpus[[i]] <- readRDS(paste0(tmpfile, i, ".rds"))
      }
      corpus <- do.call(function(...) c(..., recursive = TRUE), corpus)

      return(corpus)

    } # end clean_corpus function
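For reference, the splitting expression used in the function can be checked in isolation. This is only a minimal sketch: the sizes are made up for illustration, and a plain integer vector stands in for the corpus.

```r
# Toy sizes (assumed for illustration): 10 documents, pieces of at most 4.
nr <- 10
n  <- 4

# Same expression as in clean_corpus(): one piece id per document.
ids <- rep(1:ceiling(nr / n), each = n, length.out = nr)
print(ids)
# [1] 1 1 1 1 2 2 2 2 3 3

# split() groups positions by id; an integer vector stands in for the corpus here.
pieces <- split(seq_len(nr), ids)
print(lengths(pieces))  # piece sizes: 4, 4, 2
```

So with `n = 1000` and a 10k corpus, the function should end up with 10 pieces of 1000 documents each.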

Then when I run it, even on a small corpus:

    > mini_cleancorp <- clean_corpus(mini_corpus, n = 1000) # mini_corpus is a 10k corpus
    Error in mcfork() :
      unable to fork, possible reason: Cannot allocate memory

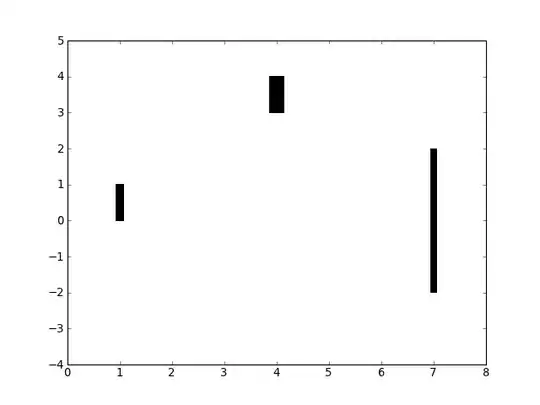

Here are some screenshots of `top` in the terminal, taken just before I run the function.