I'm trying to solve numerically a parabolic type of Partial differential equation(PDE):

u't=u''xx-u(1-u)(0.3-u)

with Neumann boundary conditions and a step like function as an initial condition.

Expected plot result is here

It may be a bit misleading: red - is an initial step-like function and smooth lines are different iteration in time after (like t=50,100, 150..))

The idea is based on the method of lines: make the space (x) discrete, use finite difference methods (second order is written by the symmetric-centered approximation), standard Python scipy odeint(which can use implicit methods if I'm not mistaken)and then to solve the obtained set of ODEs. I think this is pretty standard way of how to deal with such ordinary PDEs(correct me if I'm wrong).

Below are code snippets I'm running. In a nutshell, I want to represent the above continuous equation in a matrix based form: [u]'t=A*[u]+f([u]) where A is a matrix for second order symmetric-cantered approximation of u''xx(after multiplying by [u])

Unfortunately, there is almost nothing qualitatively significant happening with initial conditions after running the code, so I'm confused whether there is a problem with my script or in an approach itself.

Defining the right side u(1-u)(0.3-u):

def f(u, h):

puff = []

for i in u:

puff.append(i*(1. - i)*(0.3 - i))

puff[0] = (h/2.)*puff[0] #this is to include

puff[len(puff)-1] = (h/2.)*puff[len(puff)-1]

return puff

Defining spatial resolution:

h = 10.

x = np.linspace(0., 100., num=h)

This is to define second derivative by the means of finite difference(A*[u]):

diag = [-2]*(len(x))

diag[0] = -h

diag[len(x)-1] = h**2

diag_up = [1]*(len(x)-1)

diag_up[0] = h

diag_down = [1]*(len(x)-1)

diag_down[len(x)-2] = 0

diagonals = [diag, diag_up, diag_down]

A = diags(diagonals, [0, 1, -1]).toarray()

A = A/h**2

Defining step-like initial condition:

init_cond = [0]*(len(x))

for i in range(int(round(0.2*len(init_cond)))):

init_cond[i] = 1

Solving set of ODEs with a time frame defined.

t = np.linspace(0, 100, 200)

def pde(u, t, A, h):

dudt = A.dot(u) - f(u, h)

return dudt

sol = odeint(pde, init_cond, t, args=(A, h))

print(sol[len(sol)-1])

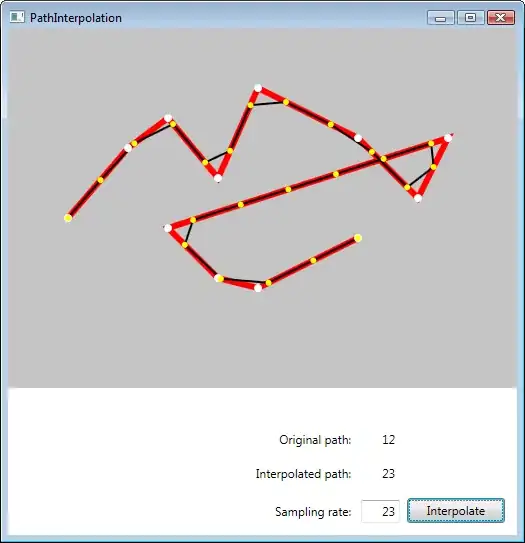

The code may be flawed as I'm rather new to Python. If there is anything I can improve, please let me know. The final result is here

Initial conditions did not change at all. Can anyone give me a hint where I could make a mistake? Thank you in advance!