I am trying to implement a visualization of optimization algorithms in TensorFlow.

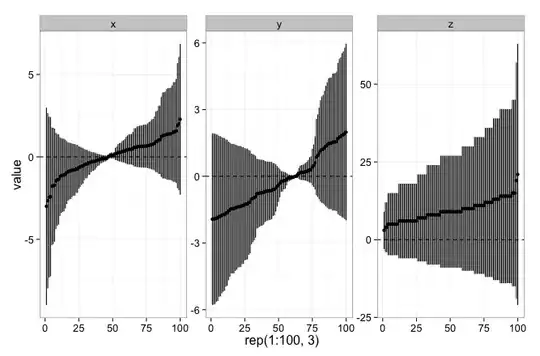

For this, I started with Beale's function:

$$f(x, y) = (1.5 - x + xy)^2 + (2.25 - x + xy^2)^2 + (2.625 - x + xy^3)^2$$

The global minimum is at $f(3, 0.5) = 0$.
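To check values outside TensorFlow, the expression that the `tf.add_n` call in the code below builds can also be written as a plain Python function. This is only a small sketch; the name `beale` is chosen here and is not part of any library:

```python
def beale(x, y):
    """Beale's function f(x, y); `beale` is a hypothetical helper name."""
    return ((1.5 - x + x * y) ** 2
            + (2.25 - x + x * y ** 2) ** 2
            + (2.625 - x + x * y ** 3) ** 2)


print(beale(3.0, 0.5))  # 0.0 at the global minimum
print(beale(3.0, 4.0))  # 39062.953125 at the starting point (3.0, 4.0)
```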

A plot of Beale's function looks like this:

I would like to start at the point (x, y) = (3.0, 4.0).

How do I implement this in TensorFlow using its optimization algorithms?
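TensorFlow's optimizers only need the gradient of `f`, which they derive automatically from the graph. Working it out by hand with the chain rule shows how steep the function is at the starting point (3.0, 4.0). A plain-Python sketch, where the helper name `beale_gradient` is hypothetical:

```python
def beale_gradient(x, y):
    """Partial derivatives (df/dx, df/dy) of Beale's function (hypothetical helper)."""
    # Residuals of the three squared terms
    t1 = 1.5 - x + x * y
    t2 = 2.25 - x + x * y ** 2
    t3 = 2.625 - x + x * y ** 3
    # Chain rule: d(t^2) = 2 * t * dt
    dfdx = 2 * t1 * (y - 1) + 2 * t2 * (y ** 2 - 1) + 2 * t3 * (y ** 3 - 1)
    dfdy = 2 * t1 * x + 2 * t2 * (2 * x * y) + 2 * t3 * (3 * x * y ** 2)
    return dfdx, dfdy


print(beale_gradient(3.0, 4.0))  # (25625.25, 57519.0)
```

Multiplied by the learning rate of 0.1 used in the code below, the first update would move x by about 2562.5 and y by about 5751.9.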

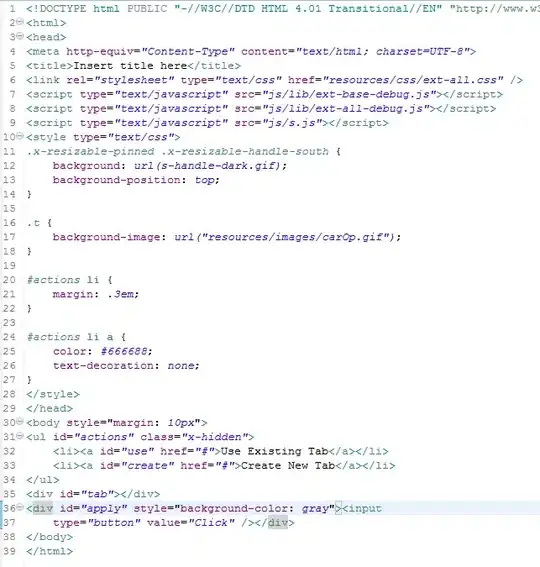

My first attempt looks like this:

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.train, tf.Session)

# Beale's function
x = tf.Variable(3.0, trainable=True)
y = tf.Variable(4.0, trainable=True)
f = tf.add_n([tf.square(tf.add(tf.subtract(1.5, x), tf.multiply(x, y))),
              tf.square(tf.add(tf.subtract(2.25, x), tf.multiply(x, tf.square(y)))),
              tf.square(tf.add(tf.subtract(2.625, x), tf.multiply(x, tf.pow(y, 3))))])

Y = [3, 0.5]  # location of the global minimum (not used below)

loss = f

opt = tf.train.GradientDescentOptimizer(0.1).minimize(loss)

sess = tf.Session()
sess.run(tf.global_variables_initializer())

for i in range(100):
    print(sess.run([x, y, loss]))  # current position and function value
    sess.run(opt)                  # one gradient-descent step
```

Obviously this doesn't work. I guess I have to define a correct loss, but how? To clarify: my problem is that I don't understand how TensorFlow works, and I don't know much Python (I come from Java, C, C++, Delphi, ...). My question is not about how these methods work or which optimization method is best; it is only about how to implement this correctly.
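Each `sess.run(opt)` in the loop above applies one plain gradient-descent update, `var = var - learning_rate * gradient`, to `x` and `y`. The same loop can be sketched in plain Python with a hand-derived gradient in place of TensorFlow's automatic one; it records the (x, y, f) path, which is what a visualization would plot. The helper names `beale_grad` and `descend`, and the comparison learning rate of 1e-5, are assumptions chosen for illustration, not TensorFlow's implementation:

```python
import math


def beale_grad(x, y):
    """Value and hand-derived gradient of Beale's function (hypothetical helper)."""
    # Plain multiplication instead of ** so that a float overflow yields inf
    # rather than raising OverflowError.
    y2 = y * y
    y3 = y2 * y
    t1 = 1.5 - x + x * y
    t2 = 2.25 - x + x * y2
    t3 = 2.625 - x + x * y3
    f = t1 * t1 + t2 * t2 + t3 * t3
    dfdx = 2 * t1 * (y - 1) + 2 * t2 * (y2 - 1) + 2 * t3 * (y3 - 1)
    dfdy = 2 * t1 * x + 2 * t2 * (2 * x * y) + 2 * t3 * (3 * x * y2)
    return f, dfdx, dfdy


def descend(x, y, learning_rate, steps):
    """Plain gradient descent; returns the list of (x, y, f) points visited."""
    path = []
    for _ in range(steps):
        f, dfdx, dfdy = beale_grad(x, y)
        path.append((x, y, f))
        # Gradient-descent update: var = var - learning_rate * gradient
        x -= learning_rate * dfdx
        y -= learning_rate * dfdy
    f, _, _ = beale_grad(x, y)
    path.append((x, y, f))  # final point after the last update
    return path


too_big = descend(3.0, 4.0, learning_rate=0.1, steps=100)
small = descend(3.0, 4.0, learning_rate=1e-5, steps=1000)
print("lr=0.1:  first step", too_big[1], "final loss finite?", math.isfinite(too_big[-1][2]))
print("lr=1e-5: loss", small[0][2], "->", small[-1][2])
```

With the learning rate of 0.1, the first step already lands near (-2559.5, -5747.9) and the values overflow to `inf`/`nan` within a few steps, while with 1e-5 the loss decreases. How many steps the smaller rate needs to get close to (3, 0.5) is not established by this sketch.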