Altering a column from nullable to not nullable causes the new column to be created, the operation is fully logged, and also causes row versions to be produced if you use RCSI.

You can check this topic for more info: Why does ALTER COLUMN to NOT NULL cause massive log file growth?

Reguarding

I could understand if there was another connection referencing the old

(un-ALTERed) rows in my.Table but this definitely wasn't the case.

You misunderstand how RSCI works.

As soon as the transition to RCSI is completed, every update will generate row versions independent of the fact there are or there aren't othet transactions that are interested in those rows

When either the READ_COMMITTED_SNAPSHOT or ALLOW_SNAPSHOT_ISOLATION

database options are ON, logical copies (versions) are maintained for

all data modifications performed in the database. Every time a row is

modified by a specific transaction, the instance of the Database

Engine stores a version of the previously committed image of the row

in tempdb. Each version is marked with the transaction sequence number

of the transaction that made the change. The versions of modified rows

are chained using a link list. The newest row value is always stored

in the current database and chained to the versioned rows stored in

tempdb.

Understanding Row Versioning-Based Isolation Levels

Or more clearly it's written here:

When either the READ_COMMITTED_SNAPSHOT or ALLOW_SNAPSHOT_ISOLATION

database options are ON, update and delete transactions for a

particular database must maintain row versions even when there are no

transactions using a row versioning-based isolation level.

Constructing a consistent snapshot of data using row versions involves

system resources (CPU and memory), and potentially generates I/O

activity. Because the record versions are stored in tempdb,

performance is better and the number of issued I/Os is lower when more

tempdb pages can be stored in memory for row versioning.

As you imagine, ALTER TABLE operates within 1 transaction, so that row versions are alive for all the duration of this transaction (they could live even more, until a statement interested in them was executing, but since no one was interested in, the minimum "life expectation" is the duration of the owning transaction)

...................................................................................

UPDATED:

I tried to reproduce the issue on SQL Server 2012:

I set tempdb autogrowth to 0 (tempdata set to 10Mb, templog to 1Mb) and created a new database of 20Mb data file + 10 Mb log file, simple recovery model, and created a table dbo.Nums filled up with 1000000 integers (bigint, null) this way:

select top 1000000 row_number() over(order by 1/0) as n

into dbo.Nums

from sys.all_columns c1 cross join sys.all_columns c2;

Then I did a checkpoint and alter a column from null to not null:

alter table dbo.nums alter column n bigint not null

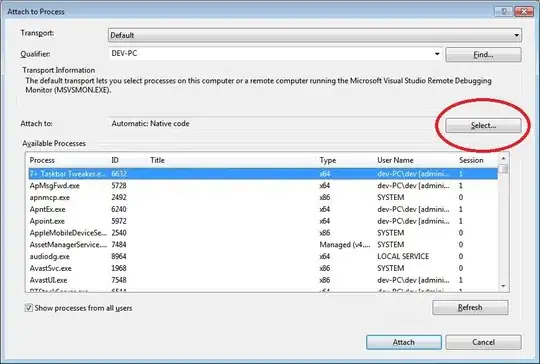

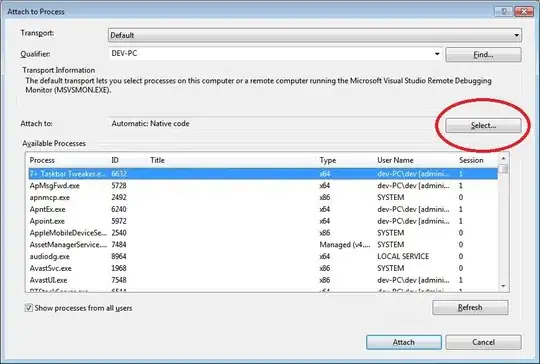

This took 0 seconds, my table size was about 16Mb prior to this action and it remains about 16Mb, no log file growth, and what went to log file I'll show in the picture.

Then I dropped the table, recreate it and altered my db:

alter database rcsi set read_committed_snapshot on;

And did exactly the same thing: checkpoint + alter table + select from sys.fn_dblog()

I had to wait for 5 minutes, but tempdb gives no error.

There was PREEMPTIVE_OS_GETDISKFREESPACE as a wait type during the statement execution, but guess what it was.

It was not tempdb (that was only 10Mb + 1Mb and remains the same as I limited it size), it was the LOG FILE of my user database, that just for changing the data type from nullable to not nullable UNDER RCSI, has grown to 1Gb (!!!!)

1Gb of log for changing nullability of 1 column of the table that was 16Mb only

And all the time I was waiting not for tempdb growth but for zering out 1 Gb for my db log file.

I attach the picture of what went to log during the same operation under RC and RCSI, so you can see that producing row versions cost much more to user database than to tempdb, so I think the hours you was waiting were spent to log row versions to your database log file (they are not logged in tempdb at all)

Becides COPY_VERSION_INFO, there were many row modifications that may not be your case: my rows have got a new 14-byte row version tag so there were too many changes made to that table because I have changed Isolation Level just before changing nullability, but the main impact in my case was produced by user db log file growth and not by tempdb that did not grow at all.

P.S. Maybe you'd better move this question to dbaexchange?