I'm kind of at a loss with the performance of OpenCL on an AMD GPU (Hawaii core, Radeon R9 390).

The operation is as follows:

- send memory object #1 to GPU

- execute kernel #1

- send memory object #2 to GPU

- execute kernel #2

- send memory object #3 to GPU

- execute kernel #3

The dependencies are:

- kernel #1 depends on memory object #1

- kernel #2 depends on memory object #2 as well as on the output memory of kernel #1

- kernel #3 depends on memory object #3 as well as on the output memory of kernels #1 & #2

Memory transfers and kernel executions are issued to two separate command queues. Dependencies between commands are expressed with OpenCL events.
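To make the expected overlap concrete, here is a minimal Python sketch (not the actual host code) that models the two in-order queues and the event wait lists described above. It assumes zero submission and launch latency and that one transfer can overlap one kernel; `Command` and `ideal_schedule` are hypothetical names. The durations are the measured timings listed further down in this post.

```python
from dataclasses import dataclass, field

@dataclass
class Command:
    name: str
    queue: str          # "transfer" or "compute"
    duration_us: float
    wait_for: list = field(default_factory=list)  # commands whose events must complete first

def ideal_schedule(commands):
    """Return {name: (start_us, end_us)} for commands given in issue order.

    Assumes each queue is in-order, one transfer can overlap one kernel,
    and submission/launch latency is zero.
    """
    queue_free = {}  # time at which each queue becomes idle
    times = {}
    for cmd in commands:
        # Earliest time all events in the wait list have completed.
        deps_done = max((times[d][1] for d in cmd.wait_for), default=0.0)
        # An in-order queue also cannot start before its previous command ends.
        start = max(deps_done, queue_free.get(cmd.queue, 0.0))
        end = start + cmd.duration_us
        queue_free[cmd.queue] = end
        times[cmd.name] = (start, end)
    return times

# Issue order and dependencies as described above; durations are the measured timings.
commands = [
    Command("transfer #1", "transfer", 253),
    Command("kernel #1", "compute", 74, ["transfer #1"]),
    Command("transfer #2", "transfer", 120),
    Command("kernel #2", "compute", 95, ["transfer #2", "kernel #1"]),
    Command("transfer #3", "transfer", 143),
    Command("kernel #3", "compute", 107, ["transfer #3", "kernel #1", "kernel #2"]),
]

schedule = ideal_schedule(commands)
for name, (start, end) in schedule.items():
    print(f"{name:12s} {start:6.0f} -> {end:6.0f} us")
```

In this idealized model kernel #3 finishes at 623 µs, so the gap to the measured time is something the model leaves out (it assumes no submission or launch latency at all).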

For performance analysis, the whole operation is looped with the same input data.

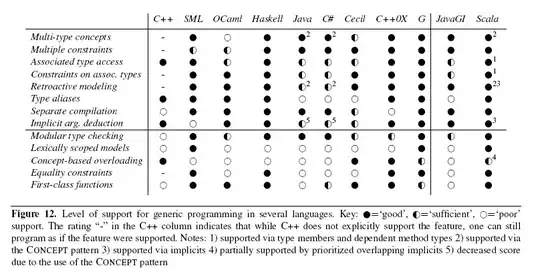

As you can see in the timeline, the host waits a very long time in clWaitForEvents() for the GPU to finish, while the GPU idles most of the time. You can also see the repeated operation. For convenience I also provide the list of all issued OpenCL commands.

My questions now are:

Why is the GPU idling so much? Mentally, I can easily push all the "blue" items together and start the operation right away. Memory transfers run at 6 GB/s, which is the expected rate.

Why are the kernels executed so late? Why is there a gap between kernel #2 and kernel #3 execution?

Why are memory transfers and kernels not executed in parallel? I use two command queues; with only one queue, performance is even worse.

Just by mentally pushing all commands together (respecting the dependencies, of course, so the 1st green must start after the 1st blue), I could triple performance. I don't know why the GPU is so sluggish. Does anyone have any insight?

Some number crunching

- Memory Transfer #1 is 253 µs

- Memory Transfer #2 is 120 µs

- Memory Transfer #3 is 143 µs, which is always too high for unknown reasons; it should be about half of #2, or in the range of 70-80 µs

- Kernel #1 is 74 µs

- Kernel #2 is 95 µs

- Kernel #3 is 107 µs

Since Kernel #1 is faster than Memory Transfer #2, and Kernel #2 is faster than Memory Transfer #3, each of those kernels should be hidden behind the following transfer, so the overall time should be:

- 253 µs + 120 µs + 143 µs + 107 µs = 623 µs
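The same estimate in general form, as a sketch assuming the transfers run back-to-back on their own queue and each kernel starts as soon as its own transfer and the previous kernel have finished ($t_{M_i}$ and $t_{K_i}$ are notation introduced here for the transfer and kernel times):

$$
\begin{aligned}
T_{\text{ideal}} &= \max\bigl(t_{M_1} + t_{M_2} + t_{M_3},\; t_{M_1} + \max(t_{K_1}, t_{M_2}) + t_{K_2}\bigr) + t_{K_3} \\
&= \max(253 + 120 + 143,\; 253 + 120 + 95) + 107 = 516 + 107 = 623\ \mu\text{s}
\end{aligned}
$$

When $t_{K_1} \le t_{M_2}$ and $t_{K_2} \le t_{M_3}$, the first term inside the outer $\max$ dominates and the expression reduces to the plain sum of the three transfers plus kernel #3.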

but clWaitForEvents() takes:

- 1758 µs, or roughly 2.8x as much

Yes, there are some losses, and I'd be fine with something like 10% (60 µs), but nearly 3x is far too much.
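A quick check of these figures, as plain Python arithmetic on the numbers above and assuming the clWaitForEvents() time covers the whole iteration: even if nothing overlapped at all, the GPU work would add up to only 792 µs, so roughly 966 µs per iteration is time in which neither queue is busy.

```python
# Measured per-iteration timings from the post, in microseconds.
transfer_us = [253, 120, 143]
kernel_us = [74, 95, 107]
pipelined_us = 623   # ideal overlapped time from the estimate above
measured_us = 1758   # host wait in clWaitForEvents()

# All GPU work executed strictly one after another, with no overlap and no gaps.
serialized_us = sum(transfer_us) + sum(kernel_us)

print(f"serialized, no gaps:       {serialized_us} us")
print(f"slowdown vs. pipelined:    {measured_us / pipelined_us:.2f}x")
print(f"slowdown vs. serialized:   {measured_us / serialized_us:.2f}x")
print(f"idle even with no overlap: {measured_us - serialized_us} us")
print(f"10% loss budget:           {0.10 * pipelined_us:.1f} us")
```

Comparing against the fully serialized time separates the two questions: missing overlap could explain at most the step from 623 µs to 792 µs, while the remainder is pure idle time.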