I've got an image with regions of interest. I would like to apply random transformations to this image, while keeping the regions of interest correct.

My code takes a list of boxes in the format `[x_min, y_min, x_max, y_max]`.

It then converts every box into a list of vertices `[up_left, up_right, down_right, down_left]`. These vertices are vectors, so I can apply the transformation to them.

The next step is to find the new `[x_min, y_min, x_max, y_max]` from the list of transformed vertices.
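`boxes_to_vertices` and `vertices_to_boxes` aren't shown in this post. Here is a minimal sketch of how such helpers could look, assuming boxes come as an array-like of shape `(n, 4)` and y grows downwards as in image coordinates. These bodies are hypothetical and are not the actual code from the pastebin:

```python
import numpy as np


def boxes_to_vertices(boxes):
    """Hypothetical helper: (n, 4) boxes -> (n, 4, 2) corner vertices.

    Corner order per box: up_left, up_right, down_right, down_left.
    """
    boxes = np.atleast_2d(np.asarray(boxes, dtype=float))
    x_min, y_min, x_max, y_max = boxes.T
    return np.stack([
        np.stack([x_min, y_min], axis=-1),  # up_left
        np.stack([x_max, y_min], axis=-1),  # up_right
        np.stack([x_max, y_max], axis=-1),  # down_right
        np.stack([x_min, y_max], axis=-1),  # down_left
    ], axis=1)


def vertices_to_boxes(vertices):
    """Hypothetical helper: (n * 4, 2) or (n, 4, 2) vertices -> (n, 4) boxes.

    Each box becomes the axis-aligned rectangle that encloses its four
    (possibly transformed) corners.
    """
    vertices = np.asarray(vertices, dtype=float).reshape((-1, 4, 2))
    mins = vertices.min(axis=1)  # (n, 2): x_min, y_min
    maxs = vertices.max(axis=1)  # (n, 2): x_max, y_max
    return np.concatenate([mins, maxs], axis=1)
```

Taking the min/max over the transformed corners is what keeps the result axis-aligned after a rotation, at the cost of the box growing slightly.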

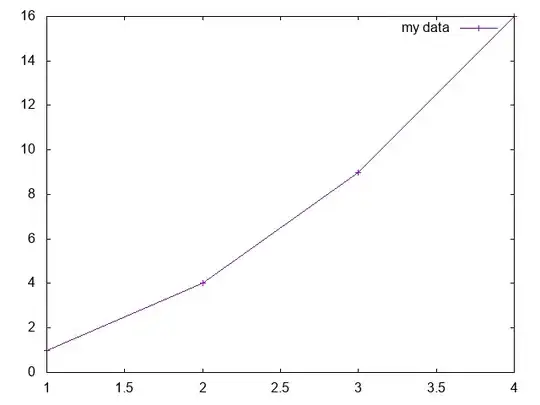

My first application was rotations, which work fine:

Here is the corresponding code. The first part is taken from the Keras codebase; scroll down to the `NEW CODE` comment. If I get the code to work, I would be interested in integrating it into Keras, which is why I'm trying to fit my code into their image preprocessing infrastructure:

```python
import numpy as np

# transform_matrix_offset_center and apply_transform are Keras helpers;
# boxes_to_vertices and vertices_to_boxes are my own helpers (not shown here).


def random_rotation_with_boxes(x, boxes, rg, row_axis=1, col_axis=2, channel_axis=0,
                               fill_mode='nearest', cval=0.):
    """Performs a random rotation of a Numpy image tensor.

    Also rotates the corresponding bounding boxes.

    # Arguments
        x: Input tensor. Must be 3D.
        boxes: a list of bounding boxes [xmin, ymin, xmax, ymax], values in [0, 1].
        rg: Rotation range, in degrees.
        row_axis: Index of axis for rows in the input tensor.
        col_axis: Index of axis for columns in the input tensor.
        channel_axis: Index of axis for channels in the input tensor.
        fill_mode: Points outside the boundaries of the input
            are filled according to the given mode
            (one of `{'constant', 'nearest', 'reflect', 'wrap'}`).
        cval: Value used for points outside the boundaries
            of the input if `mode='constant'`.

    # Returns
        Rotated Numpy image tensor,
        the rotated bounding boxes
        and the rotated vertices.
    """
    # sample parameter for augmentation
    theta = np.pi / 180 * np.random.uniform(-rg, rg)

    # apply to image
    rotation_matrix = np.array([[np.cos(theta), -np.sin(theta), 0],
                                [np.sin(theta), np.cos(theta), 0],
                                [0, 0, 1]])

    h, w = x.shape[row_axis], x.shape[col_axis]
    transform_matrix = transform_matrix_offset_center(rotation_matrix, h, w)
    x = apply_transform(x, transform_matrix, channel_axis, fill_mode, cval)

    # -------------------------------------------------
    # NEW CODE FROM HERE
    # -------------------------------------------------

    # apply to vertices
    vertices = boxes_to_vertices(boxes)
    vertices = vertices.reshape((-1, 2))

    # apply offset to have pivot point at [0.5, 0.5]
    vertices -= [0.5, 0.5]

    # apply rotation, we only need the rotation part of the matrix
    vertices = np.dot(vertices, rotation_matrix[:2, :2])

    # shift back
    vertices += [0.5, 0.5]

    boxes = vertices_to_boxes(vertices)

    return x, boxes, vertices
```

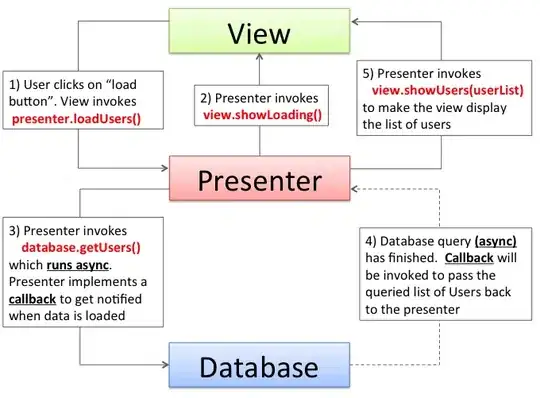

As you can see, Keras uses `scipy.ndimage` to apply the transformation to the image.
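For reference, the SciPy documentation for `scipy.ndimage.affine_transform` states that the value of the output pixel at index $\mathbf{o}$ is taken from the input at position `np.dot(matrix, o) + offset`, i.e. the matrix maps output coordinates to input coordinates. Writing $M$ for the matrix and $\mathbf{t}$ for the offset (in Keras's `apply_transform` these appear to be `transform_matrix[:2, :2]` and `transform_matrix[:2, 2]`, if I read the source correctly), a feature at input position $\mathbf{p}$ ends up at output position $\mathbf{o}$:

$$
\mathrm{output}[\mathbf{o}] = \mathrm{input}[M\mathbf{o} + \mathbf{t}]
\quad\Longrightarrow\quad
\mathbf{p} = M\mathbf{o} + \mathbf{t}
\quad\Longrightarrow\quad
\mathbf{o} = M^{-1}(\mathbf{p} - \mathbf{t})
$$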

My bounding boxes have coordinates in [0, 1], so the center is [0.5, 0.5], and the rotation needs to be applied around [0.5, 0.5] as the pivot point. It would be possible to use homogeneous coordinates and matrices to shift, rotate and shift back the vectors. That's what Keras does for the image.

There is an existing `transform_matrix_offset_center` function, but it offsets to `float(width) / 2 + 0.5`. The `+ 0.5` makes it unsuitable for my coordinates in [0, 1], so I'm shifting the vectors myself.
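The shift-transform-shift idea can also be written with homogeneous coordinates and an explicit pivot of [0.5, 0.5] instead of Keras's pixel-based offset. A minimal sketch; `offset_about_pivot` and `apply_homogeneous` are hypothetical names, not Keras functions. It uses the column-vector convention p' = M p (the one Keras's matrices use, with the translation in the last column); in that convention, `np.dot(vertices, R)` from the code above corresponds to M = Rᵀ:

```python
import numpy as np


def offset_about_pivot(matrix, pivot=(0.5, 0.5)):
    """Hypothetical helper: make a 3x3 homogeneous matrix act around
    `pivot` instead of the origin (shift to origin, transform, shift back)."""
    px, py = pivot
    to_origin = np.array([[1, 0, -px], [0, 1, -py], [0, 0, 1]], dtype=float)
    back = np.array([[1, 0, px], [0, 1, py], [0, 0, 1]], dtype=float)
    return back @ matrix @ to_origin


def apply_homogeneous(matrix, vertices):
    """Hypothetical helper: apply a 3x3 affine matrix to (n, 2) points with
    the column-vector convention p' = M @ p."""
    vertices = np.asarray(vertices, dtype=float)
    ones = np.ones((len(vertices), 1))
    homogeneous = np.hstack([vertices, ones])  # (n, 3), last coordinate 1
    # For affine matrices the last coordinate stays 1, so no division needed.
    return (homogeneous @ matrix.T)[:, :2]
```

With this, the manual `vertices -= [0.5, 0.5]` / multiply / `vertices += [0.5, 0.5]` sequence and `apply_homogeneous(offset_about_pivot(rotation_matrix.T), vertices)` give the same result.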

For rotations, this code works fine. I thought it would be generally applicable.

But for zooming, this fails in a strange way. The code is pretty much identical:

```python
vertices -= [0.5, 0.5]
# apply zoom, we only need the zoom part of the matrix
vertices = np.dot(vertices, zoom_matrix[:2, :2])
vertices += [0.5, 0.5]
```

The output is this:

There seem to be several problems:

- The shifting is broken. In image 1, the ROI and the corresponding image part barely overlap.

- The coordinates seem to be switched. In image 2, the ROI and the image seem to be scaled differently along the x and y axes.

I tried switching the axes by using `(zoom_matrix[:2, :2].T)[::-1, ::-1]`.

That leads to this:

Now the scale factor is broken? I've tried many different variations on this matrix multiplication, transposing, mirroring, changing the scale factors, etc. I can't seem to get it right.
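For a diagonal zoom matrix, that expression only swaps the two factors: transposing a diagonal matrix leaves it unchanged, and `[::-1, ::-1]` reverses both rows and columns, which moves `zx` and `zy` into each other's place on the diagonal. It never inverts them. A quick check, assuming the zoom matrix has the diagonal form `[[zx, 0, 0], [0, zy, 0], [0, 0, 1]]` that Keras's `random_zoom` builds (the factor values are arbitrary):

```python
import numpy as np

zx, zy = 2.0, 3.0  # arbitrary example zoom factors
zoom_matrix = np.array([[zx, 0, 0],
                        [0, zy, 0],
                        [0, 0, 1]])

# The attempted "axis switch" from above
attempt = (zoom_matrix[:2, :2].T)[::-1, ::-1]

# .T leaves a diagonal matrix unchanged and [::-1, ::-1] swaps the diagonal
# entries, so the result is diag(zy, zx): swapped, but not inverted.
print(np.allclose(attempt, np.diag([zy, zx])))  # True
```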

And in any case, I think that the original code should be correct. It works for rotations, after all. At this point, I'm wondering whether this is a peculiarity of scipy's ndimage resampling.

Is this a mistake in my math, or is something missing to truly emulate scipy's ndimage resampling?

I've put the full source code on Pastebin. Most of it is actually Keras code; I only changed small parts: https://pastebin.com/tsHnLLgy

The code to use the new augmentations and create these images is here: https://nbviewer.jupyter.org/gist/lhk/b8f30e9f30c5d395b99188a53524c53e

UPDATE:

If the zoom factors are inverted, the transformation works. For zoom, this operation is simple and can be expressed as:

```python
# vertices is an array of shape [number of vertices, 2]
vertices *= [1/zx, 1/zy]
```
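Both working cases in this post fit one rule: multiply the (row-vector) vertices by the transpose of the inverse matrix, i.e. `vertices @ inv(M).T`. For the diagonal zoom matrix this is exactly `vertices * [1/zx, 1/zy]`; for a rotation matrix `inv(R).T` equals `R`, which is the `np.dot(vertices, rotation_matrix[:2, :2])` from the rotation code. A minimal sketch, assuming the vertex coordinates use the same axis order as the matrix and the pivot is [0.5, 0.5]; `transform_vertices` is a hypothetical name, not part of Keras:

```python
import numpy as np


def transform_vertices(vertices, matrix, pivot=(0.5, 0.5)):
    """Hypothetical helper: move (n, 2) vertices by the inverse of `matrix`.

    If `matrix` is used as the output->input map during resampling, the
    image content moves by inv(matrix); for row vectors that is
    v @ inv(matrix).T. Only the 2x2 linear part is used; the pivot handles
    the centering.
    """
    linear = np.asarray(matrix, dtype=float)[:2, :2]
    pivot = np.asarray(pivot, dtype=float)
    centered = np.asarray(vertices, dtype=float) - pivot
    return centered @ np.linalg.inv(linear).T + pivot
```

Using `np.linalg.inv` keeps one code path for rotation, zoom and shear instead of special-casing each transform.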

This corresponds to applying the inverse transform to the vertices. In the context of image resampling, this could make sense. An image could be resampled like this:

- create a coordinate vector for every pixel.

- apply the inverse transform to every vector

- interpolate the original image to find the value the vector is now pointing at

- write this value to the output image at the original position
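The four steps above describe backward (output-to-input) mapping. A toy nearest-neighbour version in plain NumPy for a single-channel image, with the transform applied around the image center (taken here as `(h - 1) / 2`, an assumption); `resample_backward` is a hypothetical function for illustration and not how scipy implements it internally. Here `matrix` plays the role of the inverse transform from step 2, like the matrix scipy receives:

```python
import numpy as np


def resample_backward(image, matrix):
    """Toy nearest-neighbour resampler using backward mapping.

    Every output pixel o takes its value from input[matrix @ (o - c) + c],
    where c is the image center. Pixels that map outside the input become 0.
    """
    h, w = image.shape
    center = np.array([(h - 1) / 2, (w - 1) / 2])
    rows, cols = np.indices((h, w))
    # 1. a coordinate vector for every output pixel, shape (h * w, 2)
    coords = np.stack([rows.ravel(), cols.ravel()], axis=1).astype(float)
    # 2. map every vector into the input with `matrix` (around the center)
    src = (coords - center) @ np.asarray(matrix, dtype=float).T + center
    # 3. "interpolate": here simply round to the nearest input pixel
    src = np.rint(src).astype(int)
    inside = (src[:, 0] >= 0) & (src[:, 0] < h) & (src[:, 1] >= 0) & (src[:, 1] < w)
    out = np.zeros(h * w, dtype=image.dtype)
    # 4. write the value at the original (output) position
    out[inside] = image[src[inside, 0], src[inside, 1]]
    return out.reshape(h, w)
```

With `matrix = np.diag([2.0, 2.0])`, a bright pixel two rows below the center of a 9x9 image ends up one row below the center: the content moves by the inverse of the matrix, not by the matrix itself.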

But for rotation, I didn't invert the matrix and the operation worked correctly.
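One way to see why the rotation case didn't need an explicit inversion, assuming the vertices are multiplied as row vectors like in `np.dot(vertices, rotation_matrix[:2, :2])`: multiplying a row vector by a matrix is the same as applying the transposed matrix to the column vector, and for a rotation the transpose is the inverse. A diagonal zoom matrix is its own transpose, so nothing gets inverted:

$$
\mathbf{v}^\top R = \left(R^\top \mathbf{v}\right)^\top = \left(R^{-1}\mathbf{v}\right)^\top,
\qquad
\mathbf{v}^\top Z = \left(Z^\top \mathbf{v}\right)^\top = \left(Z\mathbf{v}\right)^\top,
\quad
Z = \begin{pmatrix} z_x & 0 \\ 0 & z_y \end{pmatrix}
$$

If that reading is right, the rotation code was already applying the inverse transform implicitly, which is what `vertices *= [1/zx, 1/zy]` does explicitly for the zoom.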

The question itself, how to fix this, seems answered. But I don't understand why.