I am trying to run a Spark application on a Spark standalone cluster with 3 nodes in total.

There are 3 workers on the cluster. One node has 4 GB of RAM and the other two have 8 GB each.

I am running the same application with different numbers of cores (2, 3, 4 and 5), but the execution time stays the same.

I am submitting the application to the cluster using `sparkclr-submit`.

Can anyone tell me why this might be happening?

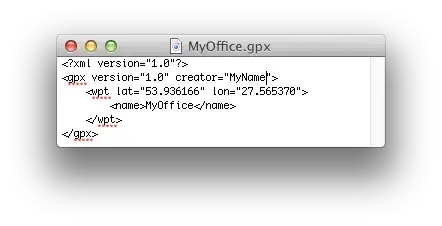

here is the image of sparkUI

Thanks.