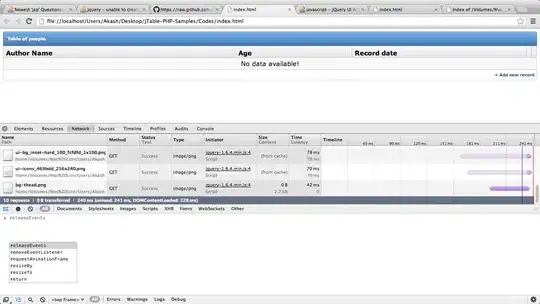

I have a few thousands of PDF files containing B&W images (1bit) from digitalized paper forms. I'm trying to OCR some fields, but sometime the writing is too faint:

I've just learned about morphological transforms. They are really cool!!! I feel like I'm abusing them (like I did with regular expressions when I learned Perl).

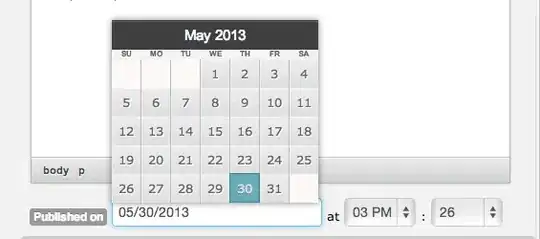

I'm only interested in the date, 07-06-2017:

im = cv2.blur(im, (5, 5))

plt.imshow(im, 'gray')

ret, thresh = cv2.threshold(im, 250, 255, 0)

plt.imshow(~thresh, 'gray')

People filling this form seems to have some disregard for the grid, so I tried to get rid of it. I'm able to isolate the horizontal line with this transform:

horizontal = cv2.morphologyEx(

~thresh,

cv2.MORPH_OPEN,

cv2.getStructuringElement(cv2.MORPH_RECT, (100, 1)),

)

plt.imshow(horizontal, 'gray')

I can get the vertical lines as well:

plt.imshow(horizontal ^ ~thresh, 'gray')

ret, thresh2 = cv2.threshold(roi, 127, 255, 0)

vertical = cv2.morphologyEx(

~thresh2,

cv2.MORPH_OPEN,

cv2.getStructuringElement(cv2.MORPH_RECT, (2, 15)),

iterations=2

)

vertical = cv2.morphologyEx(

~vertical,

cv2.MORPH_ERODE,

cv2.getStructuringElement(cv2.MORPH_RECT, (9, 9))

)

horizontal = cv2.morphologyEx(

~horizontal,

cv2.MORPH_ERODE,

cv2.getStructuringElement(cv2.MORPH_RECT, (7, 7))

)

plt.imshow(vertical & horizontal, 'gray')

Now I can get rid of the grid:

plt.imshow(horizontal & vertical & ~thresh, 'gray')

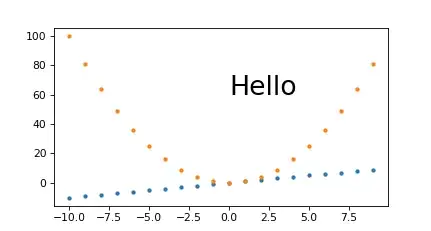

The best I got was this, but the 4 is still split into 2 pieces:

plt.imshow(cv2.morphologyEx(im2, cv2.MORPH_CLOSE,

cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (5, 5))), 'gray')

Probably at this point it is better to use cv2.findContours and some heuristic in order to locate each digit, but I was wondering:

- should I give up and demand all documents to be rescanned in grayscale?

- are there better methods in order to isolate and locate the faint digits?

- do you know any morphological transform to join cases like the "4"?

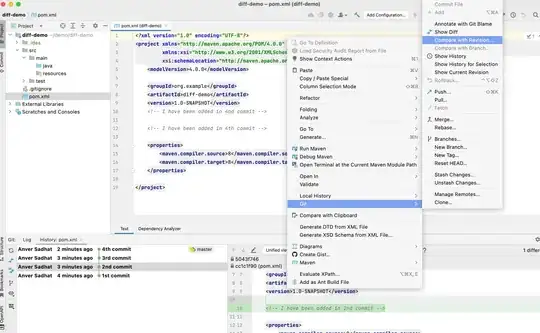

[update]

Is rescanning the documents too demanding? If it is no great trouble I believe it is better to get higher quality inputs than training and trying to refine your model to withstand noisy and atypical data

A bit of context: I'm a nobody working at a public agency in Brazil. The price for ICR solutions start in the 6 digits so nobody believes a single guy can write an ICR solution in-house. I'm naive enough to believe I can prove them wrong. Those PDF documents were sitting at an FTP server (about 100K files) and were scanned just to get rid of the dead tree version. Probably I can get the original form and scan again myself but I would have to ask for some official support - since this is the public sector I would like to keep this project underground as much as I can. What I have now is an error rate of 50%, but if this approach is a dead end there is no point trying to improve it.