I am doing batch ingestion in druid, by using the wikiticker-index.json file which comes with the druid quickstart.

Following is my data schema in wikiticker-index.json file.

{

type:"index_hadoop",

spec:{

ioConfig:{

type:"hadoop",

inputSpec:{

type:"static",

paths:"quickstart/wikiticker-2015-09-12-sampled.json"

}

},

dataSchema:{

dataSource:"wikiticker",

granularitySpec:{

type:"uniform",

segmentGranularity:"day",

queryGranularity:"none",

intervals:[

"2015-09-12/2015-09-13"

]

},

parser:{

type:"hadoopyString",

parseSpec:{

format:"json",

dimensionsSpec:{

dimensions:[

"channel",

"cityName",

"comment",

"countryIsoCode",

"countryName",

"isAnonymous",

"isMinor",

"isNew",

"isRobot",

"isUnpatrolled",

"metroCode",

"namespace",

"page",

"regionIsoCode",

"regionName",

"user"

]

},

timestampSpec:{

format:"auto",

column:"time"

}

}

},

metricsSpec:[

{

name:"count",

type:"count"

},

{

name:"added",

type:"longSum",

fieldName:"added"

},

{

name:"deleted",

type:"longSum",

fieldName:"deleted"

},

{

name:"delta",

type:"longSum",

fieldName:"delta"

},

{

name:"user_unique",

type:"hyperUnique",

fieldName:"user"

}

]

},

tuningConfig:{

type:"hadoop",

partitionsSpec:{

type:"hashed",

targetPartitionSize:5000000

},

jobProperties:{

}

}

}

}

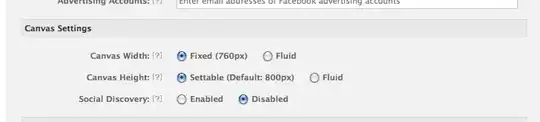

After ingesting the sample json. only the following metrics show up.

I am unable to find the longSum metrics.i.e added, deleted and delta. Any particular reason?

Does anybody know about this?