I'm new to Python and BeautifulSoup, so any help is highly appreciated.

I have an idea of how to build a list of one company's info, but only after clicking through to that company's link.

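    import requests
    from bs4 import BeautifulSoup

    url = "http://data-interview.enigmalabs.org/companies/"

    r = requests.get(url)
    # Name the parser explicitly to avoid BeautifulSoup's "no parser specified" warning
    soup = BeautifulSoup(r.content, "html.parser")

    # Collect every <a> tag on the index page
    links = soup.find_all("a")
    link_list = []

    for link in links:
        print(link.get("href"), link.text)
        # list.append() returns None, so append without printing the result
        link_list.append(link)

    # Grab the div(s) with class "table-responsive"
    g_data = soup.find_all("div", {"class": "table-responsive"})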

Can anyone suggest how to go about first scraping the links and then building a JSON of all the company listings on the site?
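A minimal sketch of the link-gathering step, assuming each company's detail page is linked from the index with an `href` containing `/companies/` (I haven't confirmed the site's real URL pattern, so the filter is an assumption to adjust). It uses `urljoin` to turn relative hrefs into absolute URLs and drops duplicates while keeping order. The `sample_index` markup and the `extract_company_links` helper are hypothetical; the sample lets it run without network access.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = "http://data-interview.enigmalabs.org/companies/"


def extract_company_links(html, base_url=BASE_URL):
    """Return absolute, de-duplicated URLs of company detail pages.

    ASSUMPTION: detail links contain "/companies/" in their href and are
    not the index page itself. Adjust the filter for the real site.
    """
    soup = BeautifulSoup(html, "html.parser")
    urls = []
    seen = set()
    for a in soup.find_all("a", href=True):
        url = urljoin(base_url, a["href"])
        # Skip navigation links and the index page itself
        if "/companies/" not in url or url.rstrip("/") == base_url.rstrip("/"):
            continue
        if url not in seen:
            seen.add(url)
            urls.append(url)
    return urls


# Hypothetical index markup, only for illustration
sample_index = """
<a href="/">Home</a>
<a href="/companies/">All companies</a>
<a href="/companies/Dickens-Tillman">Dickens-Tillman</a>
<a href="/companies/Klein-Powlowski">Klein-Powlowski</a>
<a href="/companies/Dickens-Tillman">Dickens-Tillman</a>
"""

for company_url in extract_company_links(sample_index):
    print(company_url)
```

On the live site, the same function would be called with `requests.get(url).text` in place of `sample_index`.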

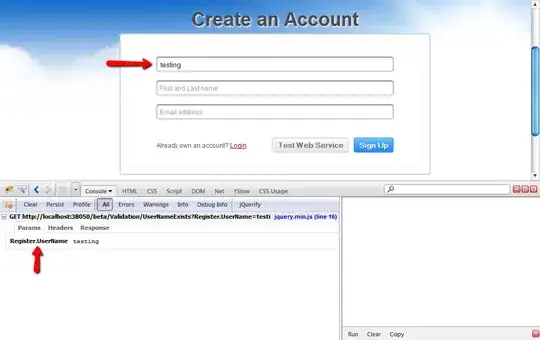

I've also attached sample images to help with visualization.

How would I scrape the site and build a JSON like my example below without having to click on each individual link?
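A minimal sketch of parsing one company detail page into the nested shape of the expected output. It assumes (unverified) that the fields sit in a `<table>` inside `<div class="table-responsive">`, one field per row, with the label in the first cell and the value in the second. The `parse_company_page` helper and `sample_page` markup are hypothetical.

```python
from bs4 import BeautifulSoup


def parse_company_page(html):
    """Turn one company detail page into {name: {"Company Detail": {...}}}.

    ASSUMPTION: details live in a <table> inside
    <div class="table-responsive">, one field per row, label first and
    value second. Check this against the real markup.
    """
    soup = BeautifulSoup(html, "html.parser")
    container = soup.find("div", class_="table-responsive")
    if container is None:
        return None
    details = {}
    for row in container.find_all("tr"):
        cells = row.find_all(["th", "td"])
        if len(cells) >= 2:
            label = cells[0].get_text(strip=True)
            value = cells[1].get_text(strip=True)
            details[label] = value
    name = details.get("Company Name")
    if name is None:
        return None
    return {name: {"Company Detail": details}}


# Hypothetical detail-page markup, only for illustration
sample_page = """
<div class="table-responsive">
  <table>
    <tr><td>Company Name</td><td>Dickens-Tillman</td></tr>
    <tr><td>City</td><td>Connfurt</td></tr>
    <tr><td>State</td><td>Iowa</td></tr>
  </table>
</div>
"""

print(parse_company_page(sample_page))
```

With a list of company URLs in hand, each page could be fetched with `requests.get(url)` and passed to `parse_company_page(r.text)`, appending every non-`None` result to `all_listing`. The script requests each detail page itself, so no manual clicking is involved.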

Example Expected Output:

    all_listing = [
        {"Dickens-Tillman": {"Company Detail": {
            "Company Name": "Dickens-Tillman",
            "Address Line 1": "7147 Guilford Turnpike Suit816",
            "Address Line 2": "Suite 708",
            "City": "Connfurt",
            "State": "Iowa",
            "Zipcode": "22598",
            "Phone": "00866539483",
            "Company Website": "lockman.com",
            "Company Description": "enable robust paradigms"}}},
        {"Klein-Powlowski": {"Company Detail": {
            "Company Name": "Klein-Powlowski",
            "Address Line 1": "32746 Gaylord Harbors",
            "Address Line 2": "Suite 866",
            "City": "Lake Mario",
            "State": "Kentucky",
            "Zipcode": "45517",
            "Phone": "1-299-479-5649",
            "Company Website": "marquardt.biz",
            "Company Description": "monetize scalable paradigms"}}},
    ]

    print(all_listing)
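Note that `print(all_listing)` shows Python's repr (single-quoted strings), which is not valid JSON. A minimal sketch using the standard-library `json` module to turn the list into an actual JSON string; the data below is a trimmed copy of the expected output above.

```python
import json

# Trimmed copy of the expected structure above
all_listing = [
    {"Dickens-Tillman": {"Company Detail": {
        "Company Name": "Dickens-Tillman",
        "City": "Connfurt",
        "State": "Iowa",
    }}},
    {"Klein-Powlowski": {"Company Detail": {
        "Company Name": "Klein-Powlowski",
        "City": "Lake Mario",
        "State": "Kentucky",
    }}},
]

# Serialize to a JSON string (double-quoted keys and values, valid JSON)
json_text = json.dumps(all_listing, indent=2)
print(json_text)
```

To save the result instead, `json.dump(all_listing, f, indent=2)` writes the same output to an open file object `f`.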