I'm comparing a logistic regressor written in Keras against scikit-learn's default LogisticRegression. My input is one-dimensional. My output has two classes, and I'm interested in the probability that the output belongs to class 1.

I'm expecting the results to be almost identical, but they are not even close.

Here is how I generate my random data. Note that X_train and X_test are still vectors; I'm just using capital letters out of habit. There is also no need for scaling in this case, since X already lies in [0, 1].

import numpy as np

X = np.linspace(0, 1, 10000)

y = np.random.sample(X.shape)

y = np.where(y<X, 1, 0)
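By construction, each label is 1 exactly when a uniform draw falls below x, so P(y = 1 | x) = x. A quick sanity check of that, as a minimal sketch: the fixed seed, the use of `np.random.default_rng` instead of `np.random.sample`, the choice of 10 chunks, and the names `x_means` and `bin_means` are my own assumptions for illustration, not part of the setup above.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, assumed only for reproducibility

X = np.linspace(0, 1, 10000)
y = np.where(rng.random(X.shape) < X, 1, 0)

# X is sorted, so reshaping into 10 rows gives 10 contiguous chunks of 1000 points.
# Compare the mean x in each chunk with the fraction of positive labels there.
x_means = X.reshape(10, -1).mean(axis=1)
bin_means = y.reshape(10, -1).mean(axis=1)

for xm, ym in zip(x_means, bin_means):
    print(f"mean x = {xm:.3f}   fraction of y == 1 = {ym:.3f}")
```

If the two columns track each other, a well-calibrated model should output probabilities close to x itself.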

Here's the cumulative sum of y plotted over X. Doing a regression here is not rocket science.

I do a standard train-test-split:

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y)

X_train = X_train.reshape(-1,1)

X_test = X_test.reshape(-1,1)

Next, I train a default logistic regressor:

from sklearn.linear_model import LogisticRegression

sk_lr = LogisticRegression()

sk_lr.fit(X_train, y_train)

sklearn_logreg_result = sk_lr.predict_proba(X_test)[:,1]

And a logistic regressor that I write in Keras:

from keras.models import Sequential

from keras.layers import Dense

keras_lr = Sequential()

keras_lr.add(Dense(1, activation='sigmoid', input_dim=1))

keras_lr.compile(loss='mse', optimizer='sgd', metrics=['accuracy'])

_ = keras_lr.fit(X_train, y_train, verbose=0)

keras_lr_result = keras_lr.predict(X_test)[:,0]

And a hand-made solution (a straight-line fit, not a logistic one):

pearson_corr = np.corrcoef(X_train.reshape(X_train.shape[0],), y_train)[0,1]

b = pearson_corr * np.std(y_train) / np.std(X_train)

a = np.mean(y_train) - b * np.mean(X_train)

handmade_result = (a + b * X_test)[:,0]
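For reference, the slope and intercept computed this way are the ordinary least-squares coefficients of a straight line through the data. The sketch below checks this against `np.polyfit` on data regenerated with a fixed seed; the seed, the lowercase `x`, and the names `slope` and `intercept` are assumptions made for the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, assumed only for reproducibility

x = np.linspace(0, 1, 10000)
y = np.where(rng.random(x.shape) < x, 1, 0)

# Hand-made fit: slope from the Pearson correlation, intercept from the means.
r = np.corrcoef(x, y)[0, 1]
b = r * np.std(y) / np.std(x)
a = np.mean(y) - b * np.mean(x)

# Degree-1 least-squares polynomial fit; coefficients come back highest degree first.
slope, intercept = np.polyfit(x, y, deg=1)

print("hand-made:", a, b)
print("polyfit:  ", intercept, slope)
```

Because this is a linear fit, its output is not constrained to [0, 1], unlike the two sigmoid-based models.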

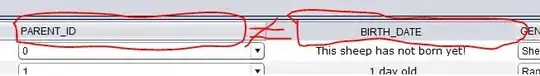

I expect all three to deliver similar results, but here is what happens. This is a reliability diagram using 100 bins.
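For anyone who wants to reproduce the diagram: a reliability diagram bins the predicted probabilities and plots, per bin, the mean prediction against the observed fraction of positives. The exact code used for the plot is not shown here, so the following is only a sketch under my own assumptions (equal-width bins on [0, 1], out-of-range predictions clipped into the first or last bin, and the hypothetical helper name `reliability_curve`).

```python
import numpy as np

def reliability_curve(y_true, y_prob, n_bins=100):
    """Return (mean predicted probability, fraction of positives) per non-empty bin."""
    y_true = np.asarray(y_true, dtype=float)
    y_prob = np.asarray(y_prob, dtype=float)

    edges = np.linspace(0.0, 1.0, n_bins + 1)
    # Using only the interior edges yields bin indices 0 .. n_bins - 1;
    # predictions below 0 or above 1 land in the first or last bin.
    idx = np.digitize(y_prob, edges[1:-1])

    counts = np.bincount(idx, minlength=n_bins)
    prob_sums = np.bincount(idx, weights=y_prob, minlength=n_bins)
    true_sums = np.bincount(idx, weights=y_true, minlength=n_bins)

    keep = counts > 0  # skip empty bins to avoid dividing by zero
    return prob_sums[keep] / counts[keep], true_sums[keep] / counts[keep]

# Demo on synthetic, perfectly calibrated predictions (not the models above).
rng = np.random.default_rng(0)
p = rng.random(100000)
labels = (rng.random(p.shape) < p).astype(int)

mean_pred, frac_pos = reliability_curve(labels, p, n_bins=100)
print("largest gap from the diagonal:", np.max(np.abs(mean_pred - frac_pos)))
```

A calibrated model gives points near the diagonal, so calling the helper with `y_test` and each of the three result arrays would show which model drifts away from it. scikit-learn's `sklearn.calibration.calibration_curve` computes a similar curve.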

I have played around with loss functions and other parameters, but the Keras logreg stays roughly like this. What might be causing the problem here?

Edit: Using binary cross-entropy is not the solution here, as shown by this plot (note that the input data changed between the two plots).