Good morning

I am trying to fit a sklearn Multilayer Perceptron Regressor (`MLPRegressor`) to a dataset with about 350 features and 1400 samples and strictly positive targets (house prices). A grid search over only the hidden-layer size shows that from 5 neurons upward, the Root Mean Square Logarithmic Error (RMSLE) barely changes. I see the same behaviour with two hidden layers, for sizes from (1, 1) up to (70, 70). The RMSLE stays around 0.23, whereas ridge regression gives me about 0.14 for comparison.
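For reference, a two-hidden-layer search like the one described can be expressed by passing tuples to `hidden_layer_sizes`. This is a minimal sketch, assuming both layers share the same width at each grid point (the exact two-layer grid is not shown in the post):

```python
from sklearn.neural_network import MLPRegressor

# Single hidden layer: an int n means one hidden layer with n neurons.
one_layer_sizes = list(range(1, 15))

# Two hidden layers of equal width, from (1, 1) up to (70, 70).
# Assumption: equal widths per layer; the post does not say how the grid was built.
two_layer_sizes = [(n, n) for n in range(1, 71)]

param_grid_two_layers = {"hidden_layer_sizes": two_layer_sizes}

# Each tuple is a valid hidden_layer_sizes value (one entry per hidden layer).
mlp_two = MLPRegressor(hidden_layer_sizes=two_layer_sizes[-1])
print(mlp_two.hidden_layer_sizes)  # (70, 70)
```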

Is one of my hyperparameters capping the performance, or is this normal?

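```python
from sklearn.model_selection import GridSearchCV
from sklearn.neural_network import MLPRegressor

mlp = MLPRegressor()

param_grid = {
    'hidden_layer_sizes': [i for i in range(1, 15)],  # one hidden layer, 1 to 14 neurons
    'activation': ['relu'],
    'solver': ['adam'],
    'learning_rate': ['constant'],   # only used when solver='sgd'
    'learning_rate_init': [0.001],
    'power_t': [0.5],                # only used when solver='sgd'
    'alpha': [0.0001],
    'max_iter': [1000],
    'early_stopping': [False],
    'warm_start': [False],
}

# scorer (wrapping rmsle_vector below) and kf (the CV splitter) are defined elsewhere
_GS = GridSearchCV(mlp, param_grid=param_grid, scoring=scorer,
                   cv=kf, verbose=True, pre_dispatch='2*n_jobs')

_GS.fit(X, y)
```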
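To judge whether a plateau like this is real, it can help to compare the differences in `mean_test_score` between sizes against `std_test_score` across folds. A small sketch of pulling those values out of `cv_results_`, run on a hypothetical synthetic dataset, with `scoring="r2"` as a stand-in for the custom scorer and a reduced grid and `max_iter` so it runs quickly:

```python
import warnings

from sklearn.datasets import make_regression
from sklearn.exceptions import ConvergenceWarning
from sklearn.model_selection import GridSearchCV, KFold
from sklearn.neural_network import MLPRegressor

# Hypothetical small synthetic data standing in for the real X, y.
X_demo, y_demo = make_regression(n_samples=200, n_features=10, noise=5.0, random_state=0)

search = GridSearchCV(
    MLPRegressor(max_iter=200, random_state=0),
    param_grid={"hidden_layer_sizes": [1, 5, 10]},
    scoring="r2",  # stand-in for the custom RMSLE scorer
    cv=KFold(n_splits=3, shuffle=True, random_state=0),
)

with warnings.catch_warnings():
    # max_iter is kept low for speed, so convergence warnings are silenced.
    warnings.simplefilter("ignore", category=ConvergenceWarning)
    search.fit(X_demo, y_demo)

# One line per hidden layer size: mean and spread of the fold scores.
res = search.cv_results_
for size, mean, std in zip(res["param_hidden_layer_sizes"],
                           res["mean_test_score"], res["std_test_score"]):
    print(f"{size!s:>4}  mean={mean:.3f}  std={std:.3f}")
```

If the gaps between the means are smaller than the fold-to-fold standard deviations, the search cannot really tell those sizes apart.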

I use a custom Root Mean Square Logarithmic Error scoring function (yes, it handles negative predictions poorly):

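```python
import numpy as np

def rmsle_vector(y, y0):
    """Negated RMSLE, so that higher (closer to 0) is better for GridSearchCV."""
    y = np.asarray(y, dtype=float)
    y0 = np.asarray(y0, dtype=float)
    assert len(y) == len(y0)
    # Replace non-positive predictions with a tiny positive value, without
    # overwriting the caller's prediction array in place.
    y0 = np.where(y0 <= 0, 1e-9, y0)
    return -np.sqrt(np.mean((np.log1p(y) - np.log1p(y0)) ** 2))
```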
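If `scorer` is built with `make_scorer`, note that `rmsle_vector` already flips the sign, so `greater_is_better` should stay at its default `True`; passing `False` would negate the score a second time. A sketch of that wiring plus a sanity check against sklearn's `mean_squared_log_error` (the function is repeated so the snippet runs on its own, and the example values are made up):

```python
import numpy as np
from sklearn.metrics import make_scorer, mean_squared_log_error

def rmsle_vector(y, y0):
    """Negated RMSLE; non-positive predictions are replaced by 1e-9."""
    y = np.asarray(y, dtype=float)
    y0 = np.asarray(y0, dtype=float)
    assert len(y) == len(y0)
    y0 = np.where(y0 <= 0, 1e-9, y0)
    return -np.sqrt(np.mean((np.log1p(y) - np.log1p(y0)) ** 2))

# The score is already negated, so larger is better: keep greater_is_better=True.
scorer = make_scorer(rmsle_vector, greater_is_better=True)

# Made-up positive values: the un-negated result should match sqrt(MSLE).
y_true = np.array([100.0, 200.0, 300.0])
y_pred = np.array([110.0, 190.0, 330.0])
ours = -rmsle_vector(y_true, y_pred)
reference = np.sqrt(mean_squared_log_error(y_true, y_pred))
print(np.isclose(ours, reference))  # True
```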

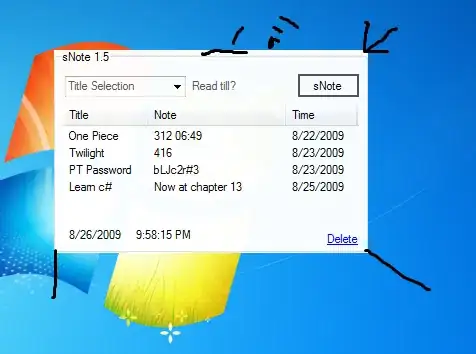

The search results: