I've been using h2o.gbm for a classification problem, and wanted to understand a bit more about how it calculates the class probabilities. As a starting point, I tried to recalculate the class probability of a GBM with only 1 tree (by looking at the observations in the leaves), but the results are very confusing.

Let's assume my positive class is "buy" and my negative class is "not_buy", and that I have a training set called "dt.train" and a separate test set called "dt.test".

In a normal decision tree, the class probability for "buy" P(has_bought="buy") for a new data row (test-data) is calculated by dividing all observations in the leaf with class "buy" by the total number of observations in the leaf (based on the training data used to grow the tree).
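To make that rule concrete, here is a minimal sketch of the calculation for a single leaf. The labels are made-up toy values, not rows from "dt.train", and the variable names are only for illustration.

```r
# Toy labels of the training rows that ended up in one leaf (made-up values)
leaf_labels <- c("buy", "not_buy", "buy", "not_buy", "not_buy")

# Count the positive-class rows and all rows in the leaf
pos_n <- sum(leaf_labels == "buy")
total_n <- length(leaf_labels)

# Class probability a plain decision tree would assign to this leaf
p_buy <- pos_n / total_n
print(p_buy)
```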

However, h2o.gbm seems to do something different, even when I simulate a 'normal' decision tree (setting ntrees to 1, and all sample rates to 1). I think the best way to illustrate this confusion is to describe what I did step by step.

Step 1: Training the model

I do not care about overfitting or model performance. I want to make my life as easy as possible, so I've set ntrees to 1, and made sure all training data (rows and columns) are used for each tree and split by setting all sample-rate parameters to 1. Below is the code to train the model.

    base.gbm.model <- h2o.gbm(
      x = predictors,
      y = "has_bought",
      training_frame = dt.train,
      model_id = "2",
      nfolds = 0,
      ntrees = 1,
      learn_rate = 0.001,
      max_depth = 15,
      sample_rate = 1,
      col_sample_rate = 1,
      col_sample_rate_per_tree = 1,
      seed = 123456,
      keep_cross_validation_predictions = TRUE,
      stopping_rounds = 10,
      stopping_tolerance = 0,
      stopping_metric = "AUC",
      score_tree_interval = 0
    )

Step 2: Getting the leaf assignments of the training set

What I want to do is take the same data that was used to train the model and find out which leaf each row ended up in. H2O offers a function for this, which is shown below.

    train.leafs <- h2o.predict_leaf_node_assignment(base.gbm.model, dt.train)

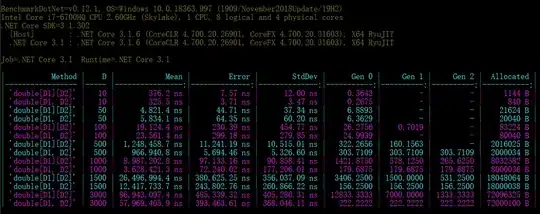

This will return the leaf node assignment (e.g. "LLRRLL") for each row in the training data. As we only have 1 tree, this column is called "T1.C1" which I renamed to "leaf_node", which I cbind with the target variable "has_bought" of the training data. This results in the output below (from here on referred to as "train.leafs").

Step 3: Making predictions on the test set

For the test set, I want to predict two things:

- The prediction of the model itself, P(has_bought="buy")

- The leaf node assignment according to the model.

    test.leafs <- h2o.predict_leaf_node_assignment(base.gbm.model, dt.test)
    test.pred <- h2o.predict(base.gbm.model, dt.test)

After finding this, I've used cbind to combine these two predictions with the target variable of the test-set.

    test.total <- h2o.cbind(dt.test[, c("has_bought")], test.pred, test.leafs)

The result of this, is the table below, from here on referred to as "test.total"

Unfortunately, I do not have enough reputation points to post more than 2 links. But if you click on "Table "test.total" combined with manual probability calculation" in step 5, the table shown there is the same one, only with the additional column "manual_prob_buy".

Step 4: Manually predicting probabilities

Theoretically, I should be able to predict the probabilities now myself. I did this by writing a loop, that loops over each row in "test.total". For each row, I take the leaf node assignment.

I then use that leaf node assignment to filter the table "train.leafs", and check how many observations have the positive class (has_bought == "buy") (posN) and how many observations there are in total (totalN) within the leaf associated with the test row.

I perform the (standard) calculation posN / totalN, and store this in the test-row as a new column called "manual_prob_buy", which should be the probability of P(has_bought="buy") for that leaf. Thus, each test-row that falls in this leaf should get this probability. This for-loop is shown below.

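    # Assumes test.total and train.leafs are data.table objects (required for `:=`)
    for (i in seq_len(nrow(test.total))) {
      # Leaf that the i-th test row falls into
      leaf <- test.total[i, leaf_node]
      # Training rows in that leaf: all of them, and those with class "buy"
      totalN <- nrow(train.leafs[train.leafs$leaf_node == leaf, ])
      posN <- nrow(train.leafs[train.leafs$leaf_node == leaf & train.leafs$has_bought == "buy", ])
      # Store the share of "buy" rows in the leaf on the test row
      test.total[i, manual_prob_buy := posN / totalN]
    }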
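The same per-leaf calculation can also be done without a row-by-row loop. Below is a minimal base R sketch on toy data; "train_toy" and "test_toy" are hypothetical stand-ins for "train.leafs" and "test.total", and the leaf labels and classes in them are made up.

```r
# Toy stand-in for train.leafs: leaf assignment and target per training row
train_toy <- data.frame(
  leaf_node  = c("LL", "LL", "LL", "LR", "LR", "R", "R", "R", "R"),
  has_bought = c("buy", "not_buy", "not_buy", "buy", "buy",
                 "not_buy", "not_buy", "not_buy", "buy"),
  stringsAsFactors = FALSE
)

# Toy stand-in for test.total: leaf assignment per test row
test_toy <- data.frame(leaf_node = c("LR", "R", "LL"), stringsAsFactors = FALSE)

# Share of "buy" rows per leaf, computed once on the training rows
leaf_prob <- tapply(train_toy$has_bought == "buy", train_toy$leaf_node, mean)

# Look up each test row's leaf and attach the corresponding share
idx <- match(test_toy$leaf_node, names(leaf_prob))
test_toy$manual_prob_buy <- as.vector(leaf_prob)[idx]

print(test_toy)
```

Computing the per-leaf share once and looking it up per test row should give the same "manual_prob_buy" values as the loop, while avoiding a filter of the training table for every test row.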

Step 5: Comparing the probabilities

This is where I get confused. Below is the updated "test.total" table, in which "buy" represents the probability P(has_bought="buy") according to the model and "manual_prob_buy" represents the manually calculated probability from step 4. As far as I know, these probabilities should be identical, given that I only used 1 tree and set all sample rates to 1.

Table "test.total" combined with manual probability calculation

The Question

I just don't understand why these two probabilities are not the same. As far as I know, I've set the parameters in such a way that it should just be like a 'normal' classification tree.

So the question: does anyone know why I find differences in these probabilities?

I hope someone could point me to where I might have made wrong assumptions. I just really hope I did something stupid, as this is driving me crazy.

Thanks!