I am trying to convert CSV files stored in Azure Data Lake Store into Avro files with a predefined schema. Is there any example source code that does this?

Is the question still relevant? If so, can you provide more details? 1. How should the CSV be converted to Avro: should each field type be inferred somehow, or can all field types be treated as number or string? Do you want one field per CSV column, or an Avro array for each row? 2. Which language do you want to use? Is C OK for that? – Eliyahu Machluf Sep 12 '19 at 09:59

If you are looking to work with a pre-created schema and use it to convert CSV files into Avro, I think Apache does offer libraries for it. – Amit Singh Sep 12 '19 at 14:24

3 Answers

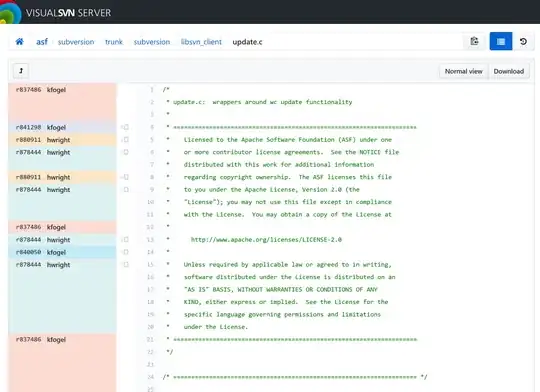

You can use Azure Data Lake Analytics for this. There is a sample Avro extractor at https://github.com/Azure/usql/blob/master/Examples/DataFormats/Microsoft.Analytics.Samples.Formats/Avro/AvroExtractor.cs. You can easily adapt the code into an outputter.

Another possibility is to fire up an HDInsight cluster on top of your data lake store and use Pig, Hive or Spark.


He asked for an Avro outputter, but you showed an Avro reader. Any recommendations? – Alex Gordon Sep 08 '19 at 18:59

That's actually pretty straightforward to do with Azure Data Factory (ADF) and Blob Storage. It should also be very cheap, because you pay per second when executing in ADF, so you only pay for the conversion time. No infrastructure is required.

If your CSV looks like this:

    ID,Name,Surname
    1,Adam,Marczak
    2,Tom,Kowalski
    3,John,Johnson

1. Upload it to Blob Storage, into an input container.
2. In ADF, add a linked service for Blob Storage and select your storage account.
3. Add a dataset of Blob type and set its format to CSV.
4. Add another dataset of Blob type and select the Avro format.
5. Add a pipeline and drag and drop a Copy Data activity onto it.
6. In the source, select your CSV input dataset.
7. In the sink, select your target Avro dataset.
8. Publish and trigger the pipeline.
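For readers who prefer to see the pipeline as a definition rather than as UI steps, here is a rough sketch of what the Copy Data activity from the steps above might look like as JSON, built with plain Python. The dataset names `InputCsv` and `OutputAvro` and the pipeline name are hypothetical, and the `DelimitedTextSource` / `AvroSink` type names are my understanding of ADF's JSON format, so compare against the definition that ADF generates for your own factory before relying on it.

```python
import json

# Hypothetical dataset names; replace with the names used in your factory.
CSV_DATASET = "InputCsv"
AVRO_DATASET = "OutputAvro"


def build_copy_pipeline(name, source_dataset, sink_dataset):
    """Return a pipeline definition containing one Copy activity (CSV to Avro)."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopyCsvToAvro",
                    "type": "Copy",
                    # The CSV dataset is the input, the Avro dataset the output.
                    "inputs": [
                        {"referenceName": source_dataset, "type": "DatasetReference"}
                    ],
                    "outputs": [
                        {"referenceName": sink_dataset, "type": "DatasetReference"}
                    ],
                    # Assumed type names for a delimited-text source and Avro sink.
                    "typeProperties": {
                        "source": {"type": "DelimitedTextSource"},
                        "sink": {"type": "AvroSink"},
                    },
                }
            ]
        },
    }


pipeline = build_copy_pipeline("CsvToAvro", CSV_DATASET, AVRO_DATASET)
print(json.dumps(pipeline, indent=2))
```

The format conversion itself is implied by the two dataset types; the activity only wires the source to the sink.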

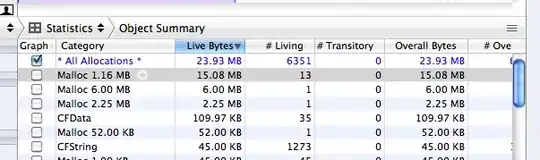

Once the run completes, the output file appears in the blob container, and inspecting it shows that it is an Avro file.

The full code is on GitHub: https://github.com/MarczakIO/azure-datafactory-csv-to-avro

If you want to learn about Data Factory, check out this ADF introduction video: https://youtu.be/EpDkxTHAhOs

And if you want to dynamically pass input and output paths to blob files, check out this video on parametrization of ADF: https://youtu.be/pISBgwrdxPM


Python is always your best friend. You can use this sample code to convert CSV to Avro.

Install these dependencies:

    pip install fastavro
    pip install pandas

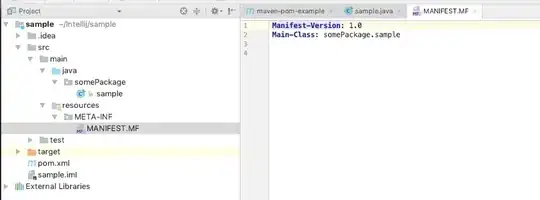

Execute the following Python script:

    from fastavro import writer, parse_schema
    import pandas as pd

    # Read the CSV; dtype=str keeps every column as text so the values
    # match the all-string schema below (empty cells stay empty strings)
    df = pd.read_csv('sample.csv', dtype=str, keep_default_na=False)

    # Define the Avro schema: one string field per CSV column
    schema = {
        'doc': 'Documentation',
        'name': 'Subject',
        'namespace': 'test',
        'type': 'record',
        'fields': [{'name': c, 'type': 'string'} for c in df.columns]
    }
    parsed_schema = parse_schema(schema)

    # Write the Avro file
    with open('sample.avro', 'wb') as out:
        writer(out, parsed_schema, df.to_dict('records'))

input: sample.csv

    col1,col2,col3
    a,b,c
    d,e,f
    g,h,i

output: sample.avro

Objavro.codecnullavro.schemaƒ{"type": "record", "name": "test.Subject", "fields": [{"name": "col1", "type": "string"}, {"name": "col2", "type": "string"}, {"name": "col3", "type": "string"}]}Y«Ÿ>[Ú Ÿÿ Æ?âQI$abcdefghiY«Ÿ>[Ú Ÿÿ Æ?âQI
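The script above declares every field as a string. If you already have a schema with typed fields, the CSV values need to be cast to those types before they are written. Below is a minimal sketch of that casting step using only the standard library. The schema, the `CASTS` table, the `rows_to_records` helper and the sample data are all made up for illustration, and only a few Avro primitive types are handled (no unions, nulls or logical types).

```python
import csv
import io

# Hypothetical pre-created schema; replace with your own.
SCHEMA = {
    "type": "record",
    "name": "Sample",
    "fields": [
        {"name": "id", "type": "int"},
        {"name": "name", "type": "string"},
        {"name": "score", "type": "double"},
    ],
}

# Map Avro primitive type names to Python converters.
CASTS = {
    "int": int,
    "long": int,
    "float": float,
    "double": float,
    "string": str,
    "boolean": lambda s: s.strip().lower() == "true",
}


def rows_to_records(csv_text, schema):
    """Parse CSV text and cast each column to the type its schema field declares."""
    reader = csv.DictReader(io.StringIO(csv_text))
    records = []
    for row in reader:
        record = {}
        for field in schema["fields"]:
            cast = CASTS[field["type"]]
            record[field["name"]] = cast(row[field["name"]])
        records.append(record)
    return records


sample = "id,name,score\n1,a,2.5\n2,b,3.0\n"
records = rows_to_records(sample, SCHEMA)
print(records)
```

The resulting list of dicts has the same shape as `df.to_dict('records')`, so it could be passed to `writer` together with the parsed schema in the same way.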


Can you please add how emkay can run this on Azure, as this was part of the question? – Adam Marczak Sep 16 '19 at 05:36