I am trying to fit a very simple linear regression model using TensorFlow. However, the loss (mean squared error) blows up instead of decreasing.

First, I generate my data:

    import numpy as np
    import tensorflow as tf

    x_data = np.random.uniform(high=10, low=0, size=100)
    y_data = 3.5 * x_data - 4 + np.random.normal(loc=0, scale=2, size=100)
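As a reference point, the least-squares line for data generated this way can be computed directly, which shows roughly what `m`, `c`, and the loss should approach. This is only a sketch: the fixed seed and the names `rng`, `m_ref`, `c_ref`, and `mse_ref` are assumptions added for illustration and are not part of my original code.

```python
import numpy as np

# Fixed seed is an assumption, added only to make the sketch reproducible.
rng = np.random.RandomState(0)
x_data = rng.uniform(low=0, high=10, size=100)
y_data = 3.5 * x_data - 4 + rng.normal(loc=0, scale=2, size=100)

# Closed-form least-squares fit of a degree-1 polynomial: returns (slope, intercept).
m_ref, c_ref = np.polyfit(x_data, y_data, deg=1)

# Mean squared error of the fitted line; the noise term keeps this above zero.
residual = y_data - (m_ref * x_data + c_ref)
mse_ref = np.mean(residual ** 2)

print(m_ref, c_ref, mse_ref)
```

Because the noise has a standard deviation of 2, the best achievable mean squared error should be somewhere near 4 rather than exactly zero.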

Then, I define the computational graph:

    X = tf.placeholder(dtype=tf.float32, shape=100)
    Y = tf.placeholder(dtype=tf.float32, shape=100)

    m = tf.Variable(1.0)
    c = tf.Variable(1.0)

    Ypred = m * X + c
    loss = tf.reduce_mean(tf.square(Ypred - Y))

    optimizer = tf.train.GradientDescentOptimizer(learning_rate=.1)
    train = optimizer.minimize(loss)
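For comparison, the same plain gradient-descent update on this loss can be written out by hand in NumPy, which makes it possible to compare the loss trajectory for different learning rates without TensorFlow. This is a rough sketch, not my original code: the helper `run_gd`, the fixed seed, the step count of 20, and the second learning rate of 0.01 are all assumptions chosen for illustration.

```python
import numpy as np

# Fixed seed is an assumption, added only to make the sketch reproducible.
rng = np.random.RandomState(0)
x = rng.uniform(low=0, high=10, size=100)
y = 3.5 * x - 4 + rng.normal(loc=0, scale=2, size=100)


def run_gd(learning_rate, n_steps=20):
    """Plain gradient descent on mean((m*x + c - y)**2); returns the loss before each step."""
    m, c = 1.0, 1.0  # same initial values as the tf.Variable definitions
    losses = []
    for _ in range(n_steps):
        err = m * x + c - y
        losses.append(np.mean(err ** 2))
        # Analytic gradients of the mean squared error with respect to m and c.
        grad_m = 2.0 * np.mean(err * x)
        grad_c = 2.0 * np.mean(err)
        m -= learning_rate * grad_m
        c -= learning_rate * grad_c
    return losses


losses_large = run_gd(learning_rate=0.1)   # the value used in the graph above
losses_small = run_gd(learning_rate=0.01)  # hypothetical smaller value for comparison

print(losses_large[0], losses_large[-1])
print(losses_small[0], losses_small[-1])
```

The second derivative of this loss with respect to `m` is `2 * mean(x**2)`, which is fairly large for `x` in [0, 10], so the result is sensitive to the step size. The sketch uses float64 and does not try to reproduce TensorFlow's float32 arithmetic.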

Finally, I run it for 100 epochs:

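    # Session setup is not shown in the original snippet; assumed here.
    session = tf.Session()
    session.run(tf.global_variables_initializer())

    steps = {}
    steps['m'] = []
    steps['c'] = []
    losses = []

    for k in range(100):
        # Record the current parameters and loss before taking a step.
        _m = session.run(m)
        _c = session.run(c)
        _l = session.run(loss, feed_dict={X: x_data, Y: y_data})
        session.run(train, feed_dict={X: x_data, Y: y_data})
        steps['m'].append(_m)
        steps['c'].append(_c)
        losses.append(_l)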

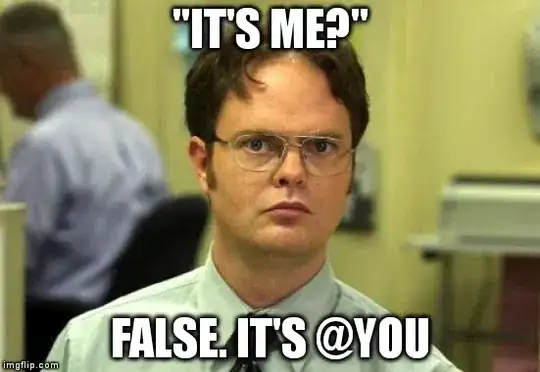

However, when I plot the losses, I get:

![Plot of losses](../../images/3817933957.webp)

The complete code can also be found here.