I am downloading Sentinel-2 images with the following code:

```python
import boto3

s3 = boto3.resource('s3', region_name='us-east-2')
bucket = s3.Bucket('sentinel-s2-l1c')

# Tile 36RUU (UTM zone 36, latitude band R, square UU), 2017-05-14, sequence 0
path = 'tiles/36/R/UU/2017/5/14/0/'

# Download the blue (B02), green (B03) and red (B04) bands
for band in ('B02', 'B03', 'B04'):
    obj = bucket.Object(path + band + '.jp2')
    obj.download_file(band + '.jp2')
```

and I get 3 grayscale JP2 images on disk.
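For other tiles or dates, the key can be built from its parts. This is a minimal sketch that assumes the layout `tiles/{utm_zone}/{latitude_band}/{grid_square}/{year}/{month}/{day}/{sequence}/`, inferred only from the single path above (month and day without zero padding). `tile_prefix` and `band_keys` are hypothetical helper names, not part of boto3.

```python
# Build S3 keys in the same layout as the path used in the question.
# Assumption: layout inferred from 'tiles/36/R/UU/2017/5/14/0/'.

def tile_prefix(utm_zone, latitude_band, grid_square, year, month, day, sequence=0):
    """Return the key prefix for one tile acquisition (no zero padding)."""
    return f"tiles/{utm_zone}/{latitude_band}/{grid_square}/{year}/{month}/{day}/{sequence}/"


def band_keys(prefix, bands=("B02", "B03", "B04")):
    """Return (s3_key, local_filename) pairs for the requested bands."""
    return [(prefix + band + ".jp2", band + ".jp2") for band in bands]


if __name__ == "__main__":
    prefix = tile_prefix(36, "R", "UU", 2017, 5, 14)
    for key, filename in band_keys(prefix):
        print(key, "->", filename)
        # With boto3, as in the question: bucket.Object(key).download_file(filename)
        # Assumption: if the bucket is Requester Pays (I believe it is now), pass
        # ExtraArgs={'RequestPayer': 'requester'} to download_file.
```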

Then I try to combine the bands into an RGB image with the following code:

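```python
import matplotlib.image as mpimg
import numpy as np
from PIL import Image

# The 10 m bands are 10980 x 10980 pixels, which exceeds Pillow's default
# MAX_IMAGE_PIXELS (decompression-bomb) limit, so raise it.
Image.MAX_IMAGE_PIXELS = 1000000000

print('Reading B04.jp2...')
img_red = mpimg.imread('B04.jp2')    # red
print('Reading B03.jp2...')
img_green = mpimg.imread('B03.jp2')  # green
print('Reading B02.jp2...')
img_blue = mpimg.imread('B02.jp2')   # blue

# Stack the bands into an (H, W, 3) RGB array
img = np.dstack((img_red, img_green, img_blue))

# Scale 16-bit values down to 8 bits (this assumes the full 0..65535 range is used)
img = np.divide(img, 256)
img = img.astype(np.uint8)

mpimg.imsave('MIX.jpeg', img, format='jpg')
```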

The result looks very poor: very dim and nearly black and white.

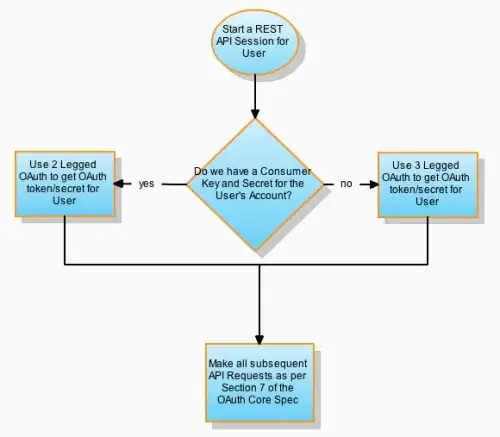

I would like something like this:

or like the preview,

while my version is:

(sorry)
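A likely reason for the dark result: dividing by 256 maps 0..65535 onto 0..255, which only fills the 8-bit range if the data actually use all 16 bits. If the pixel values only reach a few thousand, every channel stays close to 0. The sketch below uses made-up values (an assumption, not real Sentinel-2 data) to show the arithmetic of the question's scaling step.

```python
import numpy as np

# Hypothetical 16-bit band values. Assumption: they occupy only the low part
# of the uint16 range (here at most 4000), not the full 0..65535.
band = np.array([0, 500, 1000, 2000, 4000], dtype=np.uint16)

# Same scaling as in the question: divide by 256 and cast to uint8.
scaled = np.divide(band, 256).astype(np.uint8)

print(scaled.tolist())     # [0, 1, 3, 7, 15]
print(scaled.max() / 255)  # fraction of the 8-bit range actually used
```

Even the brightest of these pixels ends up at 15 out of 255, so the image is nearly black and the small differences between channels leave little visible color.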

UPDATE

I found that the images are probably 12-bit. When I scaled them as 12-bit, I got overexposure, so by experiment I found that the best quality comes from treating them as 14-bit.
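Treating the data as `bits`-bit amounts to dividing by `2 ** (bits - 8)`: 16 for 12 bits, 64 for 14 bits, 256 for the original 16. A minimal sketch of that idea follows; `effective_bits` and `scale_by_bits` are hypothetical helper names. One difference from the question's code: it clips to 0..255 before casting, because casting out-of-range floats to `uint8` is not well defined in NumPy (in practice it often wraps around), so some of the artifacts seen with 12 bits may be wraparound rather than true saturation.

```python
import numpy as np


def effective_bits(band):
    """Number of bits needed to represent the largest value in the band."""
    return int(band.max()).bit_length()


def scale_by_bits(img, bits):
    """Map values assumed to lie in 0..2**bits - 1 onto 0..255 as uint8.

    Values above the assumed range are clipped to 255 instead of being cast
    directly, which avoids wraparound speckles.
    """
    scaled = img.astype(np.float64) / 2 ** (bits - 8)
    return np.clip(scaled, 0, 255).astype(np.uint8)


# Hypothetical values, including one very bright pixel (20000).
band = np.array([0, 1000, 4000, 9000, 20000], dtype=np.uint16)
print(effective_bits(band))              # 15
print(scale_by_bits(band, 12).tolist())  # [0, 62, 250, 255, 255]
print(scale_by_bits(band, 14).tolist())  # [0, 15, 62, 140, 255]
```

Note that `effective_bits` is driven by the single brightest pixel, so a few very bright pixels can suggest a higher bit depth than most of the scene needs.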

UPDATE 2

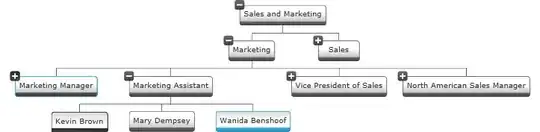

Even with 14 bits, though, some small areas are still overexposed, as in the Bahamas scene above.
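A common alternative to picking one fixed bit depth (a different technique from the one in the question) is a percentile stretch: map a low percentile of the data to 0 and a high percentile to 255, and clip the rest. A handful of very bright pixels then saturate without dictating the scale for the whole scene. This is a minimal sketch; `percentile_stretch` and its 2/98 defaults are illustrative assumptions, not tuned values.

```python
import numpy as np


def percentile_stretch(img, low=2.0, high=98.0):
    """Linearly stretch an array to uint8 between two percentiles.

    The percentiles are computed over all channels together, so the relative
    brightness of the red, green and blue bands is preserved.
    """
    img = img.astype(np.float64)
    lo, hi = np.percentile(img, (low, high))
    span = max(hi - lo, 1e-12)  # avoid division by zero for flat images
    stretched = (img - lo) / span * 255.0
    return np.clip(stretched, 0, 255).astype(np.uint8)
```

With the arrays from the question this would be used as `img = percentile_stretch(np.dstack((img_red, img_green, img_blue)))` in place of the divide-and-cast step. Stretching each band separately is also possible, but it can shift the color balance.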