I am using VirtualBox to run an Ubuntu 14 VM on a Windows laptop. I have configured the Apache distribution of HDFS and YARN for a single node. When I start DFS and YARN, all the required daemons are running. If I don't configure YARN and run only DFS, I can execute a MapReduce job successfully, but when I run YARN as well, the job gets stuck in the ACCEPTED state. I have tried changing the node's memory settings in several ways, but with no luck. I followed this guide to set up the single node: https://hadoop.apache.org/docs/r2.8.0/hadoop-project-dist/hadoop-common/SingleCluster.html

Settings of core-site.xml:

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
```

Settings of hdfs-site.xml:

```xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>/home/shaileshraj/hadoop/name/data</value>
  </property>
</configuration>
```

Settings of mapred-site.xml:

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
```

Settings of yarn-site.xml:

```xml
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
<property>
  <name>yarn.nodemanager.resource.memory-mb</name>
  <value>2200</value>
  <description>Amount of physical memory, in MB, that can be allocated for containers.</description>
</property>
<property>
  <name>yarn.scheduler.minimum-allocation-mb</name>
  <value>500</value>
</property>
```

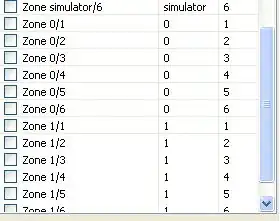

Here is the Application Master screen of the RM Web UI. From what I can see, the AM container is not allocated; maybe that is the problem.
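
For reference, here is a rough sketch of the memory arithmetic implied by the yarn-site.xml values above. It assumes the scheduler rounds each container request up to a multiple of `yarn.scheduler.minimum-allocation-mb`, and it assumes the stock defaults of 1536 MB for `yarn.app.mapreduce.am.resource.mb` and 1024 MB for `mapreduce.map.memory.mb`, since neither is set in the configuration shown. The helper `normalize_request` is a hypothetical name for illustration, not a Hadoop API. The numbers alone do not explain why the AM container is not allocated; they only show how little room is left on the node.

```python
def normalize_request(requested_mb: int, min_alloc_mb: int) -> int:
    """Round a request up to the next multiple of the minimum allocation.

    Hypothetical helper: an approximation of how the scheduler is assumed
    to normalize container sizes, not actual Hadoop code.
    """
    multiples = (requested_mb + min_alloc_mb - 1) // min_alloc_mb
    return max(min_alloc_mb, multiples * min_alloc_mb)


# Values taken from the yarn-site.xml in the question.
node_memory_mb = 2200   # yarn.nodemanager.resource.memory-mb
min_alloc_mb = 500      # yarn.scheduler.minimum-allocation-mb

# Assumed defaults (not set anywhere in the posted configuration).
am_request_mb = 1536    # yarn.app.mapreduce.am.resource.mb
task_request_mb = 1024  # mapreduce.map.memory.mb

am_container_mb = normalize_request(am_request_mb, min_alloc_mb)
task_container_mb = normalize_request(task_request_mb, min_alloc_mb)
left_after_am_mb = node_memory_mb - am_container_mb

print("AM container size (MB):", am_container_mb)
print("Map task container size (MB):", task_container_mb)
print("Memory left after the AM (MB):", left_after_am_mb)
print("Room for a map task after the AM:", left_after_am_mb >= task_container_mb)
```

Under these assumptions the AM request on its own fits within the 2200 MB node, but the remaining memory is smaller than a single normalized map container, so the node's memory or the per-container requests would likely need adjusting either way.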