I am using the librosa library for pitch and onset detection, specifically `onset_detect` and `piptrack`.

This is my code:

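```python
import librosa
from scipy import signal


def detect_pitch(y, sr, onset_offset=5, fmin=75, fmax=1400):
    # Remove low-frequency rumble before analysis
    y = highpass_filter(y, sr)

    # Onset positions, in STFT frames
    onset_frames = librosa.onset.onset_detect(y=y, sr=sr)

    # Candidate pitches and their magnitudes, shape (n_bins, n_frames)
    pitches, magnitudes = librosa.piptrack(y=y, sr=sr, fmin=fmin, fmax=fmax)

    notes = []
    for frame in onset_frames:
        # Look a few frames after the onset, but never past the last frame
        onset = min(frame + onset_offset, magnitudes.shape[1] - 1)
        # Take the pitch of the strongest bin in that frame
        index = magnitudes[:, onset].argmax()
        pitch = pitches[index, onset]
        if pitch != 0:
            notes.append(librosa.hz_to_note(pitch))

    return notes


def highpass_filter(y, sr):
    filter_stop_freq = 70   # Hz
    filter_pass_freq = 100  # Hz
    filter_order = 1001     # number of taps (firls needs an odd count)

    # High-pass filter: zero gain up to 70 Hz, unity gain from 100 Hz to Nyquist
    nyquist_rate = sr / 2.
    desired = (0, 0, 1, 1)
    bands = (0, filter_stop_freq, filter_pass_freq, nyquist_rate)
    # `fs` replaces the deprecated `nyq` argument of scipy.signal.firls
    filter_coefs = signal.firls(filter_order, bands, desired, fs=sr)

    # Apply the filter forwards and backwards (zero phase shift)
    filtered_audio = signal.filtfilt(filter_coefs, [1], y)

    return filtered_audio
```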
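For reference, `onset_offset` is measured in STFT frames, not seconds. A quick conversion, assuming the audio was loaded with `librosa.load`'s default rate of 22050 Hz and that `onset_detect` and `piptrack` both use their default hop length of 512 samples:

```python
# Assumed defaults: librosa.load resamples to 22050 Hz, and both
# onset_detect and piptrack use a hop length of 512 samples.
sr = 22050
hop_length = 512
onset_offset = 5  # frames, as in detect_pitch

# Each frame advances by hop_length samples
offset_seconds = onset_offset * hop_length / sr
print(f"onset_offset = {onset_offset} frames, about {offset_seconds * 1000:.0f} ms")
```

If the file is loaded at its native rate instead (e.g. 44100 Hz with `sr=None`), the same 5 frames cover only about half as much time.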

When I run this on guitar samples recorded in a studio, i.e. samples without noise (like this), both functions give very good results: the onset times are correct, and the frequencies are almost always correct, apart from occasional octave errors.

However, a big problem arises when I record my own guitar with my cheap microphone. The resulting audio files contain noise, such as this. `onset_detect` gets confused and treats peaks in the noise as onsets, so the results are very poor: I get many onset times even when the file contains a single note.
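One common heuristic for this symptom is to discard onsets that fall where the signal is quiet, assuming the noise peaks are noticeably weaker than the attack of a plucked note. The sketch below is hypothetical and not part of librosa: `frame_rms` and `filter_onsets_by_energy` are illustrative names, the window is centred on each frame index (matching librosa's default centred framing with a hop of 512), and the 10 % threshold is an arbitrary assumption to tune per recording.

```python
import numpy as np


def frame_rms(y, frames, hop_length=512, frame_length=2048):
    """RMS of a window centred on each frame index."""
    half = frame_length // 2
    rms = []
    for f in frames:
        centre = int(f) * hop_length
        segment = y[max(0, centre - half):centre + half]
        rms.append(np.sqrt(np.mean(segment ** 2)) if segment.size else 0.0)
    return np.array(rms)


def filter_onsets_by_energy(y, onset_frames, rel_threshold=0.1,
                            hop_length=512, frame_length=2048):
    """Keep only onsets whose local RMS reaches rel_threshold * the loudest frame's RMS."""
    onset_frames = np.asarray(onset_frames, dtype=int)
    if onset_frames.size == 0:
        return onset_frames
    # Reference level: the loudest frame anywhere in the signal
    all_frames = np.arange(len(y) // hop_length + 1)
    reference = frame_rms(y, all_frames, hop_length, frame_length).max()
    # Local level around each detected onset
    energies = frame_rms(y, onset_frames, hop_length, frame_length)
    return onset_frames[energies >= rel_threshold * reference]
```

It would be applied to the output of `onset_detect`, e.g. `onset_frames = filter_onsets_by_energy(y, onset_frames)`, before the pitches are looked up.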

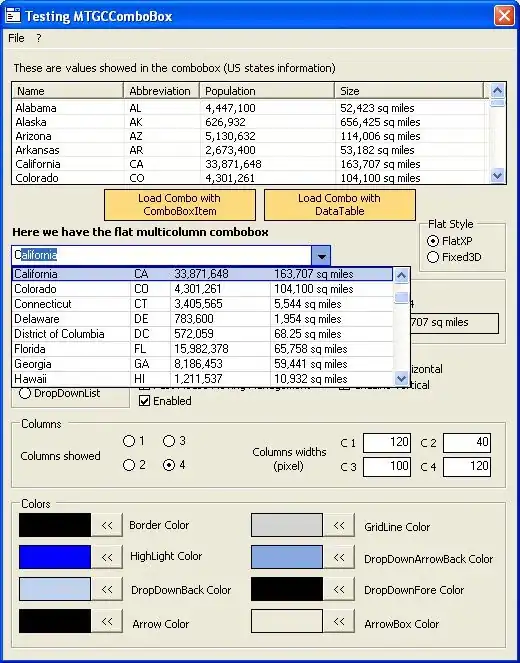

Above are two waveforms. The first is a studio recording of a guitar playing B3; the second is my own recording of an E2 note.

For the first, the result is correctly B3 (a single onset was detected). For the second, the result is an array of 7 elements, i.e. 7 onsets were detected instead of 1. One of them is the correct onset; the others are just random peaks in the noise.
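When a recording is known to contain few, widely spaced notes, spurious onsets can also be thinned out by enforcing a minimum gap between them. `librosa.onset.onset_detect` forwards extra keyword arguments to `librosa.util.peak_pick`, whose `wait` and `delta` parameters control the minimum spacing between peaks and how far a peak must rise above the local average, so tuning those is the first thing to try. The post-processing sketch below (the name `enforce_min_gap` is hypothetical) shows the same minimum-gap idea in isolation, working on frame indices:

```python
def enforce_min_gap(onset_frames, min_gap):
    """Keep an onset only if it is at least min_gap frames after the last kept one."""
    kept = []
    for f in sorted(onset_frames):
        if not kept or f - kept[-1] >= min_gap:
            kept.append(int(f))
    return kept


print(enforce_min_gap([3, 5, 20, 22, 40], min_gap=10))
```

With a hop of 512 samples at 22050 Hz, a gap of 10 frames is roughly 0.23 s.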

Another example is this audio file containing the notes B3, C4, D4, E4:

As you can see, the noise is clearly visible, and my high-pass filter has not helped (this is the waveform after filtering).
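A rough estimate of why the high-pass filter barely changes anything: it only attenuates content below about 70 to 100 Hz, whereas hiss tends to be spread across the whole spectrum. Assuming the noise is approximately white, the share of its energy below the 100 Hz pass-band edge is only about 100 / 11025 of the total at 22050 Hz, which is the most such a filter could remove. The helper name `fraction_of_energy_below` is illustrative:

```python
import numpy as np


def fraction_of_energy_below(x, sr, cutoff_hz):
    """Share of the signal's energy that lies below cutoff_hz."""
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / sr)
    return power[freqs < cutoff_hz].sum() / power.sum()


# Assumption: the microphone noise is roughly white (flat spectrum)
sr = 22050
rng = np.random.default_rng(0)
noise = rng.standard_normal(5 * sr)  # 5 s of synthetic white noise

frac = fraction_of_energy_below(noise, sr, 100.0)
print(f"energy below 100 Hz: {frac:.2%}")  # close to 100 / 11025, i.e. about 0.9 %
```

Low-frequency hum would be a different story, but broadband noise passes through such a filter almost untouched.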

I assume the noise is the problem, since that is where the files differ. If so, what can I do to reduce it? I have tried a high-pass filter, but it made no difference.
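One standard way to reduce stationary broadband noise is spectral subtraction: estimate the average noise magnitude spectrum from a noise-only stretch of the recording, subtract it from every STFT frame, and resynthesise with the original phase. The sketch below implements that with plain NumPy; it is not the librosa API, the function names are hypothetical, and it assumes the recording contains a noise-only segment at least `n_fft` samples long (for example the silence before the first note).

```python
import numpy as np


def _stft(x, n_fft, hop, window):
    """Windowed FFT frames of x and the start sample of each frame."""
    starts = np.arange(0, len(x) - n_fft + 1, hop)
    return np.array([np.fft.rfft(x[s:s + n_fft] * window) for s in starts]), starts


def spectral_subtract(y, noise_clip, n_fft=2048, hop=512):
    """Subtract the average noise magnitude spectrum (noise_clip needs >= n_fft samples)."""
    window = 0.5 - 0.5 * np.cos(2 * np.pi * np.arange(n_fft) / n_fft)  # periodic Hann

    # Noise profile: mean magnitude per frequency bin over the noise-only clip
    noise_spec, _ = _stft(noise_clip, n_fft, hop, window)
    noise_mag = np.abs(noise_spec).mean(axis=0)

    # Pad so the first and last samples are covered by full frames
    padded = np.pad(y, (n_fft, n_fft))
    spec, starts = _stft(padded, n_fft, hop, window)

    # Subtract the noise magnitude, clip at zero, keep the original phase
    mag = np.maximum(np.abs(spec) - noise_mag, 0.0)
    cleaned = mag * np.exp(1j * np.angle(spec))

    # Weighted overlap-add, normalised by the summed squared window
    out = np.zeros(len(padded))
    norm = np.zeros(len(padded))
    for frame, s in zip(cleaned, starts):
        out[s:s + n_fft] += np.fft.irfft(frame, n=n_fft) * window
        norm[s:s + n_fft] += window ** 2
    out /= np.maximum(norm, 1e-8)
    return out[n_fft:n_fft + len(y)]
```

For example, `y_clean = spectral_subtract(y, y[:sr // 2])` if the first half-second is silence, followed by `detect_pitch(y_clean, sr)`. Plain subtraction can leave "musical noise" artefacts; subtracting a slightly scaled-up noise profile is a common variation, at the cost of some signal.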