NB: The YUV <-> RGB conversions in OpenCV versions prior to 3.2.0 are buggy! For one, in many cases the order of the U and V channels was swapped. As far as I can tell, the 2.x branch is still broken as of the 2.4.13.2 release.
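If you need to check whether a particular build has the U/V swap, one option is to compare its output for a few known pixels against a hand-computed reference. The sketch below is NumPy-only and assumes the analog BT.601-style coefficients (Y = 0.299R + 0.587G + 0.114B, U = 0.492(B - Y) + 128, V = 0.877(R - Y) + 128); OpenCV's fixed-point rounding may differ by a level or so, so compare which side of 128 each value falls on rather than exact numbers. The function name `bgr_to_yuv_reference` is hypothetical.

```python
import numpy as np

def bgr_to_yuv_reference(bgr):
    """Reference BGR -> YUV for 8-bit images (assumed BT.601 coefficients, offset 128)."""
    bgr = np.asarray(bgr, dtype=np.float64)
    b, g, r = bgr[..., 0], bgr[..., 1], bgr[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = 0.492 * (b - y) + 128.0   # U grows with blue
    v = 0.877 * (r - y) + 128.0   # V grows with red
    yuv = np.stack([y, u, v], axis=-1)
    return np.clip(np.rint(yuv), 0, 255).astype(np.uint8)

# A 1x2 image holding pure blue and pure red (BGR order).
pixels = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
ref = bgr_to_yuv_reference(pixels)
blue_yuv, red_yuv = ref[0, 0], ref[0, 1]
# Expected pattern: blue has U > 128 and V < 128; red has V > 128 and U < 128.
```

If `cv2.cvtColor(pixels, cv2.COLOR_BGR2YUV)` shows the opposite pattern in channels 1 and 2, that build has the U and V planes swapped.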

The reason they appear grayscale is that by splitting the 3-channel YUV image, you created three 1-channel images. Since the data structures holding the pixels store no information about what the values represent, `imshow` treats any 1-channel image as grayscale for display. Similarly, it treats any 3-channel image as BGR.
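To make the "no metadata" point concrete, here is a NumPy-only sketch on a tiny made-up 2x2 image. Slicing the last axis gives the same three H x W planes that `cv2.split` returns (as views rather than copies), and stacking one plane three times is what `cv2.cvtColor(..., cv2.COLOR_GRAY2BGR)` does. Equal B, G and R values are gray by definition, which is why the planes can only ever display as grayscale.

```python
import numpy as np

# A tiny 2x2 "YUV" image: H x W x 3, dtype uint8. Values are arbitrary.
img_yuv = np.array([[[50, 100, 200], [60, 110, 190]],
                    [[70, 120, 180], [80, 130, 170]]], dtype=np.uint8)

# Equivalent of cv2.split: three H x W arrays. Nothing in them records
# that they were "Y", "U" or "V"; they are just 2-D arrays of numbers.
y, u, v = (img_yuv[..., i] for i in range(3))

# Equivalent of cv2.cvtColor(u, cv2.COLOR_GRAY2BGR): the single channel
# copied into B, G and R, so every pixel has B == G == R (a shade of gray).
u_bgr = np.dstack([u, u, u])
```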

What you see in the Wikipedia example is a false-color rendering of the chrominance channels. To achieve this, you need to either apply a predefined colormap or use a custom look-up table (LUT). This maps the U and V values to appropriate BGR values, which can then be displayed.
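A LUT is just a table indexed by pixel value: entry k holds the output color for input value k. With a 256 x 3 table, NumPy fancy indexing maps a 1-channel uint8 image straight to a 3-channel BGR image. This is a sketch of the idea, not `cv2.LUT` itself (which requires the table to have either one channel or the same number of channels as the source); the helper name `apply_lut` and the example ramp are hypothetical.

```python
import numpy as np

def apply_lut(channel, lut):
    """Map a 1-channel uint8 image through a LUT of shape (256, 3) or (1, 256, 3).

    Hypothetical helper: returns an H x W x 3 image where each output pixel
    is the table row for the corresponding input value.
    """
    table = np.asarray(lut).reshape(256, -1)   # accept the (1, 256, 3) layout too
    return table[channel]                      # fancy indexing: (H, W) -> (H, W, 3)

# Example: an illustrative dark-to-red ramp in BGR order.
lut = np.array([[i // 4, i // 2, i] for i in range(256)], dtype=np.uint8)
chan = np.array([[0, 128], [255, 64]], dtype=np.uint8)
colored = apply_lut(chan, lut)
```

Here `colored[0, 1]` is `lut[128]`, i.e. `[32, 64, 128]`.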

As it turns out, the colormaps used for the Wikipedia example are rather simple.

Colormap for U channel

Simple progression between green and blue:

```python
colormap_u = np.array([[[i,255-i,0] for i in range(256)]],dtype=np.uint8)
```
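Because OpenCV stores pixels as BGR, the triple `[i, 255-i, 0]` means blue = i, green = 255 - i, red = 0: U = 0 maps to pure green and U = 255 to pure blue. The same table can be built without a Python loop; this vectorized version is an equivalent alternative, not what the answer itself uses.

```python
import numpy as np

i = np.arange(256, dtype=np.uint8)
# Stack B, G, R columns: B rises with U, G falls, R stays 0.
colormap_u = np.stack([i, 255 - i, np.zeros_like(i)], axis=-1)[np.newaxis]  # shape (1, 256, 3)

# The list-comprehension version, for comparison.
reference = np.array([[[k, 255 - k, 0] for k in range(256)]], dtype=np.uint8)
```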

Colormap for V channel

Simple progression between green and red:

```python
colormap_v = np.array([[[0,255-i,i] for i in range(256)]],dtype=np.uint8)
```
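The two tables differ only in which channel rises: green always falls from 255 to 0, while blue rises for U and red rises for V. A small helper makes that explicit. `make_green_ramp` is a hypothetical name; channel index 0 is blue and 2 is red in OpenCV's BGR order.

```python
import numpy as np

def make_green_ramp(rising_channel):
    """Build a (1, 256, 3) BGR LUT where green falls 255 -> 0 and one channel rises 0 -> 255.

    rising_channel: 0 for blue (U colormap) or 2 for red (V colormap).
    Passing 1 would overwrite the falling green ramp, so it is not meaningful here.
    """
    lut = np.zeros((1, 256, 3), dtype=np.uint8)
    ramp = np.arange(256, dtype=np.uint8)
    lut[0, :, 1] = 255 - ramp          # green falls
    lut[0, :, rising_channel] = ramp   # blue or red rises
    return lut

colormap_u = make_green_ramp(0)
colormap_v = make_green_ramp(2)
```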

Visualizing YUV Like the Example

Now we can put it all together to recreate the example:

```python
import cv2
import numpy as np

def make_lut_u():
    return np.array([[[i,255-i,0] for i in range(256)]],dtype=np.uint8)

def make_lut_v():
    return np.array([[[0,255-i,i] for i in range(256)]],dtype=np.uint8)

img = cv2.imread('shed.png')

img_yuv = cv2.cvtColor(img, cv2.COLOR_BGR2YUV)
y, u, v = cv2.split(img_yuv)

lut_u, lut_v = make_lut_u(), make_lut_v()

# Convert back to BGR so we can apply the LUT and stack the images
y = cv2.cvtColor(y, cv2.COLOR_GRAY2BGR)
u = cv2.cvtColor(u, cv2.COLOR_GRAY2BGR)
v = cv2.cvtColor(v, cv2.COLOR_GRAY2BGR)

u_mapped = cv2.LUT(u, lut_u)
v_mapped = cv2.LUT(v, lut_v)

result = np.vstack([img, y, u_mapped, v_mapped])

cv2.imwrite('shed_combo.png', result)
```
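The conversion back to BGR before calling `cv2.LUT` matters because, with a 3-channel table, `cv2.LUT` looks up each source channel in the matching table channel; it does not expand a 1-channel image into three. After `COLOR_GRAY2BGR` all three source channels hold the same value, so the per-channel lookup returns exactly the table's BGR triple for that value. The NumPy sketch below emulates that per-channel behavior on a tiny made-up V plane (`lut_per_channel` is a hypothetical name, not an OpenCV function) and checks it against direct indexing.

```python
import numpy as np

def lut_per_channel(src, lut):
    """Emulate cv2.LUT for a 3-channel uint8 src and a (1, 256, 3) table:
    out[..., c] = lut[0, src[..., c], c] for each channel c."""
    out = np.empty_like(src)
    for c in range(src.shape[-1]):
        out[..., c] = lut[0, src[..., c], c]
    return out

lut_v = np.array([[[0, 255 - i, i] for i in range(256)]], dtype=np.uint8)
v = np.array([[0, 100], [200, 255]], dtype=np.uint8)   # a tiny 1-channel V plane
v_bgr = np.dstack([v, v, v])                             # what COLOR_GRAY2BGR does
v_mapped = lut_per_channel(v_bgr, lut_v)

# Since B == G == R in v_bgr, each pixel picks up the whole triple lut_v[0, value].
direct = lut_v[0][v]
```

A single-channel table, by contrast, would apply one mapping to all three channels and could only ever produce grays.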

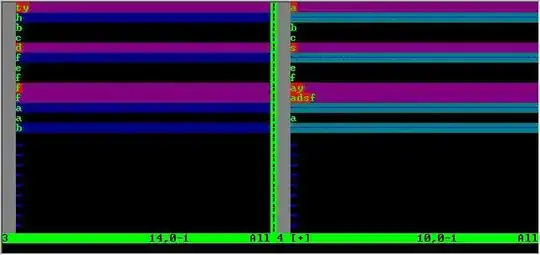

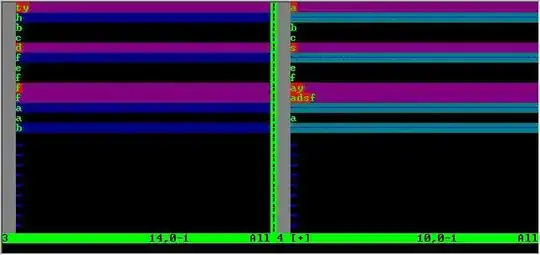

Result: