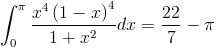

I am parsing data with a MapReduce job, and the parsed output arrives in batches. A Spark Streaming job then loads each batch into a Hive external table. This is a real-time process. Today I hit an unusual failure: a _temporary directory was left in the output location, and the load into the Hive table failed because a directory cannot be loaded into a Hive table. It has happened only once, and the rest of the jobs are running fine. Please refer to the screenshot.

The _temporary directory contains sub-directories named after task IDs, all of which are empty. Can anyone help me resolve this so that it can be avoided in the future?
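For reference, here is a minimal sketch of the kind of guard I could add on the loading side. It assumes the loader can list the output location before loading. It skips any entry whose name starts with `_` or `.` (the convention Hadoop input formats use for hidden paths) and treats a batch as complete only when the `_SUCCESS` marker is present, which the default MapReduce output committer writes on job commit unless that is disabled. The paths and function names are hypothetical, and the listing is a plain list of strings rather than a real HDFS call.

```python
from pathlib import PurePosixPath


def is_hidden(path, output_dir):
    """True if any component below output_dir starts with '_' or '.'."""
    rel = PurePosixPath(path).relative_to(PurePosixPath(output_dir))
    return any(part.startswith(("_", ".")) for part in rel.parts)


def loadable_paths(paths, output_dir):
    """Keep only entries that are safe to hand to the Hive load step."""
    return [p for p in paths if not is_hidden(p, output_dir)]


def batch_is_committed(paths, output_dir):
    """Assumption: the job writes a _SUCCESS marker when it commits."""
    marker = PurePosixPath(output_dir) / "_SUCCESS"
    return marker in {PurePosixPath(p) for p in paths}


# Hypothetical listing of one output location, similar to the screenshot.
output_dir = "/data/out"
listing = [
    "/data/out/part-r-00000",
    "/data/out/_temporary",
    "/data/out/_temporary/0",
    "/data/out/_SUCCESS",
]

if batch_is_committed(listing, output_dir):
    for path in loadable_paths(listing, output_dir):
        print("would load:", path)
```

This only works around the symptom on the loading side. It does not explain why the _temporary directory was left behind in that one run.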