I am working on HDFS and have set the replication factor to 1 in hdfs-site.xml as follows:

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>/Users/***/Documnent/hDir/hdfs/namenode</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>/Users/***/Documnent/hDir/hdfs/datanode</value>
      </property>
      <property>
        <name>dfs.permissions</name>
        <value>false</value>
      </property>
    </configuration>
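
To confirm that the edited file is well-formed and really declares `dfs.replication` as 1, a small JDK-only check can parse it and look up the property. This is a minimal sketch, not Hadoop's own configuration parser; the class `SiteXmlCheck` and its `readProperty` helper are hypothetical names, and the path to `hdfs-site.xml` is assumed to be passed as the first argument.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

public class SiteXmlCheck {

    // Returns the <value> of the <property> whose <name> matches, or null if none does.
    // If the same name appears more than once, the last occurrence is returned.
    static String readProperty(String xml, String name) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Not well-formed XML: " + e.getMessage(), e);
        }
        String result = null;
        NodeList props = doc.getElementsByTagName("property");
        for (int i = 0; i < props.getLength(); i++) {
            Element prop = (Element) props.item(i);
            NodeList names = prop.getElementsByTagName("name");
            NodeList values = prop.getElementsByTagName("value");
            if (names.getLength() > 0 && values.getLength() > 0
                    && names.item(0).getTextContent().trim().equals(name)) {
                result = values.item(0).getTextContent().trim();
            }
        }
        return result;
    }

    public static void main(String[] args) throws IOException {
        // Path to the edited hdfs-site.xml (assumed to be the first argument).
        String path = args.length > 0 ? args[0] : "hdfs-site.xml";
        String xml = new String(Files.readAllBytes(Paths.get(path)), StandardCharsets.UTF_8);
        System.out.println("dfs.replication = " + readProperty(xml, "dfs.replication"));
    }
}
```

Run it as `java SiteXmlCheck /path/to/hdfs-site.xml`; for the configuration shown above it should print `dfs.replication = 1`. This only checks what the file declares, not which file the Hadoop client actually loads.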

But when I copied a file from the local file system to HDFS, the replication factor of that file was 3. Here is the code that copies the file to HDFS:

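    import java.io.BufferedInputStream;
    import java.io.FileInputStream;
    import java.io.InputStream;
    import java.io.OutputStream;
    import java.net.URI;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;
    import org.apache.hadoop.util.Progressable;

    public class FileCopyWithWrite {

        public static void main(String[] args) {
            String localSrc = "/Users/***/Documents/hContent/input/docs/1400-8.txt";
            String dst = "hdfs://localhost/books/1400-8.txt";
            try {
                InputStream in = new BufferedInputStream(new FileInputStream(localSrc));
                // Loads the Hadoop configuration resources found on the classpath
                Configuration conf = new Configuration();
                FileSystem fs = FileSystem.get(URI.create(dst), conf);
                // Create the destination file, printing a dot each time progress is reported
                OutputStream out = fs.create(new Path(dst), new Progressable() {
                    public void progress() {
                        System.out.println(".");
                    }
                });
                // Copy with a 4092-byte buffer and close both streams when done
                IOUtils.copyBytes(in, out, 4092, true);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }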
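
A possible check: Hadoop's `Configuration` reads files such as `core-site.xml` and `hdfs-site.xml` as classpath resources, so if the program above is launched with a classpath that does not include Hadoop's configuration directory (for example, from an IDE run configuration), the edited `hdfs-site.xml` might not be seen by the client at all. The JDK-only sketch below reports whether those files are visible on the current classpath. The class name `ClasspathCheck` is hypothetical, and the sketch only checks visibility, not which values end up in effect.

```java
import java.net.URL;

public class ClasspathCheck {

    // Returns the URL where the named resource was found on the classpath,
    // or null if it is not visible. Uses the thread's context class loader,
    // falling back to the system class loader.
    static URL locate(String resourceName) {
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        if (loader == null) {
            loader = ClassLoader.getSystemClassLoader();
        }
        return loader.getResource(resourceName);
    }

    public static void main(String[] args) {
        for (String name : new String[] {"core-site.xml", "hdfs-site.xml"}) {
            URL url = locate(name);
            System.out.println(name + " -> " + (url == null ? "NOT on classpath" : url));
        }
    }
}
```

Running it with exactly the same classpath as `FileCopyWithWrite` shows whether that program can find the edited `hdfs-site.xml`.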

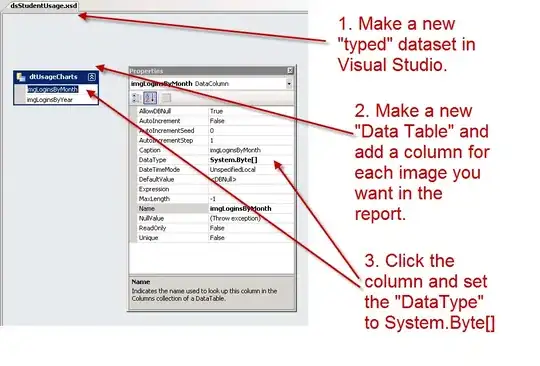

Please find this screenshot showing the replication factor as 3:

Note that I started Hadoop in pseudo-distributed mode and updated hdfs-site.xml according to the documentation in the book Hadoop: The Definitive Guide. Any suggestions on why this is happening?