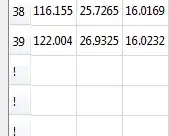

Has anyone experienced something similar, or can anyone point to where the issue might be in the code below? For some reason, transparent "dots" appear in the screenshot. It doesn't happen on every frame, maybe on every second one.

I'm seeing some weird behaviour with the Adreno 530 GPU (LG G6, Google Pixel); other GPUs work fine. I think it's a threading issue, because everything works fine on the main thread. Here's the deal.

I have a native Android plugin that takes screenshots of a Unity project (OpenGL ES 3). A second OpenGL context, shared with Unity's context, is created on a background thread. The constructor looks like this:

        this.handlerThread = new HandlerThread("UnityScreenCapture");
        this.handlerThread.start();
        this.handler = new Handler(handlerThread.getLooper());

        final EGLContext currentContext = EGL14.eglGetCurrentContext();
        final EGLDisplay currentDisplay = EGL14.eglGetCurrentDisplay();

        final int[] eglContextClientVersion = new int[1];
        eglQueryContext(currentDisplay,
                currentContext,
                EGL14.EGL_CONTEXT_CLIENT_VERSION,
                eglContextClientVersion,
                0
        );

        this.handler.post(new Runnable() {
            @Override
            public void run() {
                final EGLDisplay eglDisplay = eglGetDisplay(EGL14.EGL_DEFAULT_DISPLAY);
                final int[] version = new int[2];
                eglInitialize(eglDisplay, version, 0, version, 1);

                int[] configAttributes = {EGL14.EGL_COLOR_BUFFER_TYPE,
                        EGL14.EGL_RGB_BUFFER,
                        EGL14.EGL_LEVEL,
                        0,
                        EGL14.EGL_RENDERABLE_TYPE,
                        EGL14.EGL_OPENGL_ES2_BIT,
                        EGL14.EGL_SURFACE_TYPE,
                        EGL14.EGL_PBUFFER_BIT,
                        EGL14.EGL_NONE};

                final EGLConfig[] eglConfigs = new EGLConfig[1];
                final int[] numConfig = new int[1];
                eglChooseConfig(eglDisplay, configAttributes, 0, eglConfigs, 0, 1, numConfig, 0);
                final EGLConfig eglConfig = eglConfigs[0];

                int[] surfaceAttributes = {EGL14.EGL_WIDTH, 1, EGL14.EGL_HEIGHT, 1, EGL14.EGL_NONE};
                EGLSurface eglSurface = eglCreatePbufferSurface(eglDisplay,
                        eglConfig,
                        surfaceAttributes,
                        0
                );

                int[] contextAttributes = {EGL14.EGL_CONTEXT_CLIENT_VERSION,
                        eglContextClientVersion[0],
                        EGL14.EGL_NONE};
                final EGLContext eglContext = eglCreateContext(eglDisplay,
                        eglConfig,
                        currentContext,
                        contextAttributes,
                        0
                );

                eglMakeCurrent(eglDisplay, eglSurface, eglSurface, eglContext);
            }
        });
    }

Then, when taking a screenshot, I post to the same background thread to avoid blocking the main thread:

    handler.post(new Runnable() {
        @Override
        public void run() {
            frame.setBytes(native_readFrame(frame.getBytes()));
            callback.capturedFrame(frame);
        }
    });

native_readFrame looks like this (stripped down):

    mcluint texture = capture_context->texture;

    if (capture_context->framebuffer == 0) {
        glBindTexture(GL_TEXTURE_2D, texture);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);

        mcluint framebuffer;

        // Generate framebuffer
        glGenFramebuffers(1, &framebuffer);

        // Bind texture to framebuffer
        glBindFramebuffer(GL_FRAMEBUFFER, framebuffer);
        glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, texture, 0);

        capture_context->framebuffer = framebuffer;
    }

    int width = capture_context->width;
    int height = capture_context->height;
    rect_t crop = capture_context->crop;

    glBindTexture(GL_TEXTURE_2D, texture);
    glBindFramebuffer(GL_FRAMEBUFFER, capture_context->framebuffer);

    mclbyte *pixels = NULL;
    if (dst != NULL) {
        pixels = *dst;
    } else {
        pixels = (mclbyte *) malloc(crop.width * crop.height * sizeof(mclbyte) * 4);
    }

    glReadPixels(crop.x, crop.y, width, height, GL_RGBA, GL_UNSIGNED_BYTE, pixels);

    if (length != NULL) {
        *length = crop.width * crop.height * sizeof(mclbyte) * 4;
    }

    return pixels;

UPDATE: If I call glFinish() on the main thread right before readFrame, it works. But glFinish() is a heavy operation that blocks the main thread for 10 ms, which is not ideal.
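
Since glFinish() on the main thread helps, one alternative that might be worth trying is a fence sync object: the rendering thread inserts a fence after its draw calls and flushes, and the capture thread waits on that fence before calling glReadPixels, so the blocking wait moves off the main thread. Below is a minimal sketch of that handoff, not a verified fix for the Adreno 530. `GlSyncApi` and `FrameFence` are hypothetical names introduced only for illustration; on Android the interface methods would presumably delegate to `GLES30.glFenceSync`, `GLES20.glFlush`, `GLES30.glClientWaitSync` and `GLES30.glDeleteSync`. It assumes both contexts are in the same share group, as in the constructor above.

```java
import java.util.concurrent.atomic.AtomicLong;

/**
 * Hypothetical thin wrapper so the handoff logic can be shown without Android
 * classes. On a device, each method would delegate to the GLES call named in
 * the comment next to it.
 */
interface GlSyncApi {
    long fenceSync();    // GLES30.glFenceSync(GL_SYNC_GPU_COMMANDS_COMPLETE, 0)
    void flush();        // GLES20.glFlush()
    boolean clientWaitSync(long sync, long timeoutNanos); // true once the fence is signaled
    void deleteSync(long sync);                           // GLES30.glDeleteSync(sync)
}

/** Passes at most one pending fence from the render thread to the capture thread. */
final class FrameFence {
    private final GlSyncApi gl;
    private final AtomicLong pending = new AtomicLong(0L); // 0 means "no fence"

    FrameFence(GlSyncApi gl) {
        this.gl = gl;
    }

    /** Render thread: call after the frame's draw calls have been issued. */
    void insertOnRenderThread() {
        long sync = gl.fenceSync();
        // Flush so the fence is actually submitted; a wait in another context
        // does not flush this context's command stream.
        gl.flush();
        long old = pending.getAndSet(sync);
        if (old != 0L) {
            gl.deleteSync(old); // an older fence nobody waited on
        }
    }

    /**
     * Capture thread: call right before glReadPixels.
     * Returns false if no fence was inserted or the wait did not complete.
     */
    boolean awaitOnCaptureThread(long timeoutNanos) {
        long sync = pending.getAndSet(0L);
        if (sync == 0L) {
            return false;
        }
        try {
            return gl.clientWaitSync(sync, timeoutNanos);
        } finally {
            gl.deleteSync(sync);
        }
    }
}
```

In this sketch, `insertOnRenderThread()` would run where glFinish() is currently called, and the Runnable posted to the handler would call `awaitOnCaptureThread(...)` before `native_readFrame`. Whether this costs less than glFinish() on this GPU is something I would have to measure.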