I'm trying to implement a three phase parallel scan as described in chapter 8 of Programming Massively Parallel Processors 3rd edition (there are any line of code but only instructions). This algorithm allow to use only 1 block with the maximum number of threads in the block, and it is limited by the size of shared memory because all elements must to fit into the shared memory

After some debugging, I encoutered a problem during the sum in Phase 3 (see the code below) when I use a big number of elements, for instance 8192, and more than 1 thread.

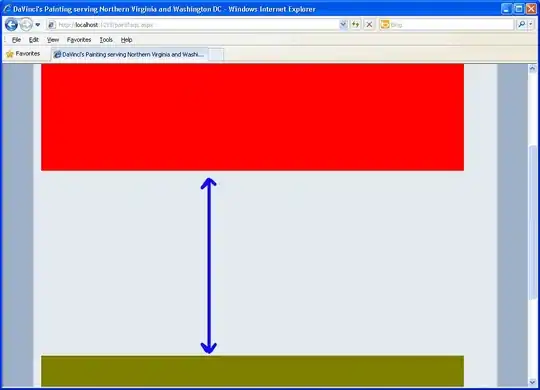

The graphic concept of the algorith is the follow:

And below you can see the kernel code:

__global__

void efficient_Kogge_Stone_scan_kernel(float *X, float *Y, int InputSize) {

__shared__ float XY[SECTION_SIZE];

__shared__ float AUS[BLOCK_DIM];

//int i = blockIdx.x * blockDim.x + threadIdx.x;

// Keep mind: Partition the input into blockDim.x subsections: i.e. for 8 threads --> 8 subsections

// collaborative load in a coalesced manner

for (int j = 0; j < SECTION_SIZE; j += blockDim.x) {

XY[threadIdx.x + j] = X[threadIdx.x + j];

}

__syncthreads();

// PHASE 1: scan inner own subsection

// At the end of this phase the last element of each subsection contains the sum of all alements in own subsection

for (int j = 1; j < SUBSECTION_SIZE; j++) {

XY[threadIdx.x * (SUBSECTION_SIZE)+j] += XY[threadIdx.x * (SUBSECTION_SIZE)+j - 1];

}

__syncthreads();

// PHASE 2: perform iterative kogge_stone_scan of the last elements of each subsections of XY loaded first in AUS

AUS[threadIdx.x] = XY[threadIdx.x * (SUBSECTION_SIZE)+(SUBSECTION_SIZE)-1];

float in;

for (unsigned int stride = 1; stride < blockDim.x; stride *= 2) {

__syncthreads();

if (threadIdx.x >= stride) {

in = AUS[threadIdx.x - stride];

}

__syncthreads();

if (threadIdx.x >= stride) {

AUS[threadIdx.x] += in;

}

}

__syncthreads();

// PHASE 3: each thread adds to its elements the new value of the last element of its predecessor's section

if (threadIdx.x > 0) {

for (unsigned int stride = 0; stride < (SUBSECTION_SIZE); stride++) {

XY[threadIdx.x * (SUBSECTION_SIZE)+stride] += AUS[threadIdx.x - 1]; // <--

}

}

__syncthreads();

// store the result into output vector

for (int j = 0; j < SECTION_SIZE; j += blockDim.x) {

Y[threadIdx.x + j] = XY[threadIdx.x + j];

}

}

If I use one thread in the block and 8192 elements, it works perfectly, but if I use more then one thread, result is wrong in XY[5793] (or X[5793] when it finished and stored the results). With 4096 elements and one or more threads up to 1024 threads it works. If I use int instead of float numbers, it works even with 8192 elements with one or more threads.

I tryed to verify also in MATLAB and these are the outputs comparison:

- X[5973] = 16788115 ---- MATLAB

- X[5973] = 16788114 ---- CPU

- X[5973] = 16788116 ---- GPU

As we can see, also the CPU result is different than MATLAB, so after these results I think that the problem is about the floating point addition, but I tell you that I filled the input array with ordered "x.00" float numbers (for instance {1.00, 2.00, 3.00, 4.00 ..... 8192.00}).

Another problem is about the performance, the host code is always faster than the kernel code, with these configuration parameters and these inputs, is it normal?

If you need for the entire source code you can find it here