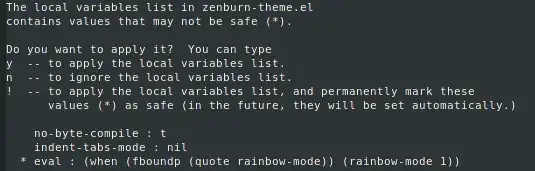

We are using Spark ML for logistic regression. When we run the job on 1 GB of input data, the logistic regression step creates a large number of stages. Each stage reads approximately 2.8 GB of input, so the total input reaches approximately 700 GB. Below is a sample of the code that calls the Spark ML logistic regression API:
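As a rough consistency check on these figures, assuming every stage reads about the same 2.8 GB, the stage count works out to:

$$
\frac{700\ \text{GB}}{2.8\ \text{GB per stage}} \approx 250\ \text{stages}
$$

Spark ML's `LogisticRegression` defaults to `maxIter = 100`. If the optimizer ran all the way to that cap, this would be roughly 2.5 data-scanning stages per iteration. That per-iteration figure is an inference from the totals above, not something measured here.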

```scala
import org.apache.spark.ml.classification.{LogisticRegression, LogisticRegressionModel}

var lRModel: LogisticRegressionModel = null

try {
  val logisticRegression = new LogisticRegression()
  // logisticRegression.setMaxIter(10)
  lRModel = logisticRegression.fit(logisticRegressionInputDF)
} catch {
  // Wrap any failure in our own exception type, keeping the original cause.
  case ex: Exception =>
    throw new ModellingUJTransformationException(
      "Exception while fitting logistic regression model on a LR input dataframe --" + ex.getMessage, ex)
}
```
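For comparison, here is a minimal sketch, assuming Spark ML 2.x or later. It sets the iteration budget explicitly instead of relying on the defaults (`maxIter = 100`, `tol = 1e-6`), then reads back how many optimizer iterations were actually run. Spark ML's optimizer typically launches at least one distributed aggregation over the training data per iteration, so the iteration count should be a rough proxy for the number of stages. The object `LogisticRegressionSketch`, the helper `fitWithBudget`, the values `10` and `1e-4`, and the toy DataFrame are all hypothetical illustrations, not recommended settings.

```scala
import org.apache.spark.ml.classification.{LogisticRegression, LogisticRegressionModel}
import org.apache.spark.ml.linalg.Vectors
import org.apache.spark.sql.{DataFrame, SparkSession}

object LogisticRegressionSketch {

  // Hypothetical helper: fit with an explicit iteration budget instead of the
  // defaults (maxIter = 100, tol = 1e-6).
  def fitWithBudget(input: DataFrame, maxIter: Int, tol: Double): LogisticRegressionModel =
    new LogisticRegression()
      .setMaxIter(maxIter) // upper bound on optimizer iterations
      .setTol(tol)         // convergence tolerance; a larger value can stop earlier
      .fit(input)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("lr-iteration-sketch")
      .getOrCreate()

    // Made-up toy data standing in for logisticRegressionInputDF.
    val training = spark.createDataFrame(Seq(
      (1.0, Vectors.dense(0.0, 1.1, 0.1)),
      (0.0, Vectors.dense(2.0, 1.0, -1.0)),
      (0.0, Vectors.dense(2.0, 1.3, 1.0)),
      (1.0, Vectors.dense(0.0, 1.2, -0.5))
    )).toDF("label", "features")

    val model = fitWithBudget(training, maxIter = 10, tol = 1e-4)

    // The training summary records the objective value at each iteration,
    // which shows how many iterations the optimizer actually ran.
    val summary = model.summary
    println(s"iterations run: ${summary.totalIterations}")
    println(s"objective history: ${summary.objectiveHistory.mkString(", ")}")

    spark.stop()
  }
}
```

If `totalIterations` sits at the `maxIter` cap, the optimizer is stopping on the iteration limit rather than on `tol`. Comparing the objective history against the number of stages in the Spark UI should show whether the stage count tracks the iteration count.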

The DAG for that stage is attached as the image at the top of this post.