Good morning,

In Python, I have a dictionary called `packet_size_dist` with the following values:

```python
packet_size_dist = {
    34: 0.00909909009099,
    42: 0.02299770023,
    54: 0.578742125787,
    58: 0.211278872113,
    62: 0.00529947005299,
    66: 0.031796820318,
    70: 0.0530946905309,
    74: 0.0876912308769,
}
```

Note that the values sum to 1 (up to floating-point rounding).
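One way to check the sum, as a small sketch: adding floats can leave a tiny rounding residue, so an exact `== 1` test is fragile. The snippet re-creates the dictionary from the values listed above and compares the total with `math.isclose`; the tolerance `rel_tol=1e-9` is an arbitrary choice, not something from the original code.

```python
import math

packet_size_dist = {
    34: 0.00909909009099,
    42: 0.02299770023,
    54: 0.578742125787,
    58: 0.211278872113,
    62: 0.00529947005299,
    66: 0.031796820318,
    70: 0.0530946905309,
    74: 0.0876912308769,
}

# fsum tracks intermediate partial sums, avoiding the error a plain sum() can accumulate
total = math.fsum(packet_size_dist.values())

print(total == 1.0)  # False: the listed values add up to about 0.99999999999978
print(math.isclose(total, 1.0, rel_tol=1e-9))  # True
```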

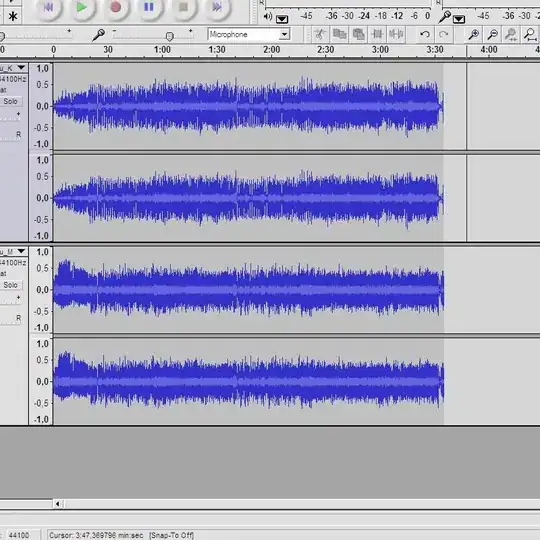

I am attempting to generate a CDF, which I successfully do, but it looks wrong and I am wondering if I am going about generating it incorrectly. The code in question is:

sorted_p = sorted(packet_size_dist.items(), key=operator.itemgetter(0))

yvals = np.arange(len(sorted_p))/float(len(sorted_p))

plt.plot(sorted_p, yvals)

plt.show()

But the resulting graph looks like this:

This doesn't seem to match the values in the dictionary. For example, the graph seems to show a packet size of 70 occurring about 78% of the time, whereas in my dictionary it occurs only about 5% of the time. I also see a faint green line toward the left of the graph, and I don't know what it is. Any ideas?
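For comparison, here is a minimal sketch, assuming the intended curve is the CDF of packet size weighted by the dictionary's probabilities. It repeats the original sorting step and prints two things that may explain the plot. First, `np.asarray(sorted_p)` is an 8×2 array of (size, probability) pairs; when `plt.plot` receives a 2-D `x`, matplotlib draws one line per column, so the probabilities (all between 0 and 1) plotted against `yvals` would give a second line hugging the left edge, possibly the green one. Second, `yvals` is just i/8 for the i-th sorted size (i = 0..7) and never uses the probabilities; 70 sits at index 6, so it gets 6/8 = 0.75, close to the 78% read off the graph. That is the empirical CDF you would get if each size were a single, equally likely observation. A probability-weighted CDF instead takes the running total of the probabilities with `np.cumsum`. The names `sizes`, `probs` and `cdf` are illustrative only.

```python
import operator

import numpy as np

packet_size_dist = {
    34: 0.00909909009099,
    42: 0.02299770023,
    54: 0.578742125787,
    58: 0.211278872113,
    62: 0.00529947005299,
    66: 0.031796820318,
    70: 0.0530946905309,
    74: 0.0876912308769,
}

# Same sorting step as the original code: (size, probability) pairs ordered by size
sorted_p = sorted(packet_size_dist.items(), key=operator.itemgetter(0))

# What the original plt.plot call receives as x: one row per pair, two columns
print(np.asarray(sorted_p).shape)  # (8, 2)

# The original yvals: index / count, independent of the probabilities
yvals = np.arange(len(sorted_p)) / float(len(sorted_p))

# Probability-weighted CDF: split the pairs, then take the running total
sizes = np.array([size for size, _ in sorted_p])
probs = np.array([p for _, p in sorted_p])
cdf = np.cumsum(probs)

for size, y_old, y_cdf in zip(sizes, yvals, cdf):
    print(f"{size}: original y = {y_old:.3f}, cumulative probability = {y_cdf:.3f}")
```

A CDF reports P(size ≤ x), so even the weighted curve reads about 0.91 at 70; the 5% for size 70 shows up as the height of the step at 70 (`cdf[6] - cdf[5]`). To draw it, `plt.step(sizes, cdf, where="post")` followed by `plt.show()` should give the staircase shape a discrete CDF normally has.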