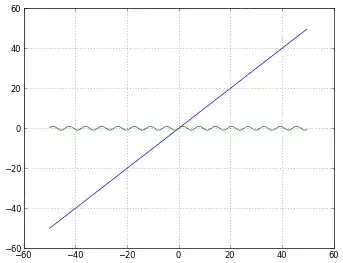

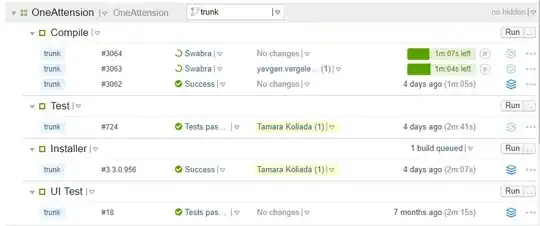

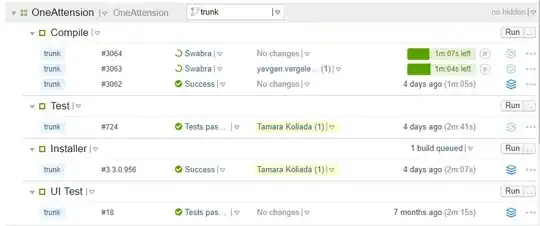

I tried displaying the weights like this (only the first 25). I have the same question you do: are these the filters, or something else? They don't look like the filters derived from deep belief networks or stacked RBMs.

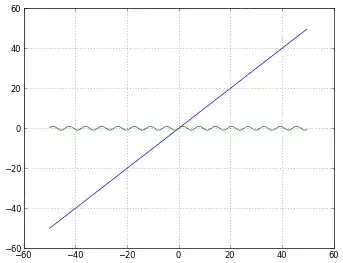

Here are the untrained weights, visualized:

And here are the trained weights:

Strangely, there is no change after training! If you compare them, they are identical.

And here are the DBN RBM filters, with layer 1 on top and layer 2 on the bottom:

If I set kernel_initializer="ones", I get filters that look good, but the network's loss never decreases, even after many trial-and-error changes:

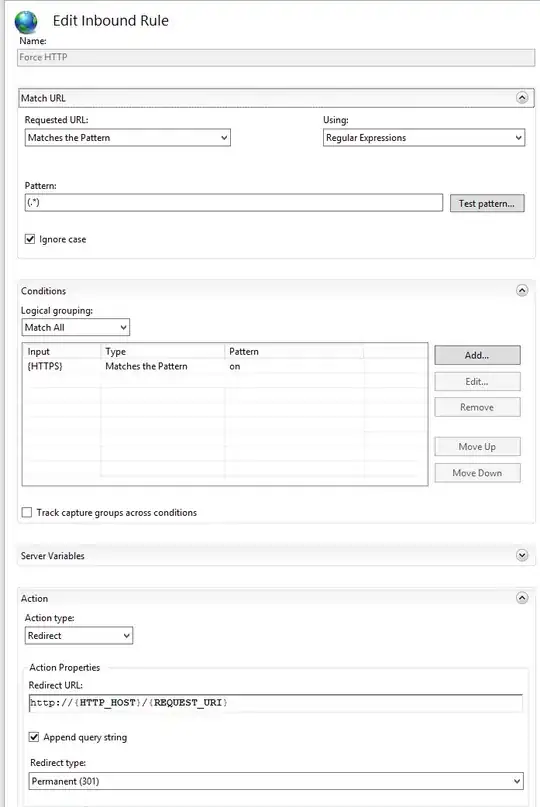

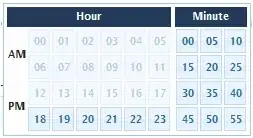

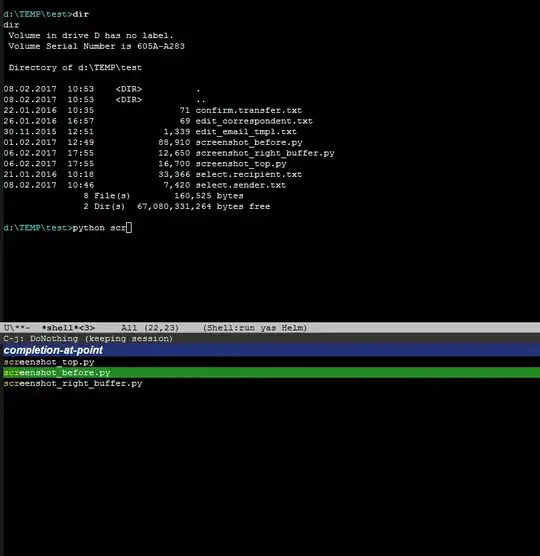

Here is the code to display the Conv2D weights (filters):

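    import matplotlib.pyplot as plt
    from keras.models import Sequential
    from keras.layers import Conv2D, Activation

    ann = Sequential()
    x = Conv2D(filters=64, kernel_size=(5, 5), input_shape=(32, 32, 3))
    ann.add(x)
    ann.add(Activation("relu"))
    ...

    # The kernel has shape (5, 5, 3, 64); keep input channel 0 only -> (5, 5, 64).
    x1w = x.get_weights()[0][:, :, 0, :]
    for i in range(1, 26):
        plt.subplot(5, 5, i)
        # Subplot indices start at 1, filter indices at 0, hence i - 1.
        plt.imshow(x1w[:, :, i - 1], interpolation="nearest", cmap="gray")
    plt.show()

    ann.fit(Xtrain, ytrain_indicator, epochs=5, batch_size=32)

    # Plot the same 25 filters again after training.
    x1w = x.get_weights()[0][:, :, 0, :]
    for i in range(1, 26):
        plt.subplot(5, 5, i)
        plt.imshow(x1w[:, :, i - 1], interpolation="nearest", cmap="gray")
    plt.show()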
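
Since the before and after plots can look identical, it may help to compare the kernels numerically rather than by eye. The following is a minimal NumPy sketch, not part of my original code: `max_weight_change` is a hypothetical helper, and the two arrays are random stand-ins for copies of `x.get_weights()[0]` taken before and after `ann.fit(...)`.

```python
import numpy as np

def max_weight_change(before, after):
    """Return the largest absolute element-wise difference between two weight arrays."""
    before = np.asarray(before, dtype=np.float64)
    after = np.asarray(after, dtype=np.float64)
    return float(np.max(np.abs(after - before)))

# Stand-in arrays with the same shape as the Conv2D kernel: (5, 5, 3, 64).
# In practice these would be x.get_weights()[0] before and after training.
rng = np.random.default_rng(0)
w_before = rng.normal(scale=0.05, size=(5, 5, 3, 64))
w_after = w_before + 1e-6 * rng.normal(size=w_before.shape)  # hypothetical tiny update

change = max_weight_change(w_before, w_after)
print(f"largest change: {change:.2e}")
print("too small to see in a plot:", np.allclose(w_before, w_after, atol=1e-4))
```

A change of exactly zero would suggest the layer is not being updated at all, while a tiny but nonzero change suggests the updates are simply too small to show up in a grayscale plot.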

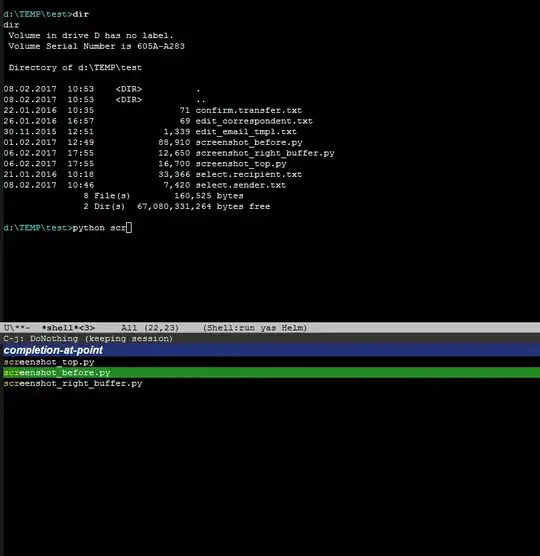

---------------------------UPDATE------------------------

So I tried again with a learning rate of 0.01 instead of 1e-6, and with the images normalized to between 0 and 1 (instead of 0 to 255) by dividing them by 255.0. Now the convolution filters are changing, and the output of the first convolutional filter looks like this:

You'll notice that the trained filters have changed (though not by much) with a reasonable learning rate:

Here is image seven of the CIFAR-10 test set:

And here is the output of the first convolution layer:

If I take the output of the last convolution layer (with no dense layers in between) of the untrained network and feed it to a classifier, the accuracy is similar to classifying the raw images. But if I train the convolution layers first, the output of the last convolution layer increases the accuracy of the classifier (a random forest).

So I would conclude that the weights of the convolution layers are indeed the filters.
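
To make that conclusion a bit more concrete, here is a minimal NumPy sketch of what a convolution layer computes, assuming stride 1 and "valid" padding (the Keras `Conv2D` defaults): each output map is the input image slid against one slice of the kernel array, without flipping the kernel. The function `conv2d_valid` and the random stand-in arrays are hypothetical; in practice the kernel and bias would come from `x.get_weights()`.

```python
import numpy as np

def conv2d_valid(image, kernel, bias):
    """Slide each filter over the image (stride 1, no padding, no kernel flip).

    image:  (height, width, channels)
    kernel: (kh, kw, channels, n_filters)
    bias:   (n_filters,)
    """
    kh, kw, _, n_filters = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w, n_filters))
    for r in range(out_h):
        for c in range(out_w):
            patch = image[r:r + kh, c:c + kw, :]  # (kh, kw, channels)
            # Sum patch * filter over height, width, and channels for every filter.
            out[r, c, :] = np.tensordot(patch, kernel, axes=3) + bias
    return out

# Stand-ins shaped like a CIFAR-10 image and the first layer's weights.
rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))
kernel = rng.normal(scale=0.05, size=(5, 5, 3, 64))
bias = np.zeros(64)

feature_maps = np.maximum(conv2d_valid(image, kernel, bias), 0.0)  # ReLU, as in the model
print(feature_maps.shape)  # (28, 28, 64)
```

Plotting `feature_maps[:, :, k]` for a given `k` shows the response of filter `k`, which is the kind of picture shown for the first convolution layer's output above.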