I use Q-learning with a neural network as the function approximator. After several training iterations, the weights take values in the range from 0 to 10. Can weights take such values, or does this indicate bad network parameters?

- Why exactly do you think weights of that size matter? – Thomas Wagenaar Apr 06 '17 at 11:22

- I've never seen weights take values greater than 1, so it seems to me that I'm doing something wrong. – Apr 06 '17 at 11:26

- Hmm, I have worked with neural networks in JavaScript and I haven't heard of weight restrictions yet. I have seen weights like -600 or +800 in extremely backpropagated networks. It's also strange that you don't mention negative weights. What programming language are you using? – Thomas Wagenaar Apr 06 '17 at 12:13

- @ThomasW I do have negative weights. If you say that weights like -600 and +800 are OK, then I have nothing to worry about? – Apr 06 '17 at 12:34

- I think it isn't a problem. If you google for 'neural network weights' https://www.google.nl/search?q=neural+networks+weights&source=lnms&tbm=isch the first few images indeed show weights between 0 and 1, but dig a little deeper and you will find many pictures with weights larger than 1 (e.g. https://derrickmartins.files.wordpress.com/2015/05/annplot01.png). – Thomas Wagenaar Apr 06 '17 at 12:44

- Weight limits are sometimes applied when training a NN using search algorithms (e.g. GA), but not when performing backpropagation. The weights may become especially saturated if the input signal consists of high values. – jorgenkg Apr 06 '17 at 14:40

1 Answer

Weights can take those values, especially after a large number of training iterations: the connections that need to be 'heavy' get 'heavier'.
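To make this concrete, here is a minimal sketch (not the asker's Q-learning setup) of a single linear neuron trained by plain gradient descent. The target function `y = 5x - 3`, the learning rate, and the helper name `fit_linear_neuron` are all assumptions chosen for illustration. Because the target itself needs a slope of 5, the learned weight has to grow well beyond 1, and nothing in the update rule stops it.

```python
import numpy as np

def fit_linear_neuron(steps=2000, lr=0.1, seed=0):
    """Train y_hat = w * x + b with gradient descent on mean squared error.

    Hypothetical toy problem: the data come from y = 5x - 3, so a correct
    fit requires |w| and |b| to be larger than 1.
    """
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 1.0, size=200)   # inputs already scaled to [-1, 1]
    y = 5.0 * x - 3.0                      # assumed target function
    w, b = 0.0, 0.0                        # start from zero weights
    for _ in range(steps):
        err = w * x + b - y                # prediction error per sample
        w -= lr * np.mean(err * x)         # gradient of 0.5 * mean(err**2) w.r.t. w
        b -= lr * np.mean(err)             # gradient of 0.5 * mean(err**2) w.r.t. b
    return w, b

w, b = fit_linear_neuron()
print(w, b)  # both end up far outside the range [-1, 1]
```

The same reasoning should carry over to a Q-network: if the values being approximated are large, at least some weights have to be large too.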

There are plenty of examples showing neural networks with weights larger than 1. Example.

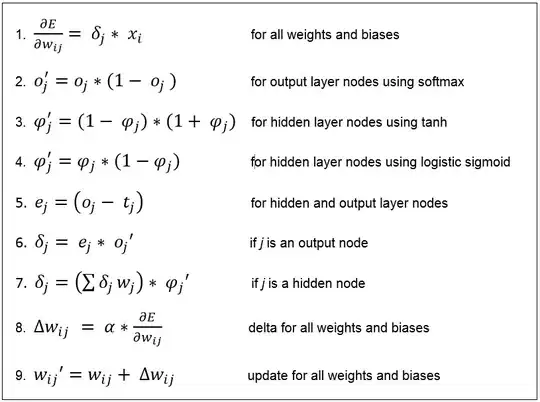

Also, following this image, there is no such thing as weight limits:

Thomas Wagenaar

- Yeah, the clarification was indeed needed. I was also freaked out to see my weights grow so much. – corlaez Aug 19 '17 at 15:58