I'm trying to use OpenCV to find traffic cones in an image.

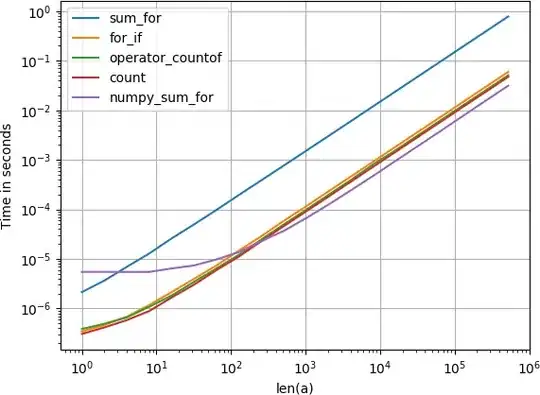

The code uses a variety of methods to crop regions of interest out of the image and to find precise bounding boxes for the cones. One approach that works quite well is color segmentation, followed by blob detection and then a nearest-neighbour classifier that removes false positives.

Instead of blob detection, I would like to use Haar cascades, so that regions are selected based on both color (HSV segmentation) and shape (Haar cascades).

I used this tutorial: http://docs.opencv.org/trunk/dc/d88/tutorial_traincascade.html

I'm only looking for a specific type of traffic cone with a known size and color, so I made a template for the shape of such a cone. Here is the command I used to generate the training samples, along with the template and one of the positive samples generated for training (the sample was generated at a higher resolution; for training I'm using 20x20 pixels):

    opencv_createsamples -vec blue.vec -img singles/blue.png -bg bg.txt -num 10000 -maxidev 25 -maxxangle 0.15 -maxyangle 0.15 -maxzangle 0.15 -w 20 -h 20 -bgcolor 0 -bgthresh 1
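To check what the cascade is actually trained on, the generated samples can be read back out of `blue.vec`. The sketch below assumes the layout that `opencv_createsamples` writes, as far as I understand it, and assumes a little-endian machine. The layout is an int32 sample count, an int32 vector size (w·h) and two int16 padding values. Each sample then follows as one padding byte plus w·h int16 pixel values. The helper names are hypothetical.

```python
import struct


def read_vec(path):
    """Read all samples from an opencv_createsamples .vec file.

    Assumed layout: int32 count, int32 vec_size (= w * h), two int16
    padding values; then per sample one padding byte followed by
    vec_size int16 pixel values. Returns a list of flat pixel lists.
    """
    with open(path, "rb") as f:
        count, vec_size, _, _ = struct.unpack("<iihh", f.read(12))
        samples = []
        for _ in range(count):
            f.read(1)  # per-sample padding byte
            samples.append(list(struct.unpack("<%dh" % vec_size, f.read(2 * vec_size))))
    return samples


def to_rows(sample, w, h):
    """Reshape one flat sample into h rows of w pixels each."""
    return [sample[r * w:(r + 1) * w] for r in range(h)]
```

For the command above, this would be `read_vec("blue.vec")` followed by `to_rows(sample, 20, 20)`. Alternatively, running `opencv_createsamples` with only `-vec`, `-w` and `-h` displays the stored samples.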

The training was done with this command:

    opencv_traincascade -data blue_data/ -vec blue.vec -bg bg.txt -numPos 1000 -numNeg 9000 -numStages 10 -numThreads 8 -featureType HAAR -w 20 -h 20 -precalcValBufSize 4096 -precalcIdxBufSize 4096
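Both commands read negatives from `bg.txt`. According to the OpenCV tutorial, this file is a text file listing one background image per line, with paths relative to the directory of the description file. A minimal sketch for generating it is below. The directory name, the extension set and the function name `write_bg_list` are assumptions.

```python
import os
from pathlib import Path


def write_bg_list(image_dir, list_path="bg.txt", exts=(".jpg", ".jpeg", ".png", ".bmp")):
    """Write a -bg description file: one negative image path per line.

    Paths are made relative to the directory that contains the list file.
    Returns the number of images listed.
    """
    list_path = Path(list_path)
    base = list_path.resolve().parent
    images = sorted(
        p for p in Path(image_dir).rglob("*")
        if p.is_file() and p.suffix.lower() in exts
    )
    lines = [Path(os.path.relpath(p.resolve(), base)).as_posix() for p in images]
    list_path.write_text("\n".join(lines) + "\n")
    return len(lines)
```

Usage would be something like `write_bg_list("negatives", "bg.txt")` (with a hypothetical `negatives/` folder) before running `opencv_createsamples`.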

Training finishes very quickly and stops well before the requested 10 stages:

    ===== TRAINING 3-stage =====
    <BEGIN
    POS count : consumed 1000 : 1000
    NEG count : acceptanceRatio 9000 : 0.00248216
    Precalculation time: 18
    +----+---------+---------+
    |  N |    HR   |    FA   |
    +----+---------+---------+
    |   1|        1|        1|
    +----+---------+---------+
    |   2|        1|        1|
    +----+---------+---------+
    |   3|        1| 0.199556|
    +----+---------+---------+
    END>
    Training until now has taken 0 days 0 hours 5 minutes 44 seconds.
    ===== TRAINING 4-stage =====
    <BEGIN
    POS count : consumed 1000 : 1000
    NEG count : acceptanceRatio 0 : 0
    Required leaf false alarm rate achieved. Branch training terminated.
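The log can be compared with the targets that the OpenCV tutorial gives for the whole cascade. The overall hit rate is estimated as `minHitRate ** numStages`, and the overall false alarm rate as `maxFalseAlarmRate ** numStages`. The training command above sets neither parameter, so this sketch assumes the documented defaults of 0.995 and 0.5. The helper name `cascade_targets` is hypothetical.

```python
def cascade_targets(num_stages, min_hit_rate=0.995, max_false_alarm_rate=0.5):
    """Estimated overall (hit rate, false alarm rate) of a cascade.

    Both are the per-stage rate raised to the number of stages.
    """
    return min_hit_rate ** num_stages, max_false_alarm_rate ** num_stages


hit, fa = cascade_targets(10)  # -numStages 10
print(f"overall hit rate target  ~ {hit:.4f}")
print(f"overall false alarm target ~ {fa:.6f}")

# Acceptance ratio of the negatives reported at stage 3 in the log above.
stage3_acceptance = 0.00248216
print("stage 3 already at target:", stage3_acceptance <= fa)
```

With 10 stages the false alarm target is about 0.00098. The acceptance ratio of 0.00248 at stage 3 is still above that target, but the 0 reported at stage 4 is not. This is consistent with the "Required leaf false alarm rate achieved" message ending training well before `numStages` is reached.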

I then applied the cascade to a test image. The cone in the upper left corner is my template, stamped onto the image.

I don't think the results look good. The template is found, but there are lots of false positives, and some of them look rather strange to me. For example, there is one cone on the left with a bright base and tip and a black stripe; in color it is yellow and black. The classifier detects it neatly. But how? This cone is basically the inverse of the template, and I'm not using inverted colors as augmentation for the training samples.
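It may help to compute a Haar-like feature by hand when reasoning about what the cascade can and cannot tell apart. The pure-Python sketch below builds an integral image and evaluates a two-rectangle vertical feature (top half minus bottom half) on a tiny synthetic patch. The helper names are hypothetical. For two rectangles of equal area, inverting the intensities (`255 - p`) flips the sign of the response, so a single thresholded feature does encode polarity. The cascade works on grayscale, so yellow versus blue only appears as a brightness difference. How a full boosted stage combines many such features is not modelled here.

```python
def integral_image(img):
    """Summed-area table with a zero border: ii[y][x] = sum of img[:y][:x]."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w x h rectangle with top-left corner (x, y), in O(1)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_vertical(ii, x, y, w, h):
    """Top-half sum minus bottom-half sum of a window (h must be even)."""
    half = h // 2
    return rect_sum(ii, x, y, w, half) - rect_sum(ii, x, y + half, w, half)


# Dark top, bright bottom, and the same patch with inverted intensities.
patch = [[10] * 4, [10] * 4, [200] * 4, [200] * 4]
inverted = [[255 - p for p in row] for row in patch]
print(two_rect_vertical(integral_image(patch), 0, 0, 4, 4))
print(two_rect_vertical(integral_image(inverted), 0, 0, 4, 4))
```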

Once training has finished, there are three things you can do to tweak the performance:

- Resizing the input image
- Changing the scaling factor (`scaleFactor`) of `detectMultiScale`
- Changing the `minNeighbors` parameter
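These knobs can also be swept systematically and scored against a known box, rather than tuned by eye. The template stamped into the upper left corner gives one such ground-truth box. The sketch below covers `scaleFactor` and `minNeighbors` only; resizing the input would be a third loop around it, with the truth boxes scaled accordingly. The names `iou` and `sweep` and the 0.5 IoU threshold are assumptions.

```python
import itertools


def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union else 0.0


def sweep(cascade, gray, truth, scale_factors, min_neighbors_values, iou_thresh=0.5):
    """Return (scaleFactor, minNeighbors, truth_found, false_positives) per setting.

    truth_found counts truth boxes matched by at least one detection;
    false_positives counts detections that match no truth box.
    """
    results = []
    for sf, mn in itertools.product(scale_factors, min_neighbors_values):
        raw = cascade.detectMultiScale(gray, scaleFactor=sf, minNeighbors=mn)
        dets = [tuple(int(v) for v in d) for d in raw]
        found = sum(1 for t in truth if any(iou(d, t) >= iou_thresh for d in dets))
        false_pos = sum(1 for d in dets if all(iou(d, t) < iou_thresh for t in truth))
        results.append((sf, mn, found, false_pos))
    return results
```

Printing the result table for a few images makes it easier to see whether any setting finds the template without flooding the image with detections.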

None of them work for me. In fact, the example above is almost cherry-picked: with most other settings, the detector returns very many false positives or even misses the template in the corner.

To take a closer look, I cropped a region out of the image and processed it with:

    cone_cascade.detectMultiScale(crop, scaleFactor=1.0001, minNeighbors=0)

The idea was to make sure that all scales are looked at and all matches are kept.
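The number of scales implied by `scaleFactor=1.0001` can be estimated roughly. This sketch assumes that each pyramid level multiplies the detection window by `scaleFactor`, and that levels continue while the scaled 20x20 window still fits inside the image. It ignores `minSize`/`maxSize` and rounding, so it is an approximation rather than OpenCV's exact loop. The crop size used below is hypothetical, since the actual crop dimensions aren't stated.

```python
import math


def num_scales(img_w, img_h, win_w=20, win_h=20, scale_factor=1.1):
    """Approximate number of pyramid levels a sliding-window detector visits.

    Level k uses scale scale_factor ** k; levels continue while
    scale_factor ** k <= min(img_w / win_w, img_h / win_h).
    """
    max_scale = min(img_w / win_w, img_h / win_h)
    if max_scale < 1:
        return 0  # the window does not fit even at scale 1
    return math.floor(math.log(max_scale) / math.log(scale_factor)) + 1


# Hypothetical 200x200 crop with the 20x20 training window.
print(num_scales(200, 200, scale_factor=1.1))
print(num_scales(200, 200, scale_factor=1.0001))
```

By this estimate, a 200x200 crop gives 25 levels at `scaleFactor=1.1` but roughly 23,000 at `scaleFactor=1.0001`. Every window that passes all stages at any of those levels becomes a candidate.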

I don't understand these results at all.

Is there something to keep in mind when using Haar cascades? Am I doing something wrong?