I am looking for a fast way to load my collection of HDF files into a numpy array where each row is a flattened version of an image. Here is exactly what I mean:

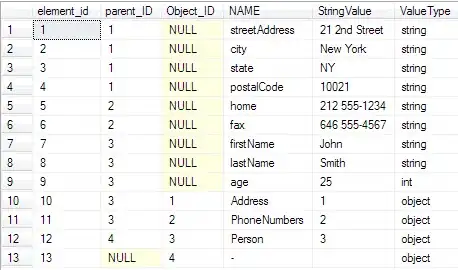

My HDF files store, among other information, images per frame. Each file holds 51 frames of 512x424 images. I now have 300+ HDF files, and I want the pixels of each frame stored as a single vector, with all frames of all files collected in one numpy ndarray. The picture above should help to illustrate this.

What I have so far is a very slow method, and I have no idea how I can make it faster. As far as I can tell, the problem is that my final array is accessed too often: the first files are loaded into the array very quickly, but the speed then drops off rapidly (I observed this by printing the number of the current HDF file).

My current code:

    import glob
    import os

    import h5py
    import numpy as np

    os.chdir(os.path.join(os.getcwd(), "datasets"))

    # predefine a placeholder first row so vstack can be used later
    numpy_data = np.ndarray((1, 217088))

    # search for all .hdf5 files
    for idx, file in enumerate(glob.glob("*.hdf5")):
        f = h5py.File(file, 'r')

        # load all img data into imgs (an ndarray, not yet flattened)
        imgs = f['img']['data'][:]

        # iterate over all frames in this file
        for frame in range(0, imgs.shape[0]):
            print("processing {}/{} (file/frame)".format(idx + 1, frame + 1))
            data = np.array(imgs[frame].flatten())
            numpy_data = np.vstack((numpy_data, data))

            # delete the placeholder first row once a real row has been stored
            if idx == 0 and frame == 0:
                numpy_data = np.delete(numpy_data, 0, 0)

        f.close()
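
For comparison, here is a minimal sketch of the alternative I can imagine: allocating the final array once and writing each file's frames into a slice, instead of growing the array with `np.vstack` (which copies everything stored so far on every call). The names `stack_frames`, `blocks` and `total_frames` are hypothetical, and the sketch assumes the total number of frames is known in advance. It uses small stand-in arrays rather than my real HDF files.

```python
import numpy as np


def stack_frames(blocks, total_frames, dtype=np.float64):
    """Copy per-file image stacks into one preallocated 2-D array.

    blocks:       iterable of arrays shaped (n_frames, height, width)
    total_frames: total number of frames over all blocks (assumed known)
    """
    out = None
    row = 0
    for imgs in blocks:
        imgs = np.asarray(imgs)
        n = imgs.shape[0]
        # one row per frame, all pixels of that frame in one vector
        flat = imgs.reshape(n, -1)
        if out is None:
            # allocate once, sized from the first block's pixel count
            out = np.empty((total_frames, flat.shape[1]), dtype=dtype)
        out[row:row + n] = flat
        row += n
    if out is None:
        return np.empty((0, 0), dtype=dtype)
    # drop unused rows if fewer frames arrived than were reserved
    return out[:row]


# Stand-in data: 3 "files" with 2 frames of 4x5 pixels each
demo_blocks = [np.full((2, 4, 5), i, dtype=np.float64) for i in range(3)]
result = stack_frames(demo_blocks, total_frames=6)
print(result.shape)  # (6, 20)
```

In my case each block would be what `f['img']['data'][:]` returns for one file, and `total_frames` would be 51 times the number of files, assuming every file really holds 51 frames.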

For context, I need this to train a decision tree. Since my HDF data is larger than my RAM, I think converting it into a numpy array saves memory and is therefore better suited.
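
To put a number on the memory question, here is a rough estimate of how large the final array would be. It is only a sketch: `array_size_bytes` is a hypothetical helper, 300 files is taken as a lower bound for "300+", and the `float32` and `uint16` rows are assumptions about what the image data might allow. The `float64` row matches my code above, because `np.ndarray((1, 217088))` defaults to `float64`.

```python
import numpy as np


def array_size_bytes(n_rows, n_cols, dtype):
    """Size in bytes of a dense (n_rows, n_cols) array of the given dtype."""
    return n_rows * n_cols * np.dtype(dtype).itemsize


n_files = 300            # "300+" files, taken as a lower bound
frames_per_file = 51
pixels = 512 * 424       # 217088 values per flattened frame
n_rows = n_files * frames_per_file

for dtype in (np.float64, np.float32, np.uint16):
    size = array_size_bytes(n_rows, pixels, dtype)
    print("{}: {:.1f} GB".format(np.dtype(dtype).name, size / 1e9))
```

With these numbers the `float64` array alone comes to roughly 26.6 GB, so the dense numpy array does not obviously fit in RAM either.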

Thanks for any input.