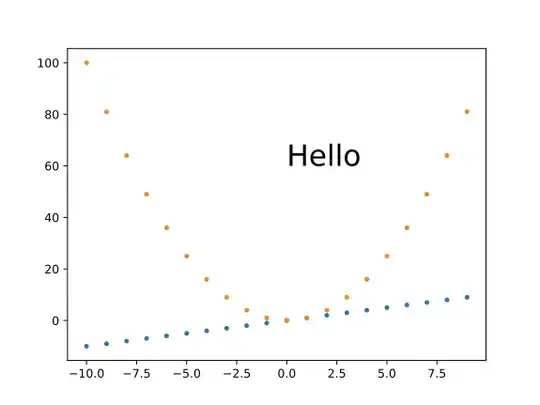

It looks like you have a distribution which we could model with this type of power function: y = a * b^(x). Assume that nonlinear regression does not exist, we can solve this problem with "linear regression" which probably uses the least squares method. We just need to transform the axes by calculating the logarithm of both sides of the equation. Again, we just don't know "a" & "b".

ln(y) = ln[a * b^(x)] # I'm using a natural logarithm (base e).

ln(y) = ln(a) + ln(b^x)

ln(y) = [ln(b)]*(x) + ln(a) <------> Y = m(X) + B,

where m = slope, and B = vertical-intercept. I used capital B so we wouldn't get confused.

Does that look like a linear equation to you now?

So now we transform the y-axis to loge(y), get linear regression statistics, raise "m" and "B" over the base of our logarithm which is e.

so e^[ln(b)] gives us "b", and ...

e^[ln(a] gives us a,

and then we know what y = a * b^(x) numerically.

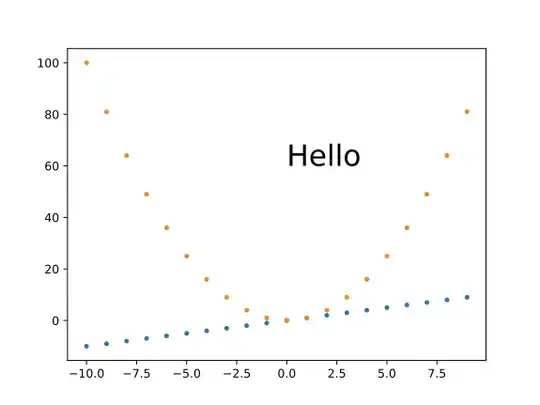

Let's calculate that. I'm going to eliminate 0 in "x" and "y". We will lose some precision, but as you know ln(0) = -infinity. We can't have that.

x <- 1:10

y <- c(1, 4, 9, 15, 26, 36.6, 50, 65, 81, 104)

Now it would be a good idea to check that we have the same number of singular variables in "x" and in "y", or else we can't graph the points.

length(x) == length(y)

1 TRUE

And "x" has 10 terms and so does "y" have 10 terms.

plot(y ~ x) # Let's see what the graph looks like. You already know.

Now transform it?

plot(log(y) ~ x))

Hmm, this doesn't look like a straight line after the transformation.

Therefore, it's not a power function. I was wrong. Again, this log is base e.

Let's try a double logarithmic plot.

plot(log(y) ~ log(x), main = "Double logarithmic plot to test for an exponential function", pch = 16, cex.main = 0.8)

It's a straight line, so we are in the clear. I was wrong about the power distribution. This exponential function fits the case that ...

y = a * x^(b),

which when calculating the logarithm of both sides of the equation, you get.

ln(y) = b[ln(x)] + ln(a)

so then: e^[ln(a)], where ln(a) is the vertical-intercept, = "a"

so then: b[ln(x)] or the logarithmic adjusted slope. We already have "e", no need to adjust it.

model <- lm(log(y) ~ log(x))

abline(model)

summary(model)

Call:

lm(formula = log(y) ~ log(x))

Residuals:

Min 1Q Median 3Q Max

-0.069478 -0.000490 0.005266 0.012249 0.031271

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -0.01376 0.02212 -0.622 0.551

log(x) 2.01349 0.01330 151.360 4.06e-15 ***

Signif. codes: 0 ‘’ 0.001 ‘’ 0.01 ‘’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.02925 on 8 degrees of freedom

Multiple R-squared: 0.9997, Adjusted R-squared: 0.9996

F-statistic: 2.291e+04 on 1 and 8 DF, p-value: 4.06e-15

so then in our y = a * x^(b) function, to get "a", we compute ...

exp(-0.01376)

1 0.9863342

plot(y ~ x, main = "Nonlinear Regression: y = 0.9863342 * x^(2.01349)", cex.main = 0.8)

Now, don't just trust me until you fit the curve for yourself.

curve(0.9863342 * x^(2.01349), col = "darkorchid3", add = TRUE)

So we have finally calculate that ...

y = a * x^(b) <----------> y = 0.9863342 * x^(2.01349),

so a = 0.9863342, and b = 2.01349

Technically, I did no nonlinear regression, no iterative guesses.

To give you a statistically correct answer I have to tell you that there are some standard errors from the least squares linear regression, and the errors adjusted in some way when I computed e^(-0.01376). But I've got a great fit.