newpred <- c(1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 0,
             0, 0, 1, 0, 1, 1, 0, 0, 1, 0, 1, 0, 0, 1, 1, 1, 0, 0, 1, 0, 0,
             0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 0,
             0, 1, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0,
             1, 0, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1,
             1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0)

newlab <- c(0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0,
            0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
            0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 1,
            0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
            0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0,
            0, 0, 1, 0, 0, 0, 0, 0, 0, 1,
            0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0)

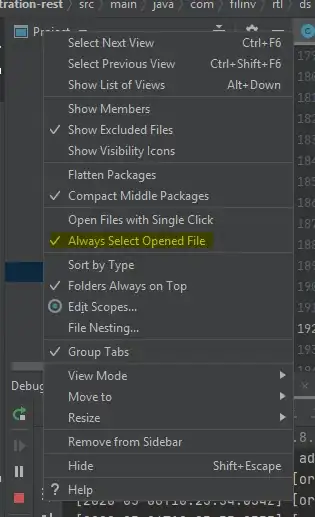

The first vector holds my predictions and the second holds the reference labels. I don't understand why my curve looks like a V; I've never seen a ROC curve like this. My advisor wants me to add more points so that the graph looks smoother and more curved. I tried plotting with pROC, but the only arguments I could supply were the predictions and the reference.

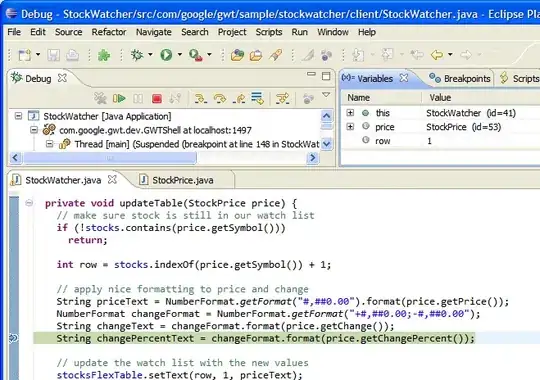

I also tried with ROCR:

print.cutoffs.at=seq(0,1,by=0.1), text.adj=c(-0.2,1.7))

How do I smooth the curve or add more points?
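For context, here is a minimal base-R sketch of why hard 0/1 predictions tend to give a V-shaped curve: a ROC curve gets one point per distinct threshold, and a 0/1 vector has only two distinct values. The helper `roc_points` is hypothetical (it is not part of pROC or ROCR), the data are simulated rather than taken from the vectors above, and `prob` stands in for the continuous scores or predicted probabilities a model would normally output before thresholding.

```r
# Hypothetical helper (not from pROC or ROCR): one ROC point per distinct threshold.
roc_points <- function(score, label) {
  # Thresholds: Inf (nothing predicted positive), then each distinct score, descending.
  thresholds <- c(Inf, sort(unique(score), decreasing = TRUE))
  tpr <- sapply(thresholds, function(t) sum(score >= t & label == 1) / sum(label == 1))
  fpr <- sapply(thresholds, function(t) sum(score >= t & label == 0) / sum(label == 0))
  data.frame(threshold = thresholds, fpr = fpr, tpr = tpr)
}

set.seed(1)
# Simulated data for illustration only.
label <- rbinom(100, 1, 0.3)                                    # reference labels
prob  <- plogis(rnorm(100, mean = ifelse(label == 1, 1, -1)))   # assumed continuous scores
hard  <- as.integer(prob >= 0.5)                                # thresholded 0/1 predictions

pts_hard <- roc_points(hard, label)  # (0,0), a single interior point, (1,1)
pts_prob <- roc_points(prob, label)  # (0,0) plus one point per distinct score

# Step curve from the continuous scores, with the 0/1 curve overlaid in red.
plot(pts_prob$fpr, pts_prob$tpr, type = "s",
     xlab = "False positive rate", ylab = "True positive rate")
lines(pts_hard$fpr, pts_hard$tpr, col = "red")
abline(0, 1, lty = 2)  # chance diagonal
```

Under these assumptions the 0/1 predictions yield only three points joined by two straight segments, while the continuous scores yield many. Passing such scores, rather than the 0/1 classes, as the predictor to pROC or ROCR should likewise produce more points on the curve.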