I am trying to understand the quote "In presence of correlated variables, ridge regression might be the preferred choice." Let's say we have variables a1, a2, b1, c2, and the two a's are correlated. If we use Lasso, it can eliminate one of the a's. Both Lasso and Ridge will do shrinkage, so it sounds like Lasso could be better under these conditions. But the quote says Ridge is better. Is the quote wrong, or am I missing something (maybe thinking too simply)?


Can you include a link to the quote? What's the context? – ilanman Mar 22 '17 at 13:10

3 Answers

The answer to this question largely depends on the type of dataset you are working on as well.

To provide a short answer to your question:

It is almost always good to have some regularization, so whenever possible, avoid "plain" linear regression. Ridge is a good default. However, if you suspect that only a few features in your feature set are actually useful, you should consider LASSO regularization or, alternatively, Elastic Net (explained below). These two methods tend to reduce the weights of "useless" features to zero.

In a case like yours, where you presumably have a lot of correlated features, you might be inclined to run one of these "zeroing" regularization methods. Both Elastic Net and LASSO could be used. However, Elastic Net is often preferred over LASSO, because LASSO may behave erratically when the number of features is larger than the number of instances in your training set, or when several features are very strongly correlated (as in your case).
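To make the correlated-feature behaviour concrete, here is a minimal sketch on made-up data. It assumes the extreme case where a2 is an exact copy of a1 (chosen only to make the effect easy to see); the variable names, the coefficients, and the alpha values are illustrative assumptions, not part of the question. Ridge should split the weight evenly between the two copies, while the LASSO solution for the pair is not unique, so which copy keeps the weight depends on the solver.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge

rng = np.random.default_rng(0)
n = 200

# Hypothetical data: a2 is an exact copy of a1, b1 is independent of both.
a1 = rng.normal(size=n)
a2 = a1.copy()
b1 = rng.normal(size=n)
X = np.column_stack([a1, a2, b1])
y = 3.0 * a1 + 2.0 * b1 + rng.normal(scale=0.5, size=n)

ridge = Ridge(alpha=1.0).fit(X, y)
lasso = Lasso(alpha=0.1).fit(X, y)

# Column order of the coefficients: a1, a2, b1
print("ridge coefficients:", ridge.coef_)
print("lasso coefficients:", lasso.coef_)
```

With near-duplicates instead of exact copies, which feature LASSO keeps can flip with small changes in the data, which is one way to read the "erratic" behaviour mentioned above.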

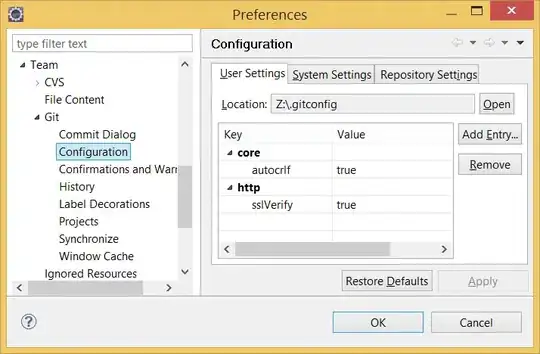

Elastic net regularization can be understood as a hybrid approach that blends the L2 and L1 penalties. Specifically, elastic net regression minimizes a cost function that adds a weighted mix of both penalty terms to the usual squared-error loss.

The mix ratio r hyper-parameter is between 0 and 1 and controls how much L2 or L1 penalization is used (0 is ridge, 1 is lasso).
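Written out, and assuming the form used in Géron's Hands-On Machine Learning (the symbols are assumptions here: α is the overall regularization strength, θ_i are the feature weights, and n is the number of features), the cost function would read:

$$
J(\theta) = \mathrm{MSE}(\theta) + r\,\alpha \sum_{i=1}^{n} \lvert \theta_i \rvert + \frac{1 - r}{2}\,\alpha \sum_{i=1}^{n} \theta_i^{2}
$$

Setting r = 0 leaves only the squared (ridge) term, and r = 1 leaves only the absolute-value (lasso) term, which matches the description of the mix ratio.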

Finally, Python's scikit-learn library provides ElasticNet for easy implementation. For instance:

from sklearn.linear_model import ElasticNet

elastic_net = ElasticNet(alpha=0.1, l1_ratio=0.5)  # l1_ratio is the mix ratio r

elastic_net.fit(X, y)  # X: training features, y: training targets
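If you would rather not pick alpha and the mix ratio by hand, scikit-learn's ElasticNetCV can search over both with cross-validation. The following is a rough, self-contained sketch; the synthetic data from make_regression and the candidate l1_ratio values are assumptions standing in for your own X, y, and search grid.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import ElasticNetCV

# Synthetic stand-in for X and y: 50 features, only 5 of which drive the target.
X, y = make_regression(n_samples=200, n_features=50, n_informative=5,
                       noise=10.0, random_state=0)

# Try several L1/L2 mixes; a grid of alpha values is generated for each mix.
model = ElasticNetCV(l1_ratio=[0.1, 0.5, 0.9, 1.0], cv=5)
model.fit(X, y)

print("selected alpha:", model.alpha_)
print("selected l1_ratio:", model.l1_ratio_)
print("non-zero coefficients:", int((model.coef_ != 0).sum()))
```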

If you are looking for a more mathematical explanation of how LASSO regularization works compared to Ridge regularization, I recommend Aurélien Géron's book Hands-On Machine Learning, or this resource from Stanford on regularization (with clear parallels to the MATLAB packages): https://web.stanford.edu/~hastie/glmnet/glmnet_alpha.html

Here is a Python-generated plot comparing the two penalties and cost functions:

With LASSO, we can observe that the Batch Gradient Descent path bounces across the gutter a bit towards the end. This is mostly because the slope changes abruptly at θ_2 = 0. The learning rate should be gradually reduced in order to converge to the global minimum (figure produced following the Hands-On ML guide).
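The bouncing can be reproduced on a toy problem. The sketch below is not the setup behind the figure; it assumes a hypothetical one-dimensional cost J(θ) = 0.5·(θ − c)² + α·|θ| with c = 0.3 and α = 0.5, whose minimum sits exactly at θ = 0, and compares a fixed learning rate with a decaying one.

```python
import numpy as np

def l1_gradient_descent(eta0, decay, steps=2000, theta=1.0, c=0.3, alpha=0.5):
    """Subgradient descent on J(theta) = 0.5*(theta - c)**2 + alpha*|theta|."""
    path = np.empty(steps)
    for t in range(steps):
        eta = eta0 / (1.0 + decay * t)  # decay=0 keeps the learning rate fixed
        subgrad = (theta - c) + alpha * np.sign(theta)  # slope jumps at theta = 0
        theta = theta - eta * subgrad
        path[t] = theta
    return path

fixed = l1_gradient_descent(eta0=0.1, decay=0.0)
decayed = l1_gradient_descent(eta0=0.1, decay=0.05)

# Because |c| < alpha, the minimizer is exactly theta = 0.
print("fixed rate, largest |theta| in last 50 steps:  ", np.abs(fixed[-50:]).max())
print("decayed rate, largest |theta| in last 50 steps:", np.abs(decayed[-50:]).max())
```

With the fixed rate, the iterate keeps jumping from one side of zero to the other because the slope has a different sign and size on each side. Shrinking the step size shrinks those jumps, which is why a decaying learning rate is suggested.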

Hope that helps!


In general there isn't a preferred approach. LASSO will likely drive certain coefficients to 0, whereas Ridge will not but will shrink their values.
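As a quick illustration of that difference, the sketch below counts exact zeros in the fitted coefficients. The synthetic dataset (20 features, 3 informative) and the alpha values are assumptions picked for the demonstration.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge

# Hypothetical data: 20 features, only 3 of which influence the target.
X, y = make_regression(n_samples=100, n_features=20, n_informative=3,
                       noise=5.0, random_state=0)

lasso = Lasso(alpha=1.0).fit(X, y)
ridge = Ridge(alpha=1.0).fit(X, y)

print("exact zeros, Lasso:", int(np.sum(lasso.coef_ == 0)))
print("exact zeros, Ridge:", int(np.sum(ridge.coef_ == 0)))
```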

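Also, Ridge is likely to be faster computationally, because minimizing a loss with the L2 penalty is easier than with the L1 penalty (LASSO): the ridge problem is smooth and has a closed-form solution, whereas the L1 penalty is not differentiable at zero and needs an iterative solver.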

If possible, why not implement both approaches and perform cross-validation to see which yields better results?
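A minimal sketch of such a comparison, assuming synthetic data in place of your own and untuned alpha values (in practice you would also cross-validate alpha for each model):

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge
from sklearn.model_selection import cross_val_score

# Synthetic stand-in for a real dataset.
X, y = make_regression(n_samples=200, n_features=30, n_informative=5,
                       noise=10.0, random_state=0)

models = {"ridge": Ridge(alpha=1.0), "lasso": Lasso(alpha=1.0)}

# scikit-learn reports negated MSE so that higher is always better.
scores = {
    name: cross_val_score(model, X, y, cv=5,
                          scoring="neg_mean_squared_error").mean()
    for name, model in models.items()
}

for name, score in scores.items():
    print(f"{name}: mean cross-validated MSE = {-score:.2f}")
```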

Finally, I would also recommend looking into Elastic Net, which is a sort of hybrid of LASSO and Ridge.


In general, one might expect the lasso to perform better in a setting where a relatively small number of predictors have substantial coefficients, and the remaining predictors have coefficients that are very small or that equal zero. Ridge regression will perform better when the response is a function of many predictors, all with coefficients of roughly equal size. However, the number of predictors that is related to the response is never known a priori for real data sets. A technique such as cross-validation can be used in order to determine which approach is better on a particular data set.

--ISL
