I've got an RDD in Spark which I've cached. Before caching it, I repartition it. This works, and I can see in the Storage tab of the Spark UI that it has the expected number of partitions.
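For reference, the repartition-then-cache step can be sketched roughly as below. This is only a minimal illustration, not my actual job: the class name `RepartitionThenCache`, the helper `cachedPartitionCount`, the `local[2]` master, the sample data and the partition count of 8 are all placeholders.

```java
import java.util.Arrays;

import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;

public class RepartitionThenCache {

    // Hypothetical helper: builds a tiny RDD, repartitions it, caches it,
    // and returns how many partitions the cached RDD ends up with.
    static int cachedPartitionCount(int numPartitions) {
        SparkConf conf = new SparkConf()
                .setMaster("local[2]")              // placeholder: local mode for the sketch
                .setAppName("repartition-then-cache");
        try (JavaSparkContext sc = new JavaSparkContext(conf)) {
            // Placeholder input standing in for the real data.
            JavaRDD<String> lines = sc.parallelize(Arrays.asList("a b", "b c", "c d"));

            // Repartition first, then mark the result for caching.
            JavaRDD<String> cached = lines.repartition(numPartitions).cache();

            // cache() is lazy; an action is needed to actually populate the cache.
            cached.count();

            return cached.getNumPartitions();
        }
    }

    public static void main(String[] args) {
        System.out.println(cachedPartitionCount(8));
    }
}
```

After the first action has run, the cached RDD is what shows up in the Storage tab with that partition count.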

This is what the stages look like on subsequent runs:

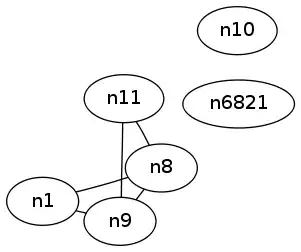

It's skipping a bunch of the work that went into my cached RDD, which is great. What I'm wondering, though, is why Stage 18 starts with a repartition. You can see that the repartition is already done at the end of Stage 17.

The steps I do in the code are:

    List<Tuple2<String, Integer>> rawCounts = rdd
        .flatMap(...)
        .mapToPair(...)
        .reduceByKey(...)
        .collect();

To get the RDD, I grab it out of the session context. I also have to wrap it since I'm using Java:

    JavaRDD<...> javaRdd = sc.emptyRDD();
    return javaRdd.wrapRDD((RDD<...>)rdd);

Edit

I don't think this is specific to repartitioning. I've removed the repartitioning, and now I'm seeing some of the other operations I do prior to caching appearing after the skipped stages. E.g.

The green dot and everything before it should have already been worked out and cached.